The sheer volume of content generated on the internet is astounding: roughly 500 million tweets a day, more than 50 billion photos shared on Instagram to date, and some 700,000 hours of video uploaded to YouTube each day. The World Economic Forum has estimated that 463 exabytes of data will be generated every day by 2025, where one exabyte equals one billion gigabytes. This digital deluge presents an unprecedented challenge for content moderation.

As we find ourselves at the intersection of free expression and responsible oversight, it’s evident that traditional content moderation methods are struggling to keep pace with the influx of information. AI has emerged as a promising way to manage it. How can AI moderate content at this scale while safeguarding content diversity?

The Evolution of AI in Content Moderation: From Rules to Machine Learning

The journey of content moderation began with manual review and straightforward rule-based systems that filtered content using predefined keywords. The rule-based filters were rigid, easily bypassed through alternate spellings, and unable to adapt to evolving content. Manual review was slow and costly, limited by human moderators’ capacity, and prone to error and bias, which allowed harmful material to persist. Most importantly, neither approach scales to the volume of content that platforms now handle.

As the limitations of rule-based systems became increasingly apparent, the need for more sophisticated approaches led to the integration of AI and machine learning (ML) into content moderation. Unlike their predecessors, AI-driven systems learn from data, identify patterns, and predict content that needs moderation, significantly improving accuracy and efficiency in handling vast amounts of content.

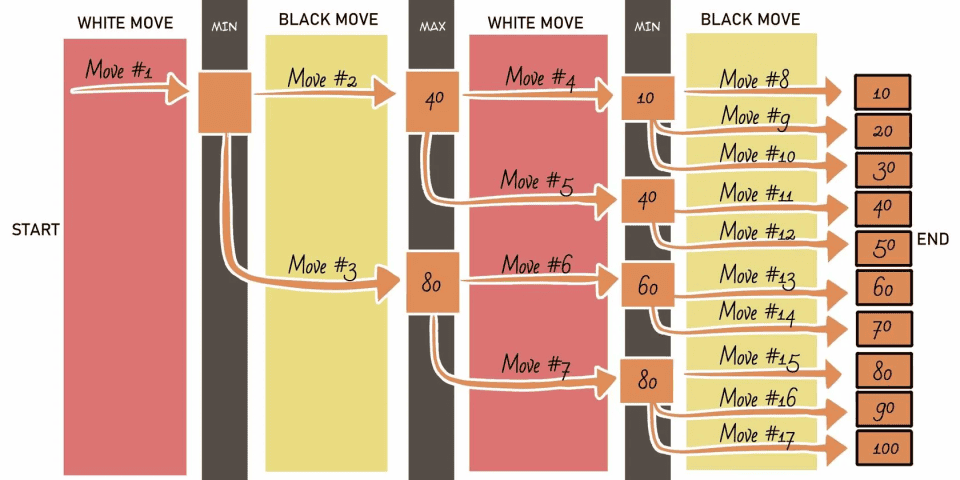

To define your content moderation strategy, it’s important to understand the different stages of content moderation:

Pre-moderation: Moderators review content before it is made public, ensuring it complies with guidelines and protecting the community from harmful material.

Post-moderation: Content is published in real-time, but flagged content is reviewed after the fact by moderators or AI, ensuring harmful material is addressed later while allowing immediate user interaction.

Reactive moderation: This approach relies on community members to flag inappropriate content for review. It serves as an additional safeguard, often used in combination with other methods, especially in smaller, tightly knit communities.

Distributed moderation: Users rate content, and the average rating determines if it aligns with the community’s rules. While this method encourages user engagement and can boost participation, it poses legal and branding risks and doesn’t guarantee real-time posting.
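As a rough illustration of distributed moderation, the sketch below averages user ratings and compares the result with a cutoff. The 1-to-5 rating scale, the `threshold` of 3.0, and the `min_votes` requirement are assumptions made for this example, not values taken from any particular platform.

```python
from statistics import mean

def community_verdict(ratings: list[int], threshold: float = 3.0, min_votes: int = 5) -> str:
    """Decide on a post from user ratings (assumed scale: 1 = unacceptable, 5 = fine)."""
    # Too few votes to trust the average yet, so the post stays unpublished.
    if len(ratings) < min_votes:
        return "pending"
    return "approved" if mean(ratings) >= threshold else "rejected"

print(community_verdict([5, 4, 4, 5, 3]))  # enough votes, high average
print(community_verdict([1, 2, 1, 2, 1]))  # enough votes, low average
print(community_verdict([5, 5]))           # not enough votes yet
```

The "pending" state shows why this method cannot guarantee real-time posting: content waits until enough users have rated it.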

Various AI/ML models and supporting techniques have been developed to make content moderation more efficient. They sift through and analyze content, identifying and addressing harmful or inappropriate material with greater speed and accuracy.

Hash Matching: This technique identifies harmful content by creating a digital fingerprint, or hash, for each piece. Cryptographic hash functions catch exact copies, while perceptual hashes can also catch near-duplicates such as resized or re-encoded images. When harmful content, like explicit images, is detected, its hash is stored in a database. As new content is uploaded, its hash is compared against the database, and if a match is found, the content is flagged or removed. For example, social media platforms use this method to block the re-upload of flagged content, such as child exploitation images.
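The store-and-compare flow described above can be sketched in a few lines. This is a minimal example that assumes SHA-256 as the hash function and an in-memory set as the database; the names `flagged_hashes`, `flag_content`, and `is_known_harmful` are hypothetical.

```python
import hashlib

# Hypothetical in-memory "database" of hashes for content already flagged as harmful.
flagged_hashes: set[str] = set()

def fingerprint(content: bytes) -> str:
    """Return the SHA-256 hex digest of the raw bytes."""
    return hashlib.sha256(content).hexdigest()

def flag_content(content: bytes) -> None:
    """Record the hash of content that a reviewer has judged harmful."""
    flagged_hashes.add(fingerprint(content))

def is_known_harmful(content: bytes) -> bool:
    """Check a new upload against the stored hashes."""
    return fingerprint(content) in flagged_hashes

flag_content(b"bytes of a flagged image")
print(is_known_harmful(b"bytes of a flagged image"))   # True: byte-identical re-upload
print(is_known_harmful(b"bytes of a flagged image."))  # False: one extra byte changes the hash
```

The second call shows the limit of a cryptographic hash: any change to the file produces a different digest, so it only catches byte-identical copies. Catching altered copies would need a perceptual hash, which this sketch does not implement.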

Keyword Filtering: This involves scanning content for specific words or phrases linked to harmful behavior, such as hate speech or violence. A list of flagged keywords is used to analyze new content, and if detected, the content is flagged for human review. For example, an online forum might flag comments with words like “kill” or “hate” for moderator attention.
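A keyword filter of this kind might look like the sketch below. The keyword list reuses the two words from the example above, and the function names are illustrative assumptions.

```python
import re

# Illustrative keyword list; a real deployment would maintain a much larger one.
FLAGGED_KEYWORDS = {"kill", "hate"}

# \b word boundaries keep "kill" from matching inside words such as "skillful".
_pattern = re.compile(
    r"\b(" + "|".join(re.escape(word) for word in sorted(FLAGGED_KEYWORDS)) + r")\b",
    re.IGNORECASE,
)

def find_flagged_keywords(text: str) -> list[str]:
    """Return the distinct flagged keywords found in the text, lowercased and sorted."""
    return sorted({match.group(1).lower() for match in _pattern.finditer(text)})

def needs_review(text: str) -> bool:
    """Flag a comment for human review if it contains any listed keyword."""
    return bool(find_flagged_keywords(text))

print(find_flagged_keywords("I HATE this. I could kill for a pizza."))  # ['hate', 'kill']
print(needs_review("She is a skillful player."))                        # False
print(needs_review("I h8 you"))                                         # False
```

The last call illustrates the weakness of keyword lists: an alternate spelling slips past the filter. The first call shows the opposite problem, since a harmless figure of speech is flagged, which is why matches go to human review instead of being removed automatically.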

Natural Language Processing (NLP): NLP encompasses techniques that enable machines to understand and interpret human language, including tokenization, part-of-speech tagging, and sentiment analysis. The process begins with pre-processing text to break it into tokens and tag parts of speech. ML models, trained on labeled datasets, classify content as harmful or benign. Advanced NLP techniques, such as sentiment analysis, assess the emotional tone of the text. For example, a content moderation system might use NLP to analyze comments on a video platform, flagging those with negative sentiment for further review.
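To make the tokenize-then-classify pipeline concrete, here is a toy sketch using a multinomial Naive Bayes classifier with add-one smoothing. Naive Bayes is only one possible choice of model, and the four training sentences are invented for illustration. Production systems would use far larger labeled datasets and typically stronger models.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayesClassifier:
    """Toy multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}  # label -> token counts
        self.doc_counts: Counter = Counter()       # label -> number of examples
        self.vocab: set[str] = set()

    def fit(self, examples: list[tuple[str, str]]) -> None:
        for text, label in examples:
            tokens = tokenize(text)
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.vocab.update(tokens)

    def predict(self, text: str) -> str:
        tokens = tokenize(text)
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus the sum of smoothed log likelihoods for each token.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(counts.values())
            for token in tokens:
                score += math.log((counts[token] + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Invented training data, for illustration only.
training_data = [
    ("you are an idiot and i hate you", "harmful"),
    ("nobody likes you, get lost", "harmful"),
    ("thanks for sharing this great video", "benign"),
    ("i love this tutorial, very helpful", "benign"),
]

classifier = NaiveBayesClassifier()
classifier.fit(training_data)
print(classifier.predict("I hate you, idiot"))       # harmful
print(classifier.predict("Great tutorial, thanks"))  # benign
```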

Unsupervised Text-Style Transfer: It adapts text into different styles without labeled data. A model learns from a large text corpus to generate new text in a different style while preserving meaning. For instance, it might rephrase harmful comments into neutral language, like changing “You are stupid!” to “I disagree with your opinion.”

Attention-Based Techniques: They help models focus on key parts of input data for better context understanding. By adding an attention layer to a neural network, the model weighs the importance of words. For example, in analyzing “I think that joke was funny, but it could be taken the wrong way,” the mechanism might focus on “could be taken the wrong way” to assess potential harm.
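The weighting step can be illustrated with scaled dot-product attention, one common formulation. In the sketch below, the two-dimensional token vectors and the query vector are hand-picked for illustration; in a trained model they would be learned from data.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hand-picked toy vectors (assumptions, not learned embeddings).
tokens = ["joke", "funny", "wrong"]
keys = [[0.2, 0.1], [1.0, 0.0], [0.0, 1.0]]
query = [0.0, 2.0]  # a query that points toward the "potential harm" direction

weights = attention_weights(query, keys)
for token, weight in zip(tokens, weights):
    print(f"{token:>6}: {weight:.2f}")
```

With these vectors, "wrong" receives the largest weight, mirroring how the mechanism in the example sentence would emphasize "could be taken the wrong way".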

Object Detection and Image Recognition: These methods are key for moderating visual content by detecting and locating objects in images and videos. Trained on datasets with labeled images, models learn to identify features distinguishing different objects. When new content is processed, the model flags predefined objects, such as weapons or hate symbols, to identify and address violent or harmful material.

Recurrent Neural Networks (RNNs): RNNs are valuable for moderating video content as they analyze sequences of frames to understand the context and narrative. By maintaining information from previous frames, RNNs can detect patterns indicative of harmful behavior, such as bullying or harassment.

Metadata Analysis: Metadata offers details on user interactions, profiles, and engagement metrics. Analyzing this data helps identify behavioral patterns, such as frequent harmful posts, enabling platforms to prioritize and review content from users with a history of negative interactions.
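One simple way to turn such metadata into a review priority is a weighted score per user. The fields in `UserStats` and the 0.7/0.3 weights below are assumptions chosen for illustration, not a recommended formula.

```python
from dataclasses import dataclass

@dataclass
class UserStats:
    user_id: str
    total_posts: int
    removed_posts: int     # posts previously removed by moderators
    reports_received: int  # times other users reported this account

def risk_score(stats: UserStats) -> float:
    """Combine removal rate and report rate into a score between 0 and 1."""
    if stats.total_posts == 0:
        return 0.0  # no history to judge
    removal_rate = stats.removed_posts / stats.total_posts
    report_rate = min(stats.reports_received / stats.total_posts, 1.0)
    # Illustrative weights: confirmed removals count more than unverified reports.
    return 0.7 * removal_rate + 0.3 * report_rate

def review_order(users: list[UserStats]) -> list[UserStats]:
    """Sort users so that content from the riskiest accounts is reviewed first."""
    return sorted(users, key=risk_score, reverse=True)

users = [
    UserStats("alice", total_posts=100, removed_posts=1, reports_received=2),
    UserStats("bob", total_posts=20, removed_posts=8, reports_received=10),
]
print([user.user_id for user in review_order(users)])  # ['bob', 'alice']
```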

URL Matching: It helps prevent harmful links by checking user-submitted URLs against a database of known threats like phishing sites or malware. For example, a messaging app can automatically block links to known phishing sites to protect users from scams.
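A basic version of this check compares the hostname of each submitted link, and its parent domains, against a blocklist. The entries in `BLOCKED_HOSTS` are placeholder domains invented for this sketch.

```python
from urllib.parse import urlsplit

# Placeholder blocklist; a real one would come from a threat-intelligence feed.
BLOCKED_HOSTS = {"phishing.example", "malware.example"}

def normalize_host(url: str) -> str:
    """Extract the lowercased hostname from a URL (empty string if there is none)."""
    host = urlsplit(url).hostname or ""
    return host.rstrip(".")

def is_blocked(url: str) -> bool:
    """Return True if the hostname or any parent domain is on the blocklist."""
    parts = normalize_host(url).split(".")
    # For "login.phishing.example" this checks the full host, then "phishing.example", then "example".
    return any(".".join(parts[i:]) in BLOCKED_HOSTS for i in range(len(parts)))

print(is_blocked("https://login.phishing.example/reset?id=1"))  # True: subdomain of a blocked host
print(is_blocked("https://notphishing.example/"))               # False: different domain
print(is_blocked("https://example.org/"))                       # False
```

The sketch expects URLs that include a scheme such as `https://`; without one, `urlsplit` finds no hostname and the link is treated as not blocked, so a real system would normalize input first.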

Facebook has faced content moderation challenges, notably during the Christchurch attacks and the Cambridge Analytica scandal. In response, it introduced hash-matching to block reuploads of harmful videos by comparing them against a flagged content database. Facebook now uses AI tools like DeepText, FastText, XLM-R, and RIO to detect 90% of flagged content, with the rest reviewed by human moderators.

In one quarter of 2022, YouTube removed 5.6 million videos, mostly flagged by AI. Algorithms identified nearly 98% of videos removed for violent extremism. YouTube’s Content ID system uses hash-matching for copyright enforcement, while ML classifiers detect hate speech, harassment, and inappropriate language.

AI-powered content moderation offers quick detection and removal of harmful content, enhancing platform safety and user experience. It handles large data volumes in real time, operates 24/7, and can spot patterns that human reviewers might miss or judge inconsistently. However, AI’s effectiveness depends on the quality of its training data, and skewed data can introduce biases of its own. Therefore, human-AI collaboration is essential to ensure fair and accurate content moderation.

Human-AI Collaboration: Navigating Bias, Context, and Accuracy

While AI is a powerful tool for content moderation, it’s not flawless. Human judgment is crucial for understanding context and mitigating bias. AI can reflect biases from its training data, potentially targeting certain groups unfairly. It also struggles with language nuances and cultural references, leading to false positives or negatives. Human moderators excel in interpreting these subtleties and ensuring fair content review.

To address these challenges, many platforms have adopted a hybrid approach, combining the strengths of AI with the discernment of human moderators. In this collaborative model, AI handles the initial filtering of content, flagging potential violations for human review. Human moderators then step in to assess the context and make the final decision. This partnership helps to balance the speed and scalability of AI with the nuanced understanding that only humans can provide.
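The hand-off between AI and human moderators can be sketched as a small triage step. The `ai_score` field stands in for the output of some classifier, and the `REVIEW_THRESHOLD` of 0.5 is an arbitrary value chosen for this example.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Item:
    item_id: str
    text: str
    ai_score: float  # hypothetical model output: estimated probability of a violation

REVIEW_THRESHOLD = 0.5  # illustrative cutoff, to be tuned per platform

review_queue = deque()  # items waiting for a human moderator

def triage(item: Item) -> str:
    """AI step: publish low-risk items, hold the rest for human review."""
    if item.ai_score >= REVIEW_THRESHOLD:
        review_queue.append(item)
        return "held_for_review"
    return "published"

def resolve(item: Item, moderator_says_violation: bool) -> str:
    """Human step: the moderator makes the final decision on a held item."""
    return "removed" if moderator_says_violation else "published"

print(triage(Item("1", "Nice video!", ai_score=0.02)))           # published
print(triage(Item("2", "That joke could offend", ai_score=0.7)))  # held_for_review

held = review_queue.popleft()
print(resolve(held, moderator_says_violation=False))  # published after human review
```

Lowering the threshold sends more content to moderators and reduces missed violations, at the cost of a longer review queue.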

Ethical Considerations in AI-Driven Content Moderation

As AI continues to play an increasingly prominent role in content moderation, it raises ethical questions that must be carefully considered. One of the most pressing concerns is the issue of privacy. AI-driven moderation involves analyzing vast amounts of user-generated content, which can include personal information and private communications. The potential for misuse or overreach in the name of moderation is a significant concern, particularly when it comes to balancing the need for safety with the right to privacy.

Another ethical consideration is the potential for censorship. AI systems, particularly those with limited transparency, can sometimes make decisions that lack accountability. This can lead to the suppression of legitimate speech, stifling free expression. Ensuring that AI-driven content moderation is transparent and accountable is crucial to maintaining the trust of users and safeguarding democratic values.

AI has undoubtedly transformed content moderation, evolving from basic rule-based systems to advanced machine learning models. While it plays a key role in maintaining online safety, AI alone isn’t perfect. Collaboration with human moderators is essential to address issues like bias, context, and accuracy. Moving forward, it’s crucial to focus on the ethical aspects of AI moderation, ensuring it remains fair, transparent, and respectful of user rights.
