Implementing computer vision is a journey through complex challenges—from capturing the unpredictability of human expressions to handling diverse lighting and environmental conditions. To advance these systems, it’s crucial to develop extensive, nuanced datasets that reflect a wide range of real-world scenarios. By strategically choosing models tailored to specific needs and rigorously testing for reliability, we can build computer vision systems that overcome these obstacles and set new standards in accuracy and adaptability, paving the way for powerful, real-world applications.

When Hardware Falls Short: The Hidden Costs to Computer Vision

Effective computer vision relies on robust hardware, because AI-driven tasks need significant processing power for real-time, data-heavy operations. Cloud platforms provide scalable resources, but the network round trip adds latency that often makes them fall short when results are needed instantly. Hardware weaknesses such as low-quality cameras and underpowered processors can create critical blind spots if they are not addressed during setup. Closing these gaps calls for high-definition cameras that support the Real-Time Streaming Protocol (RTSP) for live video streaming, offer high resolution and higher frame rates, and perform well in low-light conditions. Cameras such as the Raspberry Pi Camera Module, the Intel RealSense Depth Camera, and Allied Vision models bring advanced sensors and real-time capabilities, making them strong choices for reliable computer vision systems.
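Whether cloud processing can keep up comes down to a per-frame time budget. The minimal sketch below, assuming illustrative latency figures rather than measurements from any particular camera or cloud service, checks whether capture, network, and inference time fit inside one frame interval, and estimates the raw bandwidth of an uncompressed stream. The function names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame before the next one arrives."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def meets_budget(fps: float, inference_ms: float,
                 network_rtt_ms: float = 0.0, capture_ms: float = 0.0) -> bool:
    """True if capture + network round trip + inference fit in one frame interval.

    network_rtt_ms is 0 for on-device (edge) processing and the round-trip
    time to the server when frames are sent to a cloud endpoint.
    """
    return capture_ms + network_rtt_ms + inference_ms <= frame_budget_ms(fps)


def raw_bandwidth_mbps(width: int, height: int, fps: float,
                       bytes_per_pixel: int = 3) -> float:
    """Uncompressed video bandwidth in megabits per second (8-bit RGB by default)."""
    return width * height * bytes_per_pixel * 8 * fps / 1e6


if __name__ == "__main__":
    # Illustrative numbers only: 20 ms inference, 60 ms cloud round trip.
    print(f"Budget at 30 fps: {frame_budget_ms(30):.1f} ms")
    print("On-device fits:", meets_budget(30, inference_ms=20))
    print("Cloud fits:", meets_budget(30, inference_ms=20, network_rtt_ms=60))
    print(f"Raw 1080p30 stream: {raw_bandwidth_mbps(1920, 1080, 30):.0f} Mbit/s")
```

With these assumed figures, a 30 fps feed leaves about 33 ms per frame: 20 ms of on-device inference fits, while adding a 60 ms cloud round trip does not. The roughly 1.5 Gbit/s raw 1080p stream also explains why live feeds are sent compressed; RTSP itself controls the streaming session, while the compressed video is typically carried over RTP.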

Organizations should invest in capable processors, pairing CPUs (Central Processing Units) with GPUs (Graphics Processing Units) for hardware acceleration, to meet the computational needs of machine learning and deep learning in computer vision tasks. CPUs excel at complex scheduling and serial computations. GPUs, by contrast, are essential for their parallel processing capabilities: they apply the same operation to many pixels or images at once, which speeds up image processing and analysis on large datasets. GPUs such as the NVIDIA GeForce GTX and AMD Radeon HD series enable faster processing in real-time computer vision applications.
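GPUs pay off because most image operations are data-parallel: each output pixel depends only on a small neighborhood of the input, so rows (or individual pixels) can be computed independently. The sketch below illustrates that decomposition with a hypothetical 3x3 box blur in plain Python, dispatching rows to a thread pool. It shows the structure of the work, not GPU-level speed: CPython threads are limited by the global interpreter lock, whereas a GPU runs thousands of such independent computations at once.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Image = List[List[int]]  # grayscale image as rows of 0-255 intensities


def blur_row(img: Image, r: int) -> List[int]:
    """3x3 box blur for output row r; reads only the input, never other outputs."""
    h, w = len(img), len(img[0])
    out = []
    for c in range(w):
        total, count = 0, 0
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w:  # skip neighbors outside the border
                    total += img[rr][cc]
                    count += 1
        out.append(total // count)
    return out


def blur_serial(img: Image) -> Image:
    """CPU-style serial loop: one row after another."""
    return [blur_row(img, r) for r in range(len(img))]


def blur_parallel(img: Image, workers: int = 4) -> Image:
    """Same work, with independent rows dispatched to a pool of workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, so rows stay aligned.
        return list(pool.map(lambda r: blur_row(img, r), range(len(img))))
```

Because `blur_parallel` returns exactly what `blur_serial` does, splitting the work changes only how it is scheduled, which is the property GPU kernels rely on.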

To further increase processing speed, organizations can use hardware accelerators such as field-programmable gate arrays (FPGAs). These integrated circuits can be reconfigured after manufacturing to implement a custom hardware architecture, and they offer low power consumption and cost-effectiveness. FPGAs excel at real-time computer vision tasks such as object detection and image classification because they perform parallel processing efficiently. ASICs (Application-Specific Integrated Circuits), by contrast, are microchips custom-designed for one specific task, such as computer vision. Their purpose-built circuitry delivers high performance, power efficiency, and low latency, enabling real-time performance in time-sensitive applications such as autonomous vehicles and surveillance systems. Vision Processing Units (VPUs) are one example of ASICs.

Why Data Quality Matters: Avoiding Pitfalls in Computer Vision

Computer vision systems require large volumes of high-quality annotated training data to perform effectively. While the volume and variety of data are expanding rapidly, not all data records are of high quality.

The major dataset challenges in computer vision include inaccurate labels such as loose bounding boxes, mislabeled images, missing labels, and imbalanced data that leads to bias. Imbalanced datasets make it harder for the model to predict underrepresented classes accurately, noisy data with errors confuses the model, and overfitting causes it to perform poorly on new data. For example, a model trained to recognize apples and oranges might fixate on incidental details, such as a green spot on an apple or a bump on an orange, and end up mistaking a tomato for an apple.
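Several of these problems can be caught with simple automated checks before training. The sketch below assumes boxes in `(x_min, y_min, x_max, y_max)` pixel coordinates and a hypothetical second-pass "reviewed" box to compare each annotation against. It uses intersection over union (IoU) to flag loose bounding boxes, lists images with no labels, and measures class imbalance. The 0.8 IoU threshold is an illustrative choice, not a standard.

```python
from collections import Counter
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Annotations = Dict[str, List[Tuple[str, Box]]]  # image id -> [(class, box), ...]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def loose_boxes(pairs: List[Tuple[Box, Box]], threshold: float = 0.8) -> List[int]:
    """Indices of (annotated, reviewed) box pairs whose overlap falls below threshold."""
    return [i for i, (ann, ref) in enumerate(pairs) if iou(ann, ref) < threshold]


def images_without_labels(annotations: Annotations) -> List[str]:
    """Image ids with no annotations: either true negatives or missing labels."""
    return [img for img, objs in annotations.items() if not objs]


def imbalance_ratio(annotations: Annotations) -> float:
    """Count of the most frequent class divided by that of the least frequent one."""
    counts = Counter(cls for objs in annotations.values() for cls, _ in objs)
    return max(counts.values()) / min(counts.values())
```

An unlabeled image may legitimately contain no objects, so the results are candidates for human review rather than automatic fixes.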

These issues make it harder for algorithms to identify objects correctly in images and videos. Recent research led by MIT shed light on pervasive label errors in widely used machine learning (ML) test sets. Examining 10 major datasets spanning images, text, and audio, including ImageNet and Amazon's reviews dataset, the study found an average error rate of 3.4%. Notably, ImageNet, a cornerstone dataset for image recognition, exhibited a 6% error rate. Meticulous annotation work is therefore crucial for producing accurate labels tailored to the specific use cases and problem-solving objectives of computer vision projects.
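Label errors like these can be surfaced automatically by comparing each given label with a model's predicted probabilities. The sketch below is a simplified take on confident learning, the approach the MIT-led study used to find candidate errors before human review. It sets a per-class threshold from the model's average self-confidence, then flags examples where the model confidently predicts a different class. It assumes `probs` holds out-of-sample probabilities (for example, from cross-validation); the function name is hypothetical, and flagged examples are candidates to re-check, not confirmed errors.

```python
from typing import List, Sequence


def flag_label_issues(labels: Sequence[int],
                      probs: Sequence[Sequence[float]]) -> List[int]:
    """Return indices whose given label disagrees with a confident prediction.

    labels[i] is the annotated class of example i; probs[i][k] is the model's
    out-of-sample probability that example i belongs to class k.
    """
    n_classes = len(probs[0])
    # Per-class threshold: mean self-confidence among examples labeled with that class.
    thresholds = []
    for k in range(n_classes):
        self_conf = [p[k] for y, p in zip(labels, probs) if y == k]
        thresholds.append(sum(self_conf) / len(self_conf) if self_conf else float("inf"))

    flagged = []
    for i, (y, p) in enumerate(zip(labels, probs)):
        # Classes whose predicted probability clears that class's own threshold.
        confident = [k for k in range(n_classes) if p[k] >= thresholds[k]]
        if not confident:
            continue  # model is unsure about this example; leave it alone
        likely = max(confident, key=lambda k: p[k])
        if likely != y:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    labels = [0, 0, 0, 1, 1, 1]
    probs = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8], [0.1, 0.9], [0.3, 0.7]]
    print(flag_label_issues(labels, probs))
```

In this toy example, example 2 is labeled class 0 but receives 0.9 probability for class 1, so it is the one flagged for review.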

Another solution is to complement real-world data with synthetic datasets: artificially generated data that mimics real-world scenarios. Synthetic samples diversify the training data and reduce bias, and because they are generated in a controlled environment, their labels are known exactly, providing the high-quality annotations that model training depends on. Synthetic data also addresses imbalance by creating samples for underrepresented classes, and it fills gaps in real-world data by simulating challenging scenarios. For better accuracy, mixed datasets containing both real and synthetic samples are preferred, and future efforts may concentrate on improving program synthesis techniques to produce larger and more versatile synthetic datasets.
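The rebalancing idea can be sketched in a few lines. Assuming a hypothetical `generate(label)` callable that stands in for whatever produces synthetic images (a renderer, simulator, or generative model), the sketch below tops up every class to the size of the largest real class and tags each sample with its source, so the real-to-synthetic mix can be inspected or capped later. Matching the majority class is one simple policy, not the only one.

```python
import random
from collections import Counter
from typing import Callable, Dict, List, Tuple


def synthetic_quota(counts: Dict[str, int]) -> Dict[str, int]:
    """Synthetic samples needed per class to match the largest real class."""
    target = max(counts.values())
    return {label: target - n for label, n in counts.items()}


def build_mixed_dataset(real: List[Tuple[object, str]],
                        generate: Callable[[str], object],
                        seed: int = 0) -> List[Tuple[object, str, str]]:
    """Combine real samples with synthetic top-ups; each item is (sample, label, source)."""
    counts = Counter(label for _, label in real)
    mixed = [(x, label, "real") for x, label in real]
    for label, n_missing in synthetic_quota(counts).items():
        # generate(label) is a placeholder for a renderer, simulator, or generative model.
        mixed.extend((generate(label), label, "synthetic") for _ in range(n_missing))
    random.Random(seed).shuffle(mixed)  # interleave so synthetic samples are not clustered
    return mixed
```

Keeping the `"real"`/`"synthetic"` tag makes it easy to evaluate on real data only, which is a sensible check that synthetic samples are helping rather than teaching the model simulation artifacts.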

Improper Model Selection: A Barrier to Effective Computer Vision

Model selection in machine learning (ML) is the process of choosing the best model from a group of candidates to solve a specific problem, weighing factors such as model performance, training time, and complexity. Selection goes wrong when it overlooks hardware constraints, the deployment environment, shortcomings in data quality or volume, or the computing resources the model demands. Scaling the chosen model can also become prohibitively expensive, and concerns about the accuracy, performance, and sustainability of custom architectures further compound the challenges organizations face.

Rather than seeking perfection, the goal is to select the model that best fits the task, which requires evaluating several candidate models on a dataset to find the one that aligns with project needs. Two families of techniques support this decision. Probabilistic measures, such as the Akaike and Bayesian information criteria (AIC and BIC), score a model on how well it fits the training data while penalizing complexity, which discourages overfitting. Resampling techniques, such as k-fold cross-validation, estimate how a model will perform on new data by repeatedly splitting the dataset into training and test sets and averaging the resulting scores, giving a more reliable estimate across varied data.
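Both families can be illustrated on a toy problem. The sketch below substitutes a tiny regression for a vision model so it runs without any ML framework: it compares a constant baseline with a straight-line fit using AIC and BIC (in their least-squares form, counting only regression coefficients) and k-fold cross-validation. All names are illustrative; in a real project the candidates would be trained networks scored on a task metric such as accuracy. Lower is better for all three scores.

```python
import math
import random
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float]
Model = Callable[[float], float]


def fit_mean(data: Sequence[Point]) -> Model:
    """Baseline: always predict the mean target (1 parameter)."""
    m = sum(y for _, y in data) / len(data)
    return lambda x: m


def fit_line(data: Sequence[Point]) -> Model:
    """Ordinary least-squares line y = a + b*x (2 parameters)."""
    n = len(data)
    mx = sum(x for x, _ in data) / n
    my = sum(y for _, y in data) / n
    sxx = sum((x - mx) ** 2 for x, _ in data)
    sxy = sum((x - mx) * (y - my) for x, y in data)
    b = sxy / sxx if sxx else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def rss(model: Model, data: Sequence[Point]) -> float:
    """Residual sum of squares."""
    return sum((y - model(x)) ** 2 for x, y in data)


def aic(rss_value: float, n: int, k: int) -> float:
    """AIC for least squares with Gaussian errors (up to an additive constant)."""
    return n * math.log(rss_value / n) + 2 * k


def bic(rss_value: float, n: int, k: int) -> float:
    """BIC: like AIC, but the complexity penalty grows with log(n)."""
    return n * math.log(rss_value / n) + k * math.log(n)


def k_fold_mse(fit: Callable[[Sequence[Point]], Model],
               data: Sequence[Point], k: int = 5, seed: int = 0) -> float:
    """Average held-out mean squared error over k train/test splits."""
    idx = list(range(len(data)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    scores = []
    for fold in folds:
        held_out = set(fold)
        train = [data[i] for i in idx if i not in held_out]
        test = [data[i] for i in fold]
        scores.append(rss(fit(train), test) / len(test))
    return sum(scores) / len(scores)


if __name__ == "__main__":
    rng = random.Random(42)
    data = [(x / 10, 2.0 * (x / 10) + 1.0 + rng.gauss(0, 0.1)) for x in range(40)]
    n = len(data)
    for name, fit, n_params in [("mean", fit_mean, 1), ("line", fit_line, 2)]:
        r = rss(fit(data), data)
        print(f"{name}: AIC={aic(r, n, n_params):.1f} "
              f"BIC={bic(r, n, n_params):.1f} CV-MSE={k_fold_mse(fit, data):.4f}")
```

The line has one more parameter than the baseline, but its far better fit outweighs the complexity penalty, and cross-validation confirms the advantage holds on held-out folds.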

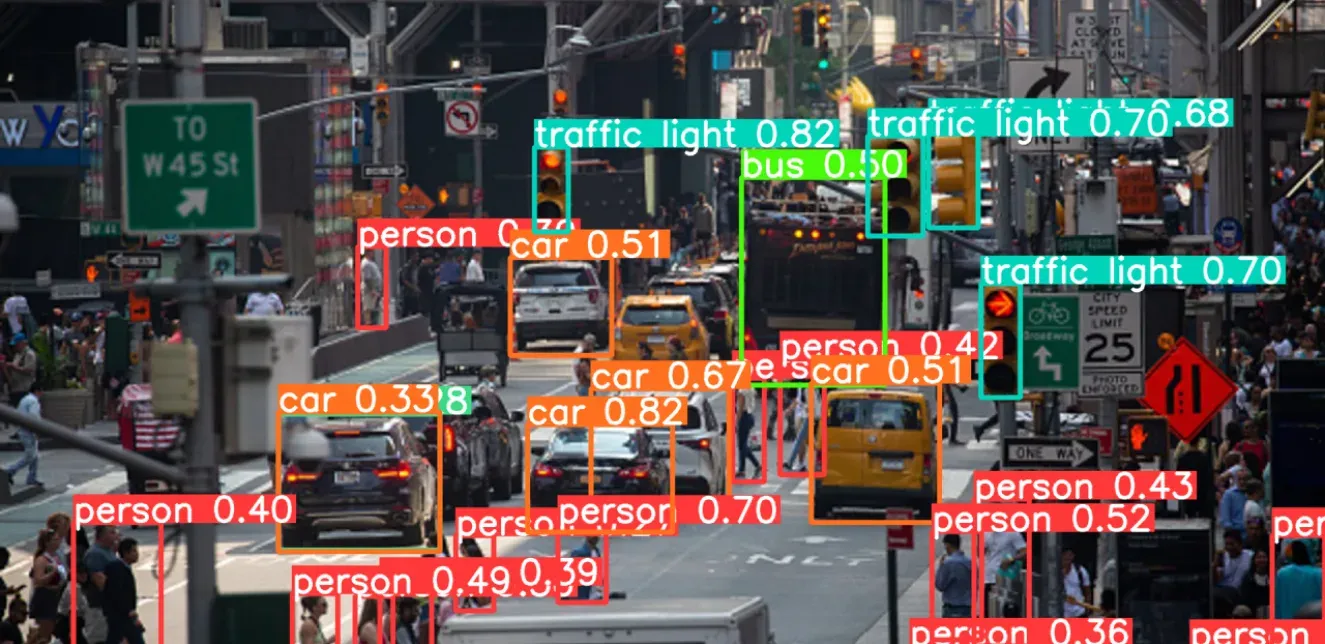

A study compared three computer vision tools, YOLOv2, Google Cloud Vision, and Clarifai, on analyzing visual brand-related user-generated content from Instagram. The results indicated that Google Cloud Vision excelled in object detection accuracy, while Clarifai provided more useful and varied subjective labels for interpreting how brands were portrayed. YOLOv2 proved less informative because of its limited set of output labels.

To work around hardware limitations, Edge AI moves machine learning tasks from the cloud to local devices, enabling on-device processing and keeping sensitive data local. The right computer vision model depends on deployment needs, for example DenseNet for accurate, cloud-based medical image analysis. To address data limitations, Generative Adversarial Networks (GANs) can expand datasets artificially, while pre-trained models such as ResNet can be fine-tuned with limited data. For scaling, lightweight architectures such as MobileNet or model compression techniques are viable options.
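Model compression can be as simple as storing weights at lower precision. The sketch below shows symmetric post-training int8 quantization of a single weight tensor, one common compression technique (the text does not prescribe a specific one). Each 32-bit float is replaced by an 8-bit integer plus one shared scale factor, cutting weight storage roughly fourfold at the cost of a rounding error of at most half a quantization step. The function names are hypothetical; frameworks such as PyTorch and TensorFlow Lite ship their own quantization tooling, which is the better choice in practice.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights for computation or error checks."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0, 0.333]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print("int8 values:", q)
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

A single scale per tensor keeps the sketch simple; per-channel scales usually preserve accuracy better when weight ranges vary widely across channels.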

While implementing computer vision presents challenges, it also offers immense opportunities for innovation and growth. With persistence and strategic approaches, businesses can overcome these hurdles, fully harnessing the power of computer vision by investing in advanced hardware, refining datasets, and selecting the right models for their specific needs.

With Random Walk's expertise in AI integration services, you can navigate these challenges and unlock the full potential of computer vision technology. Explore the AI services from Random Walk that can transform your business: visit our website for customized solutions, and contact us to schedule a one-on-one consultation to learn more about our products.