At Random Walk, we’re always curious about the ways humans and technology interact, especially when it comes to interpreting and visualizing information. Our latest challenge was both fascinating and revealing: Can AI tools outperform humans in creating a map based on a story?

We began with a passage from a book that provided a detailed description of a landscape, landmarks, and directions:

_At 7:35 A.M. Ishigami left his apartment as he did every weekday morning. Just before stepping out onto the street, he glanced at the mostly full bicycle lot, noting the absence of the green bicycle. Though it was already March, the wind was bitingly cold. He walked with his head down, burying his chin in his scarf. A short way to the south, about twenty yards, ran Shin-Ohashi Road. From that intersection, the road ran east into the Edogawa district, west towards Nihonbashi. Just before Nihonbashi, it crossed the Sumida River at the Shin-Ohashi Bridge. The quickest route from Ishigami’s apartment to his workplace was due south. It was only a quarter mile or so to Seicho Garden Park. He worked at the private high school just before the park. He was a teacher. He taught math. Ishigami walked south to the red light at the intersection, then he turned right, towards Shin-Ohashi Bridge._

Using this description, we were tasked with manually sketching a map. It was a test of our ability to translate words into a visual representation, relying only on our interpretation of the narrative. Then came the second part of the experiment: feeding the same description into four AI tools (ChatGPT, Copilot, Ideogram, and Mistral AI) and asking each to generate its own version of the map.

The Results: A Mix of Human and AI Strengths

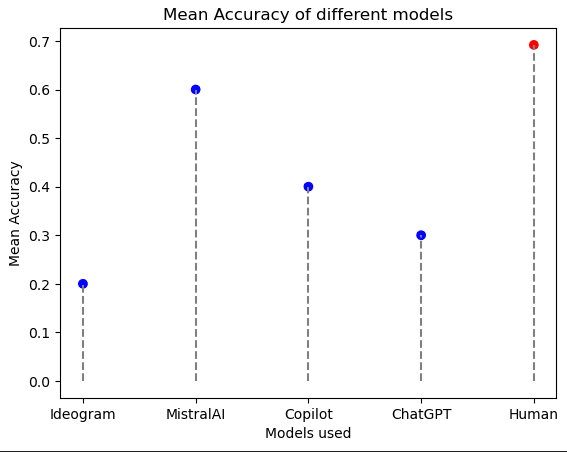

Here’s how the AI models and humans performed:

ChatGPT: 30% accuracy with 10 samples

Copilot: 20% accuracy with 10 samples

Mistral AI: 60% accuracy with 10 samples

Ideogram: 20% accuracy with 10 samples

Humans: 69.2% accuracy with 26 samples

Across all 26 samples, humans achieved the highest accuracy at 69.2%. Among the AI tools, Mistral AI came closest at 60%, while ChatGPT reached 30% and Copilot and Ideogram 20% each. Ideogram’s 3D maps were visually appealing but less accurate. Because the human group had 26 samples against 10 for each AI tool, we also adjusted the human sample size to make the comparison fairer.

To align the sample size with the AI models, we randomly selected 10 of the 26 human answers and repeated that draw 10,000 times. The mean accuracy across draws came to about 70%, in line with the full-sample figure of 69.2%, while individual draws ranged from 30% to 100%. That spread shows how much an accuracy figure based on only 10 samples can vary from one draw to the next, and that a small human sample can score very high or quite low depending on which answers it contains.
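The resampling step can be sketched in a few lines of Python. This is a minimal illustration, not the script used for the experiment. It assumes that 69.2% of 26 corresponds to 18 correct and 8 incorrect human maps, that each draw of 10 is made without replacement, and that a fixed seed is used for repeatability. The names `HUMAN_RESULTS` and `resample_accuracies` are hypothetical.

```python
import random

# Assumption: 69.2% accuracy over 26 human maps means 18 correct (1) and 8 incorrect (0).
HUMAN_RESULTS = [1] * 18 + [0] * 8
SUBSAMPLE_SIZE = 10   # matches the 10 samples collected per AI tool
N_TRIALS = 10_000     # number of repeated random draws


def resample_accuracies(results, k, n_trials, seed=0):
    """Draw k results without replacement n_trials times; return the accuracy of each draw."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return [sum(rng.sample(results, k)) / k for _ in range(n_trials)]


accuracies = resample_accuracies(HUMAN_RESULTS, SUBSAMPLE_SIZE, N_TRIALS)
mean_accuracy = sum(accuracies) / len(accuracies)

print(f"mean accuracy: {mean_accuracy:.1%}")
print(f"range: {min(accuracies):.0%} to {max(accuracies):.0%}")
```

Under these assumptions, the long-run mean of the draws is 18/26, or about 69.2%, and no draw can fall below 20%, because only 8 of the 26 answers are incorrect. The exact range observed depends on the random draws.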

What We Learned: Combining Human and AI Capabilities

The results were fascinating. The AI tools produced maps with widely varying accuracy, from 20% to 60%, and with different styles of interpretation, showing that each tool processes the same description in its own way.

Despite the advancements in AI, humans were still the most accurate group in this experiment. This outcome underscores an important point: while AI can produce logical interpretations of a text, the human touch remains crucial. The nuances and contextual understanding that humans bring to the table can complement AI’s strengths, making the combination of both even more powerful.

So, what does this mean for businesses and individuals seeking to resolve complex challenges? It’s a reminder that while AI is an invaluable tool, human insight and intuition are equally important. By leveraging the strengths of both, we can achieve better outcomes, whether it’s in mapping a story or tackling more intricate problems.

A Path Forward: Enhancing Problem-Solving with Human-AI Collaboration

As we continue to explore the intersection of human intuition and AI’s computational power, challenges like these provide valuable insights. They demonstrate how AI can complement our skills, offering unique solutions and perspectives that might not come as easily to us. It’s an exciting glimpse into the future of collaborative problem-solving.

As we reflect on this experiment, it’s clear that while AI brings speed and unique perspectives, human intuition and experience still play a vital role. The real potential lies in harnessing the strengths of both, allowing AI to enhance our capabilities rather than replace them. By working together, we can navigate complex challenges with a blend of creativity and accuracy that neither could achieve alone. This partnership between human ingenuity and AI technology is not just the future of problem-solving; it’s the key to unlocking new levels of innovation and success.