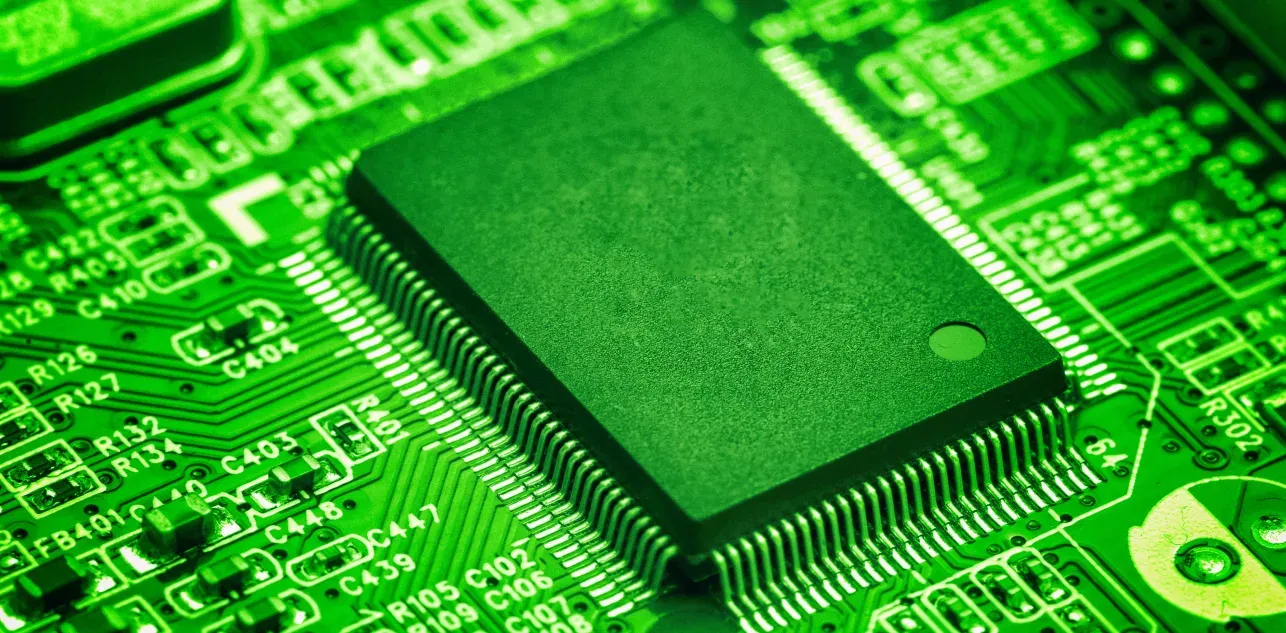

The Raspberry Pi, launched in 2012, has entered the vocabulary of doers and makers around the world. It was designed as an affordable, accessible microcomputer for students and hobbyists. Over the years, it has evolved from a modest credit card-sized computer into a versatile platform that powers innovations in fields ranging from home economics to IoT, AI, robotics, and industrial automation. Raspberry Pis are single-board computers available in many variants, with models priced from about $4 to $70. Here, we’ll trace the Raspberry Pi’s evolution and explore some of the innovations it has spurred, with examples and code snippets.

The Early Days: A Learning Platform

Founder Eben Upton has shared that Raspberry Pi began as a foundation aimed at encouraging young students to pursue computer science as a major. The first generation of the Raspberry Pi was built to teach programming and computing to a new generation. It included basic GPIO (General-Purpose Input/Output) pins, which allowed users to interact with external devices. Even today, GPIO programming remains at the core of many Raspberry Pi projects. Thanks to the Raspberry Pi, computer hardware is no longer a black box, as a new generation tinkers with single-board computers. Even the founder of Raspberry Pi is surprised by what people do with their RPis: “People do not do with their Raspberry Pi what I expected them to do. I expected people to write computer games because that’s what I did when I was a child. In practice, people build robots. The interesting thing: kids find moving atoms around in the world much more interesting than moving pixels around on the screen.”

Here's a simple Python code snippet to blink an LED using the GPIO pins, showcasing the foundation of physical computing with the Raspberry Pi:

```python
import time

import RPi.GPIO as GPIO

LED_PIN = 18  # BCM pin number the LED is wired to

# Set up GPIO using Broadcom (BCM) pin numbering
GPIO.setmode(GPIO.BCM)
GPIO.setup(LED_PIN, GPIO.OUT)  # Pin 18 as output

try:
    # Blink the LED five times: one second on, one second off
    for _ in range(5):
        GPIO.output(LED_PIN, GPIO.HIGH)
        time.sleep(1)
        GPIO.output(LED_PIN, GPIO.LOW)
        time.sleep(1)
finally:
    # Clean up GPIO, even if the loop is interrupted
    GPIO.cleanup()
```

This kind of basic GPIO control of hardware paved the way for a massive community of makers, educators, and hobbyists who leveraged the Pi's capabilities for various projects.

Raspberry Pi as a Platform for IoT

With each iteration, the Raspberry Pi’s capabilities expanded. The addition of Wi-Fi and Bluetooth in the Raspberry Pi 3 marked a turning point, making it suitable for IoT projects. Its compact size, affordability, and flexibility make it well suited to collecting, processing, and transmitting data from sensors and devices in smart home systems, industrial automation, and environmental monitoring setups. Used as an IoT hub, the Pi lets developers build data-driven applications that communicate over protocols like MQTT and HTTP, enabling real-time control and remote monitoring across devices.
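As a rough illustration of a Pi sending sensor data over HTTP, here is a minimal Python sketch that packs one reading into JSON and posts it to a server. The function names, field names, device name, and endpoint URL are all assumptions made for this example and are not taken from any particular project; only the standard library is used.

```python
import json
import time
import urllib.request


def build_payload(device_id, sensor, value, timestamp=None):
    """Serialize one sensor reading as a JSON string."""
    reading = {
        "deviceId": device_id,
        "sensor": sensor,
        "value": value,
        # Unix time in seconds; defaults to the current time when omitted.
        "timestamp": int(time.time()) if timestamp is None else int(timestamp),
    }
    return json.dumps(reading)


def post_reading(url, payload, timeout=5.0):
    """POST a JSON payload to the given URL and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Hypothetical device name and sensor field; replace with your own.
    payload = build_payload("pi-livingroom", "temperature_c", 21.5)
    print(payload)
    # Hypothetical endpoint; uncomment once you have a server to receive it.
    # post_reading("http://example.local:5000/api/readings", payload)
```

Keeping the payload builder separate from the transport means the same JSON string could instead be published over MQTT with a client library, without touching the sensor-reading side.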

At Random Walk, we pioneered an initiative to monitor sound pollution across the globe. You can view the live network at Noise Monitoring, and read more about the setup in our blog post on real-time decibel mapping, Monitoring Sound Pollution: An Innovative Approach with Real-Time Decibel Mapping. Below is the code to set up your own noise sensor and become a part of this global network of noise monitoring devices. As its includes indicate, the sketch is written for an ESP8266-based board; it posts each reading as JSON over HTTP.

```cpp
#include <ESP8266WiFi.h>
#include <ESP8266HTTPClient.h>
#include <ArduinoJson.h>
#include <TimeLib.h>
#include <WiFiUdp.h>
#include <time.h>

const char* WIFI_SSID = "";      // enter your Wi-Fi SSID (network name) within the double quotes
const char* WIFI_PASSWORD = "";  // enter your Wi-Fi password within the double quotes

WiFiClient wifiClient;
HTTPClient http;

const int sampleWindow = 50;            // sampling window in milliseconds
const float referenceVoltage = 0.0048;  // reference voltage for the decibel calculation
const int delayTime = 1000;             // pause between readings in milliseconds

const double latitude = 0.0;        // placeholder: enter the latitude
const double longitude = 0.0;       // placeholder: enter the longitude
const String device_id = "XXXXXX";  // replace XXXXXX with your device id

// Forward declarations
void connectToWiFi();
float measureDecibels();
void sendDataToServer(String data);
String getCurrentDateTimeIST();  // combined date and time function

void setup() {
  Serial.begin(115200);
  connectToWiFi();

  // Fetch UTC from NTP and wait until the clock has been set
  configTime(0, 0, "pool.ntp.org", "time.nist.gov");
  while (time(nullptr) < 8 * 3600 * 2) {
    Serial.print(".");
    delay(500);
  }
  Serial.println("\nTime synchronized");
}

void loop() {
  if (WiFi.status() != WL_CONNECTED) {
    connectToWiFi();
  }

  float decibels = measureDecibels();
  String currentDateTimeIST = getCurrentDateTimeIST();  // Get combined date and time

  DynamicJsonDocument doc(256);
  doc["deviceId"] = device_id;
  doc["decibelReading"] = decibels;
  doc["timestamp"] = currentDateTimeIST;  // Send the combined timestamp
  doc["latitude"] = latitude;
  doc["longitude"] = longitude;

  String output;
  serializeJson(doc, output);
  sendDataToServer(output);

  delay(delayTime);
}

void connectToWiFi() {
  Serial.print("Connecting to Wi-Fi");
  WiFi.begin(WIFI_SSID, WIFI_PASSWORD);
  while (WiFi.status() != WL_CONNECTED) {
    delay(500);
    Serial.print(".");
  }
  Serial.println("\nConnected to Wi-Fi");
}

// The original sketch declares this function but does not show its body.
// ASSUMED implementation: NTP time (UTC) shifted by +5:30 for IST and
// formatted as "YYYY-MM-DD HH:MM:SS". Adjust the format to whatever
// your server expects.
String getCurrentDateTimeIST() {
  time_t now = time(nullptr) + 19800;  // 5 h 30 min = 19800 s
  struct tm timeInfo;
  gmtime_r(&now, &timeInfo);
  char buffer[20];
  strftime(buffer, sizeof(buffer), "%Y-%m-%d %H:%M:%S", &timeInfo);
  return String(buffer);
}

float measureDecibels() {
  unsigned long startMillis = millis();
  int signalMax = 0;
  int signalMin = 1023;

  // Track the minimum and maximum ADC readings over the sample window.
  // Comparing elapsed time keeps the loop correct when millis() wraps around.
  while (millis() - startMillis < (unsigned long)sampleWindow) {
    int sensorValue = analogRead(A0);
    if (sensorValue > signalMax) {
      signalMax = sensorValue;
    }
    if (sensorValue < signalMin) {
      signalMin = sensorValue;
    }
  }

  float peakToPeak = signalMax - signalMin;
  float voltage = peakToPeak * (3.3 / 1024.0);
  float rmsVoltage = voltage / sqrt(2);

  // Report 0 dB for near-silent readings instead of taking log10 of ~0
  float decibels = 0;
  if (rmsVoltage >= referenceVoltage * 0.01) {
    decibels = 20 * log10(rmsVoltage / referenceVoltage);
  }

  Serial.println("Decibels: " + String(decibels));
  return decibels;
}

void sendDataToServer(String data) {
  Serial.println("Attempting to send data: " + data);

  // The address below is the private-network endpoint from the original
  // sketch; point it at your own server. Note that a plain WiFiClient
  // cannot negotiate TLS: for an https:// endpoint use WiFiClientSecure,
  // or use an http:// URL if the server speaks plain HTTP.
  http.begin(wifiClient, "https://172.16.0.95:5000/api/v1/datalog");
  http.addHeader("Content-Type", "application/json");

  int httpResponseCode = http.POST(data);
  Serial.println("HTTP Response code: " + String(httpResponseCode));

  if (httpResponseCode > 0) {
    String response = http.getString();
    Serial.println("Server Response: " + response);
  } else {
    Serial.println("Error on sending POST: " + http.errorToString(httpResponseCode));
  }

  http.end();
  Serial.println("HTTP connection closed");
}
```

AI and Edge Computing with Raspberry Pi

The Raspberry Pi 4, with up to 8GB of RAM and a more powerful quad-core processor, opened the door to AI and machine learning (ML) applications. The addition of USB 3.0 allows faster data transfer, which suits edge computing applications where real-time data processing is crucial. Together, these upgrades reduce latency and support real-time decision-making, both essential for deploying AI models at the edge.

The Random Walk team has pushed this further by running an LLM agent, Jarvis, on a Raspberry Pi 4. Learn how we made this small device power mighty AI in our experiment, Tiny Pi, Mighty AI.

Using pre-trained models with frameworks like TensorFlow Lite, developers can now run image recognition, natural language processing, and predictive analytics on the Pi 4. This enables low-cost, scalable solutions for smart cities, industrial IoT, and remote monitoring, all without relying on cloud-based resources.

Due to an ongoing global chip shortage, the Raspberry Pi Foundation has faced challenges in keeping up with demand for its popular, affordable microcomputers. In an interview with The Verge, CEO Eben Upton shared how Raspberry Pi has navigated the crisis, addressing limited production capacity, increased lead times, and price adjustments. Despite these setbacks, Upton highlighted the sustained enthusiasm for the platform among DIY enthusiasts, educators, and developers, underscoring Raspberry Pi’s commitment to accessibility and the potential for future advancements once supply chains stabilize.
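As a rough illustration of the on-device inference with TensorFlow Lite mentioned above, the Python sketch below loads a pre-trained classification model and returns its top predictions. It assumes the `tflite_runtime` package and NumPy are installed on the Pi, and that the input image has already been resized and typed to match the model. The function names, model file, and label list are hypothetical placeholders, not part of any project described here.

```python
def top_k(scores, labels, k=3):
    """Return the k highest-scoring (label, score) pairs, best first."""
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    return [(label, float(score)) for label, score in ranked[:k]]


def classify(model_path, image, labels, k=3):
    """Run one inference with a TensorFlow Lite classification model.

    `image` is assumed to be a NumPy array whose shape and dtype already
    match the model's input tensor, including the batch dimension.
    """
    # Imported here so top_k() stays usable without the runtime installed.
    from tflite_runtime.interpreter import Interpreter

    interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]

    # Copy the image into the input tensor, run the model, read the scores.
    interpreter.set_tensor(input_info["index"], image)
    interpreter.invoke()
    scores = interpreter.get_tensor(output_info["index"])[0]
    return top_k(scores, labels, k)


if __name__ == "__main__":
    # The ranking helper can be tried without a model file:
    print(top_k([0.1, 0.7, 0.2], ["cat", "dog", "bird"], k=2))
    # With a real model (hypothetical file name and inputs):
    # classify("model.tflite", image, labels)
```

Everything here runs locally on the Pi, so no reading or image has to leave the device for a prediction to be made.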

The Raspberry Pi’s evolution from a simple educational tool to a powerful IoT and AI platform underscores its adaptability and relevance. Its journey aligns with technological advances, fostering innovations in countless fields. Whether you’re a student, developer, or industry professional, the Raspberry Pi provides an accessible entry point into the world of edge computing and innovation.