Text-to-speech (TTS) technology has evolved significantly in the past few years, enabling one to convert simple text to spoken words with remarkable accuracy and naturalness. From simple robotic voices to sophisticated, human-like speech synthesis, models offer specialized capabilities applicable to different use cases. In this blog, we will explore how different TTS models generate speech from text as well as compare their capabilities, models explored include MARS-5, Parler-TTS, Tortoise-TTS, MetaVoice-1B, Coqui TTS among others.

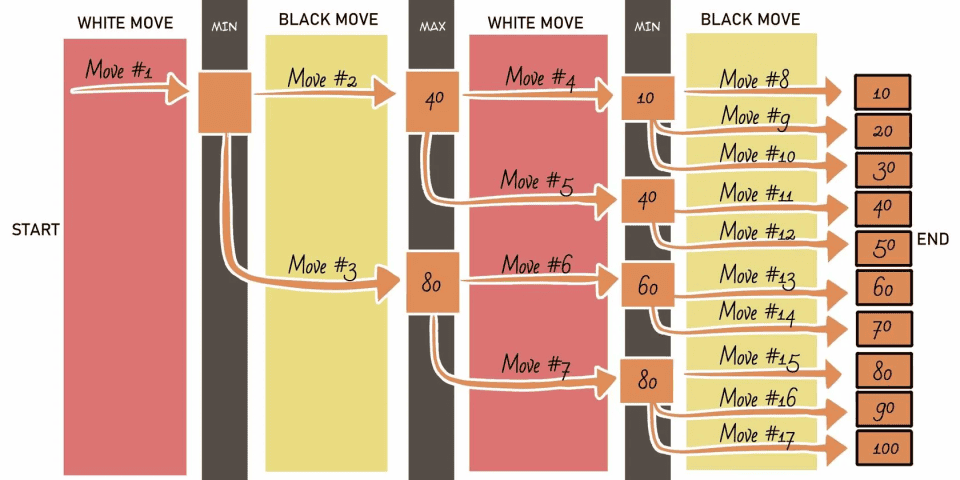

The TTS process generally involves several key steps discussed later in detail: input text and reference audio, text processing, voice synthesis and then the final audio is outputted. Some models enhance this process by supporting few-shot or zero-shot learning, where a new voice can be generated based on minimal reference audio. Let's delve into how some of the leading TTS models perform these tasks.

MARS-5: Few-Shot Voice Cloning

MARS5 is primarily a few-shot model by CAMB-AI for voice cloning and text-to-speech (TTS) tasks. MARS5 can perform high-quality voice cloning with as little as 5-12 seconds of reference audio. This makes it a few-shot model, as it doesn't require vast amounts of training data from a specific voice to clone it. MARS5 uses an innovative two-stage architecture combining Auto-Regressive (AR) and Non-Auto-Regressive (NAR) models, with a Diffusion Denoising Probabilistic Model (DDPM) for fine-tuning. This allows it to generate high-quality speech with a balance between speed and accuracy. It supports both fast, shallow cloning for quick results and deeper, higher-quality cloning that requires a transcript of the reference audio for optimal speech generation.

Process:

Input Text: You provide the text that you want to be converted into speech. This text is the message or sentence that will be voiced.

Reference Audio: You upload a sample of audio (reference audio) that serves as a guide for the speaking style, tone, and voice characteristics you want the output to mimic.

Text Processing: The model processes the input text, breaking it down into phonetic or linguistic units. This step prepares the text to be synthesized into speech.

Audio Embedding Extraction: From the reference audio, the model extracts key features, like pitch, rhythm, intonation, and voice timbre. These are used to shape how the synthesized voice should sound.

Text-to-Speech Generation: Using both the processed text and the reference audio features, the model generates the new speech. It combines the content from the text with the style and voice features from the reference audio.

Model Output: The output is a synthesized speech audio file that reflects the input text spoken in a voice similar to the reference audio.

Training (Behind the Scenes): The model is trained using a large dataset of paired text and audio samples. During training, it learns to map text to speech while also capturing the nuances of different voices, styles, and accents. The model learns to reproduce various voice styles when given reference audio.

A few examples of text to speech from the MARS-5 model are shown below:

Parler-TTS: Lightweight and Customizable

Parler TTS is a lightweight, open-source text-to-speech model with a focus on efficiency and simplicity. It can generate speech that closely mimics a specific speaker's voice, capturing key elements like pitch, speaking style, and gender. As an open-source model, Parler TTS is highly customizable. Users have access to datasets, training codes, and model weights, allowing them to modify and fine-tune the model to their needs.

Tortoise-TTS: Ultra-realistic Human Voices

Tortoise TTS is a highly advanced text-to-speech model, known for producing ultra-realistic speech. It is also one of the leading models for generating extremely natural-sounding speech. It focuses on capturing subtle aspects of human speech, such as emotions, intonation, pauses, and pronunciation, making it ideal for creating human-like voices in TTS applications. Tortoise TTS is capable of cloning voices from small audio samples (few-shot learning). It can generate highly accurate reproductions of a speaker’s voice with minimal reference material. Tortoise TTS is computationally demanding. Its high-quality outputs come at the cost of slower processing speeds compared to other lightweight models.

Metavoice-1B: Multilingual

MetaVoice-1B is a powerful and open-source few-shot text-to-speech (TTS) model designed for voice cloning and high-quality speech synthesis. It operates with 1.2 billion parameters and was trained on over 100,000 hours of speech data. MetaVoice supports zero-shot voice cloning, particularly for American and British voices, requiring only a 30-second audio reference. For other languages or accents, it can be fine-tuned with as little as 1 minute of training data. One of its primary strengths is the ability to generate emotionally expressive speech, capturing subtle shifts in tone and rhythm. MetaVoice can be fine-tuned for different languages and dialects, enabling versatile multilingual applications. The model uses a hybrid architecture that combines GPT-based token prediction with multi-band diffusion to generate high-quality speech from EnCodec tokens, cleaned up with post-processing.

The process of generating speech from text from MetaVoice-1B includes the following steps:

Input Text & Reference Voice: You provide text for the model to say and upload a short reference audio clip that contains the voice you want to mimic.

Text & Voice Feature Extraction: The model processes the text to understand its structure and extracts unique voice characteristics (like pitch and accent) from the reference audio.

Voice Synthesis: The model combines the text and the extracted voice features to generate speech that sounds like the reference voice, but it says the new text.

Generate Audio Output: The model outputs an audio file with the input text spoken in the cloned voice of the reference audio.

Training Behind the Scenes: MetaVoice1b is trained on massive datasets of text-audio pairs, learning to map text to speech while copying voice patterns from examples.

The results of using Metavoice-1B on the sentences can be seen here:

Coqui TTS: High-Quality Multilingual Synthesis

Coqui TTS is an advanced text-to-speech (TTS) technology designed for high-quality, natural-sounding speech synthesis. Coqui TTS is built on machine learning models to convert text into spoken words, focusing on delivering lifelike and versatile voice outputs. Coqui TTS is known for its realistic voice synthesis, making it suitable for applications ranging from virtual assistants to audiobook narration. It supports multiple languages and accents. Coqui TTS requires substantial computational resources, particularly for running high-quality models.

Style-TTS can be used to mimic emotional tones, intonations and accents. We used Style-TTS to generate voice in style of Daniel Radcliffe and the results can be found here:

Other models explored include XTTS and OpenVoice.

XTTS: Zero-Shot Voice Synthesis

XTTS is an open-source multilingual voice synthesis model, part of the Coqui TTS Library. XTTS supports 17 languages, including widely spoken ones like English, Spanish, and Mandarin, as well as additional languages like Hungarian and Korean. The model is designed to perform zero-shot voice synthesis, allowing it to generate speech in a new language without needing additional training data for that language. The model is built on a sophisticated architecture, leveraging VQ-VAE (Vector Quantized Variational Autoencoder) technology for effective audio signal processing. This is particularly advantageous for creating voices that sound natural in multiple languages without extensive data requirements. The latest version, XTTS-v2, boasts enhancements in prosody and overall audio quality. This leads to more natural-sounding speech. XTTS was trained on a comprehensive dataset comprising over 27,000 hours of speech data from various sources, including public datasets like Common Voice and proprietary datasets.

OpenVoice TTS: Cross-Lingual Voice Cloning

OpenVoice is an advanced text-to-speech (TTS) system developed by MyShell and MIT. It excels at accurately replicating the tone color of a reference speaker, allowing it to generate speech that sounds natural and authentic. One of the standout features is Zero-shot Cross-lingual voice cloning which is to clone voices across languages without needing the reference voice in the target language. The v2 has significant improvements in audio quality through updated training strategies, ensuring clearer and more natural-sounding outputs. OpenVoice V2 supports multiple languages natively, including English, Spanish, French and Chinese.

There are a plethora of models to choose from depending on the use case and application a user has in mind. The journey of evolution of TTS models has been remarkable, with models now capable of producing highly realistic and natural, human-like sounding speech from mere snippets of text and reference audio. Depending on limitations in training data and latency, from few-shot voice cloning with MARS-5 and MetaVoice-1B to ultra- realistic outputs from Tortoise-TTS to the cross-lingual capabilities of OpenVoice, each model offers unique capabilities suited to different applications - from virtual assistants and personas to multilingual services. As the demand for more natural and expressive speech synthesis grows, these models are pushing the boundaries of what TTS can achieve, offering personalization, efficiency, and multilingual support like never before.