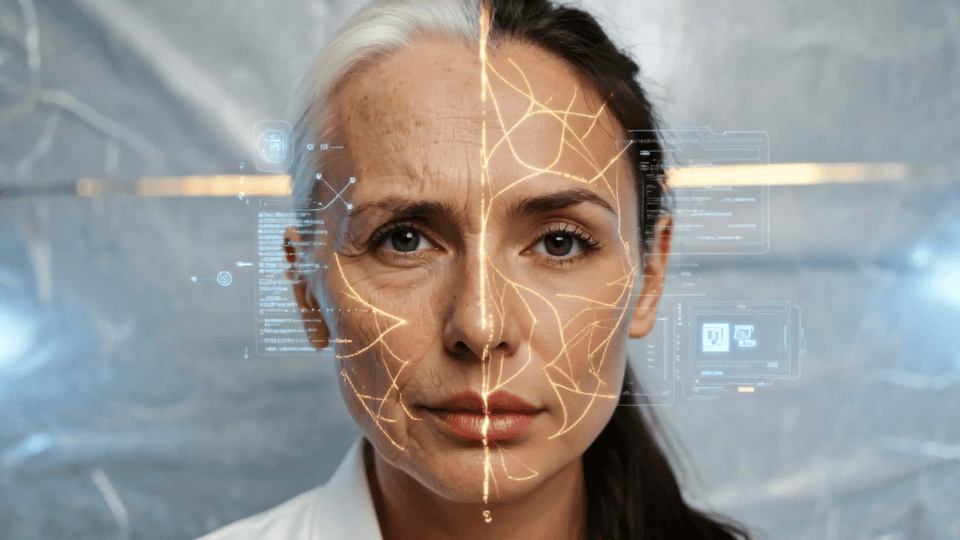

AI has become ubiquitous, shaping everything from product recommendations to medical diagnoses. Yet despite its pervasive influence, AI often functions as an enigmatic black box whose inner workings are hidden from view. This lack of transparency poses a significant challenge, particularly when it comes to understanding and mitigating AI bias.

The Challenge of the AI Black Box

At the heart of this challenge lies the complexity of many modern AI models, especially those based on deep learning. Inspired by the human brain, these models learn from vast amounts of data, identifying patterns and making predictions without explicit programming. While this approach has yielded remarkable results, it has also created a labyrinthine structure that is difficult to navigate.

Consider a loan approval algorithm. It might analyze thousands of data points, ranging from credit history to social media activity, to determine loan eligibility. When the algorithm denies a loan application, the reasons for this decision often remain opaque. Was it the applicant’s zip code, a specific keyword in their social media posts, or a combination of factors? This lack of transparency hinders our ability to identify and rectify potential biases that might influence the outcome.

Why AI Explainability Matters

Bias based on race, gender, age, or location has long been a risk when training AI models. Model performance can also degrade when production data differs from the training data, which makes continuous monitoring and management of models crucial. In criminal justice, risk-assessment algorithms might disproportionately target certain demographics. In healthcare, biased models could misdiagnose patients or recommend inappropriate treatments. The job market is not immune either: AI-powered recruitment tools can potentially exclude qualified candidates.
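Monitoring for the mismatch between training and production data can start with a simple per-feature drift statistic. The sketch below computes the Population Stability Index (PSI), one commonly used drift measure rather than a method the article prescribes, for a single numeric feature. The function name `psi`, the ten equal-width bins over the combined range, and the 1e-6 floor for empty bins are illustrative assumptions; many teams bin on training-data quantiles instead. The 0.1 and 0.25 alert levels often quoted for PSI are rules of thumb, not a standard.

```python
import math
import random

def psi(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a new sample."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so the maximum value falls into the last bin
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # Floor empty bins so the log term below stays finite
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

# Illustrative data: a "training" sample, a fresh sample from the same
# distribution, and a "production" sample whose mean has shifted
rng = random.Random(42)
train = [rng.gauss(0.0, 1.0) for _ in range(5000)]
same = [rng.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [rng.gauss(0.5, 1.0) for _ in range(5000)]

psi_same = psi(train, same)
psi_shifted = psi(train, shifted)
print(f"PSI, same distribution: {psi_same:.3f}")
print(f"PSI, shifted mean:      {psi_shifted:.3f}")
```

Running a check like this on each input feature at a regular interval gives an early signal that a model may need re-evaluation, before degraded accuracy shows up in outcomes.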

To mitigate the risks associated with AI bias, researchers and engineers are developing techniques to make these systems more transparent. This field, known as Explainable AI (XAI), aims to shed light on how AI systems reach their decisions. It encompasses the processes and methods that enable human users to understand and trust the outcomes generated by machine learning (ML) algorithms. In doing so, XAI fosters end-user trust, model auditability, and productive use of AI, while mitigating compliance, legal, security, and reputational risks.

Addressing AI explainability is crucial, and companies need comprehensive strategies to capture AI's full value. Research by McKinsey shows that companies attributing at least 20% of EBIT (earnings before interest and taxes) to AI are more likely to follow best practices for explainability. Additionally, organizations that build digital trust through explainable AI are more likely to see annual revenue and EBIT growth rates of 10% or more.

Techniques for Enhancing AI Transparency

XAI employs specific techniques to trace and explain each decision made during the ML process.

Prediction Accuracy: This involves running simulations and comparing the XAI output with the results in the training dataset to determine how accurate the predictions are.

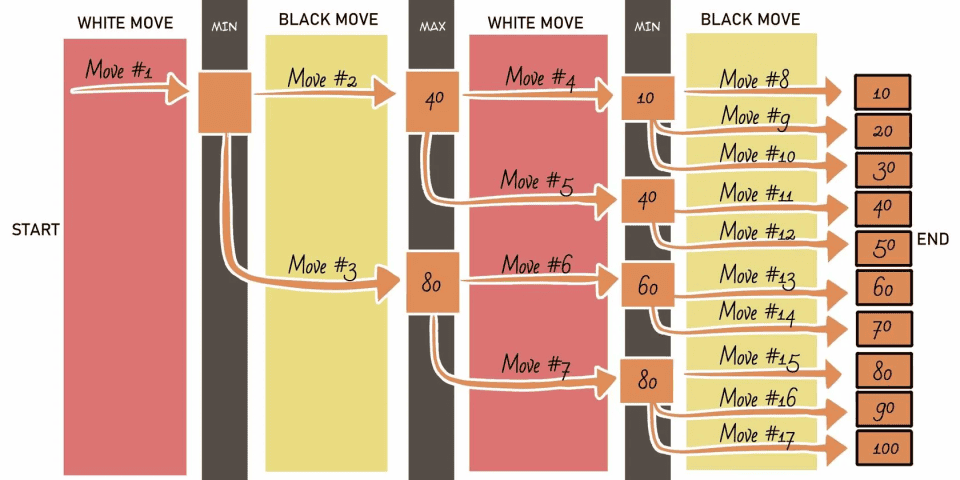

Local Interpretable Model-Agnostic Explanations (LIME) is a popular technique for explaining the predictions of ML classifiers. It works with any type of ML model and focuses on explaining a small, local region of the model's decision function. LIME generates synthetic data points around a specific prediction, weights them by their proximity to the point of interest, and fits a simple, interpretable model that approximates the complex model's behavior in that local area. This reveals which features influence the prediction for that specific instance, offering insight into the model's decision-making process.
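The three LIME steps can be sketched from scratch in a few lines of NumPy. This is a simplified illustration, not the `lime` package: the `black_box` model and the feature names are hypothetical, neighbours are sampled with Gaussian noise of an assumed scale, proximity uses an exponential kernel with an assumed width, and the surrogate is a plain weighted least-squares fit (the library adds feature standardization, feature selection, and a regularized regressor). The returned weighted R² indicates how faithfully the linear surrogate mimics the model near the instance.

```python
import numpy as np

def black_box(X):
    """Hypothetical opaque model: a nonlinear score squashed to a probability."""
    score = 2.0 * X[:, 0] - 1.5 * X[:, 1] ** 2 + 0.3 * X[:, 0] * X[:, 2]
    return 1.0 / (1.0 + np.exp(-score))

def lime_explain(predict_fn, x, num_samples=5000, noise_scale=0.5,
                 kernel_width=0.75, seed=0):
    rng = np.random.default_rng(seed)
    # 1. Generate synthetic neighbours by perturbing x with Gaussian noise
    Z = x + rng.normal(scale=noise_scale, size=(num_samples, x.size))
    y = predict_fn(Z)
    # 2. Weight each neighbour by its proximity to x (exponential kernel)
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 3. Fit an interpretable linear surrogate y ~ b0 + b . (z - x)
    #    by weighted least squares (rows scaled by the sqrt of the weights)
    A = np.hstack([np.ones((num_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    # Fidelity: weighted R^2 of the surrogate in the local neighbourhood
    resid = y - A @ coef
    y_mean = np.average(y, weights=w)
    r2 = 1.0 - np.sum(w * resid ** 2) / np.sum(w * (y - y_mean) ** 2)
    return coef[1:], coef[0], r2

x = np.array([0.5, 1.0, 0.0])
weights, intercept, r2 = lime_explain(black_box, x)
for name, wt in zip(["income", "debt_ratio", "account_age"], weights):
    print(f"{name:>12}: {wt:+.3f}")
print(f"local fidelity (weighted R^2): {r2:.2f}")
```

The sign and size of each weight describe the model only in the neighbourhood of `x`; explaining a different applicant means rerunning the procedure around that applicant's data point.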

Traceability: This is achieved by limiting how decisions can be made and setting a narrower scope for ML rules and features.

One example is DeepLIFT (Deep Learning Important Features), a technique for understanding how neural networks make predictions. It traces the contribution of each neuron to the final prediction by comparing the neuron's activation with its reference activation, that is, its activation when the network is given a chosen reference input. This helps identify which input features influenced the prediction. DeepLIFT can also distinguish between positive and negative contributions and computes these scores efficiently in a single backward pass through the network.
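To make the reference comparison concrete, here is a minimal NumPy sketch of DeepLIFT's Rescale rule for a tiny, hypothetical network with one ReLU hidden layer and random weights. It is an illustration under simplifying assumptions, not the reference implementation: it handles only dense layers and ReLU, uses an all-zeros reference input as one simple choice, and omits the paper's RevealCancel rule. The backward pass chains per-layer multipliers, and the resulting contributions sum to the difference between the output on the input and the output on the reference (DeepLIFT's summation-to-delta property).

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def forward(x, W1, b1, w2, b2):
    """Dense -> ReLU -> dense (scalar output). Also returns hidden activations."""
    z = W1 @ x + b1
    h = relu(z)
    return z, h, float(w2 @ h + b2)

def deeplift_rescale(x, x_ref, W1, b1, w2, b2, eps=1e-9):
    z, h, y = forward(x, W1, b1, w2, b2)
    z0, h0, y0 = forward(x_ref, W1, b1, w2, b2)
    dz, dh = z - z0, h - h0  # differences from the reference activations
    # Rescale rule: ReLU multiplier = delta-output / delta-input,
    # falling back to the ordinary gradient when delta-input is ~0
    grad = (z > 0).astype(float)
    ok = np.abs(dz) > eps
    m_relu = np.where(ok, dh / np.where(ok, dz, 1.0), grad)
    # Single backward pass: chain multipliers from the output to the inputs
    m_input = W1.T @ (w2 * m_relu)
    contributions = m_input * (x - x_ref)
    return contributions, y - y0

# Hypothetical toy network with random weights
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
w2, b2 = rng.normal(size=4), 0.1
x = np.array([1.0, -2.0, 0.5])
x_ref = np.zeros(3)  # reference (baseline) input

contribs, delta = deeplift_rescale(x, x_ref, W1, b1, w2, b2)
print("per-feature contributions:", np.round(contribs, 3))
print("sum of contributions:", round(float(contribs.sum()), 6))
print("output - reference output:", round(delta, 6))
```

Positive entries in `contribs` pushed the output above its reference value and negative entries pulled it below, which is the positive/negative distinction described above.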

Decision Understanding: This human-centric approach addresses the need for trust in AI. Educating teams on how AI systems reach their decisions builds trust and enables efficient collaboration with those systems. Organizations should ensure that training data accurately reflects the real world, regularly assess their models for bias, and mitigate any issues they find. Incorporating XAI techniques to make AI systems more transparent, and establishing clear ethical principles and regulations for AI development and deployment, helps make these systems more balanced.
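One simple way to start a regular bias assessment is to compare approval (selection) rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest rate to the highest, flagging ratios below 0.8. That threshold is the "four-fifths" heuristic from US employment-selection guidelines; it is only one of many fairness criteria, not a complete bias audit, and the group labels and decision counts here are hypothetical.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, approved: bool) pairs."""
    total = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        total[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / total[g] for g in total}

def disparate_impact(decisions, threshold=0.8):
    """Return per-group rates, the min/max rate ratio, and whether it is below threshold."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    # If no group is ever approved, there is no rate disparity to measure
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return rates, ratio, ratio < threshold

# Hypothetical loan decisions for two applicant groups
decisions = ([("group_a", True)] * 60 + [("group_a", False)] * 40
             + [("group_b", True)] * 40 + [("group_b", False)] * 60)

rates, ratio, flagged = disparate_impact(decisions)
print(rates)                        # {'group_a': 0.6, 'group_b': 0.4}
print(f"ratio = {ratio:.2f}")       # ratio = 0.67
print("flag for review:", flagged)  # flag for review: True
```

A flag like this does not prove the model is biased; it marks where explanation techniques such as LIME or DeepLIFT can be used to investigate which features drive the gap.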

However, creating truly explainable AI is not without its challenges. Complex models, by their nature, are difficult to simplify without compromising accuracy. Moreover, understanding the root causes of AI bias often requires a deep understanding of both the technology and the social context in which it operates.

Therefore, fostering a culture of transparency and accountability within the AI community is imperative. Companies should be transparent about the algorithms they use and the data they collect. They should also be willing to share their findings and collaborate with others to develop best practices.

What Are the Benefits of Explainable AI?

Enhance AI Trust and Deployment: Building confidence in AI systems is essential, and this can be achieved by ensuring model interpretability and transparency. By streamlining the evaluation process, organizations can facilitate quicker deployment of AI models into production.

Accelerate AI Results: Continuously monitoring and managing AI models helps optimize business outcomes. Regular evaluation and fine-tuning of models lead to improved performance and efficiency.

Reduce Governance Risks and Costs: Maintaining transparency is crucial for meeting regulatory and compliance standards. By mitigating the risks of manual errors and unintended AI biases, organizations can reduce the need for costly manual inspections and oversight.

The journey towards explainable and unbiased AI is complex and ongoing. It requires sustained investment in research, development, and ethical considerations. By prioritizing transparency, accountability, and human-centric design, we can harness the power of AI while mitigating its risks. The future of AI depends on our ability to create systems that are not only intelligent but also trustworthy and beneficial to society as a whole.