What is AI Bias?

Human bias refers to the systematic errors in judgment or decision-making that result from subjective factors such as cultural influences, personal experiences, and societal stereotypes. It often leads individuals to make unfair or inaccurate judgments based on factors such as race, gender, or other irrelevant characteristics. Similarly, AI systems, designed by humans, can inherit and perpetuate biases due to flaws in the data used to train them or the algorithms themselves.

AI bias can manifest in various forms, such as amplifying existing societal biases present in the training data or making inaccurate predictions for certain groups based on biased patterns learned during the training process. Unaddressed bias obstructs individuals’ economic and societal engagement while limiting AI’s efficiency. Distorted outcomes from biased systems hinder businesses and breed mistrust among marginalized groups. Addressing AI bias is crucial to ensure that AI systems make fair and equitable decisions across diverse populations.

What are the Sources of AI Bias?

Societal Bias

Societal bias in AI arises when biases present in society are reflected in the data used to train AI models, leading to biased outcomes or decisions. These biases can stem from societal factors such as historical injustices, cultural stereotypes, and human prejudices, including racial, economic, ethnic, and gender biases. Stereotypical bias involves making generalizations about entire groups of people based on limited information or preconceived notions. Historical biases are the remnants of past injustices and discriminatory practices, such as racial injustice. Implicit associations are unconscious mental connections we make between concepts, often formed through repeated exposure and cultural conditioning, such as unconsciously associating a certain facial expression with dishonesty. Prejudice refers to prejudgments or preconceived opinions, often negative, towards specific groups of people. When these biases go unnoticed and influence the data collected or the algorithms built, the AI system starts to mimic them, leading to discriminatory outcomes.

Data Bias

The primary source of AI bias often lies in the underlying data. Models may be trained on data reflecting historical or societal injustices, or data that has been impacted by human decision-making.

The development of AI systems is heavily influenced by the perspectives and knowledge of their creators, as well as the values and priorities of organizational leaders. The lack of diversity in the tech industry, particularly the underrepresentation of women and racial minorities, significantly affects how AI systems are built. A study found significant gender and racial disparities in the AI field: about 80% of AI professors are male, and women make up only 15% of AI research staff at Facebook and 10% at Google. Black employees are also underrepresented, comprising only 2.5% of the workforce at Google and 4% at Facebook and Microsoft. This absence of diversity perpetuates narrow viewpoints and biases in data generation, collection, labeling, and algorithm design, hindering inclusive AI development.

Data bias occurs when datasets fail to adequately represent all demographic groups, particularly racial, ethnic, and gender minorities, due to historical and ongoing inequalities. Limited or absent data can exacerbate bias, as it may not encompass the entire population or target audience. Bias can also stem from skewed data gathering methods and biased selection processes, resulting in datasets that inaccurately represent the intended population. Additionally, labeling bias introduces inaccuracies due to subjective interpretations, human errors, or systemic biases in the labeling process, potentially leading to misclassified instances and biased AI outcomes.
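One simple way to check whether a dataset under-represents a group is to compare each group's share of the records with its share of the intended population. The sketch below is a minimal illustration under stated assumptions: the group labels, record counts, and reference shares are hypothetical, and `representation_gaps` is a helper written for this example rather than a function from any library.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return (observed share - reference share) for each reference group.

    A negative gap means the group is under-represented in the dataset
    relative to the reference population.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical data: 100 records labelled with a demographic group,
# and assumed shares of each group in the target population.
dataset_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}

gaps = representation_gaps(dataset_groups, reference)
for group, gap in gaps.items():
    print(f"group {group}: gap {gap:+.2f}")
```

A check like this only flags missing representation. It says nothing about labeling bias or about whether the records that are present were gathered in a skewed way.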

For instance, a training dataset might be inaccurate or might not be a random sample of the target population, leading to sample inadequacy or selection bias. Similarly, if sample elements are drawn from the wrong population, developers may exhibit out-group homogeneity bias by wrongly assuming those elements share the target population’s attributes. Such biases contributed to Amazon’s decision to discontinue an AI-based recruitment algorithm that treated female applicants unfairly, a problem attributed to the limited data on female applicants in the training dataset.

Algorithmic Bias

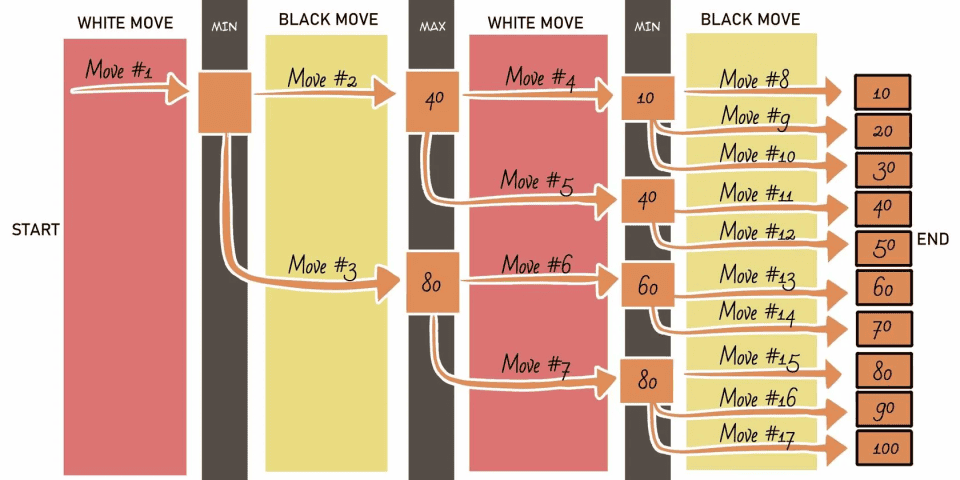

Algorithms filter information from data to present users with content that matches their preferences. Algorithmic bias refers to situations where algorithmic outcomes arbitrarily privilege one group over others. It can arise from inaccurate modeling that fails to capture the relationships between input features and output variables, leading to unfair treatment of, or adverse impacts on, certain demographics.

The design and deployment of machine learning (ML) applications significantly affect algorithmic bias. Methods may mistake correlation (how variables change together) for causation (one variable directly influencing another), leading to inaccurate conclusions. Similarly, an algorithm’s findings may be overgeneralized and fail to apply to specific contexts. Confirmation bias, the human tendency to favor information that confirms existing beliefs, can also influence algorithm design and validation.

Running ML algorithms solely to minimize error rates may overlook disparities across demographics. Variables chosen for algorithms may penalize certain identities or communities due to historical biases carried by the development team. Auditing algorithms is crucial for detecting and addressing biases introduced during development. An audit that tracks too few metrics can miss problems: evaluation that focuses solely on overall accuracy, without comparing false positives across demographics or testing under varied conditions, can let bias go undetected.
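The gap between overall accuracy and per-group error can be made concrete with a small audit. The following sketch assumes binary labels (1 = positive, 0 = negative) and a single group attribute per record. The data and the helper names `false_positive_rates` and `accuracy` are hypothetical, chosen only to illustrate the idea.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def false_positive_rates(y_true, y_pred, groups):
    """False positive rate per group: FP / (FP + TN).

    Only records whose true label is negative (0) enter the calculation.
    Groups with no true negatives are omitted from the result.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] += 1
            if pred == 1:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items()}


# Hypothetical predictions for two groups of four records each.
y_true = [0, 0, 1, 1, 0, 0, 1, 1]
y_pred = [0, 0, 1, 1, 1, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

overall = accuracy(y_true, y_pred)
rates = false_positive_rates(y_true, y_pred, groups)
print(f"overall accuracy: {overall:.3f}")
for group, rate in rates.items():
    print(f"group {group}: false positive rate {rate:.2f}")
```

In this toy data the overall accuracy looks high, yet every false positive falls on group B. An accuracy-only evaluation would hide exactly this kind of disparity.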

Australia’s Online Compliance Intervention, known as ‘robo-debt’, was operated by Centrelink and automated the checking of welfare recipients’ reported income against Australian Taxation Office records. Targeting vulnerable populations such as retirees, unemployed individuals, carers, people with disabilities, Indigenous Australians, migrants, and refugees, the system generated a surge of 20,000 debt notices per week. It faced criticism for mailing debt notices directly to vulnerable recipients without cross-checking addresses and for its flawed estimation of hours worked, which led to biases in data, method, and socio-cultural aspects. The algorithm’s bias stemmed from relying solely on past income records, disregarding disruptions in work hours, and estimating average rather than actual hours worked. The result unfairly targeted marginalized individuals and intensified the challenges for those lacking the resources to respond to vague debt notices.

What are the Strategies to Mitigate AI Bias?

Organizations can foster diversity within their teams to ensure a wide range of perspectives in researching, organizing, and developing data and models, thereby promoting inclusivity of diverse ideologies, information, and principles in their AI systems. By prioritizing ethics and responsible AI use, they can cultivate a culture that values ethical considerations. Establishing corporate governance for responsible AI usage further reinforces this commitment.

To enhance the quality of AI systems, organizations should utilize comprehensive training sets and evaluate existing datasets rigorously. They should employ proper training methods and variables in algorithm development to ensure accuracy and fairness. Leaders should also prioritize investment in bias research, making data available for research purposes while upholding privacy standards.

A study introduces the concept of ‘datasheets for datasets’, which aim to provide comprehensive information about a dataset’s motivation, composition, collection process, recommended uses, and potential biases. By documenting these key aspects, datasheets enhance transparency, accountability, and reproducibility in ML research. Another method, ‘model cards’, provides insights into model performance metrics, intended use cases, evaluation data, and potential biases that may arise during model deployment. This helps stakeholders understand the capabilities and limitations of ML models, enabling informed decision-making and promoting responsible AI development. Factsheets extend these documentation efforts to include information about the training data, evaluation data, and model performance metrics. By providing a comprehensive overview of the entire ML process, they offer insights into the data used to train models, the metrics used to evaluate performance, and any considerations regarding fairness, accountability, and transparency.
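To show what such documentation might look like in practice, the sketch below models a very small model card as a data structure that can be serialized and stored alongside a model. The field names are assumptions drawn from the items listed above (performance metrics, intended use, evaluation data, potential biases). They are not an official model card schema, and all values are placeholders.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """A simplified, hypothetical model card; not an official schema."""

    model_name: str
    intended_use: str
    evaluation_data: str
    metrics: dict = field(default_factory=dict)
    potential_biases: list = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the card so it can be published with the model."""
        return json.dumps(asdict(self), indent=2)


# Placeholder values for illustration only.
card = ModelCard(
    model_name="example-classifier",
    intended_use="Screening support; not for fully automated decisions.",
    evaluation_data="Held-out sample, reported separately per demographic group.",
    metrics={"accuracy": 0.875, "false_positive_rate_group_B": 0.5},
    potential_biases=["Group B is under-represented in the training data."],
)

print(card.to_json())
```

Keeping the card as structured data rather than free text makes it easier to require certain fields, such as per-group metrics, before a model is released.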

Addressing AI bias is crucial for creating equitable and just technological systems. By fostering diversity within teams, prioritizing ethical considerations, and investing in bias research, organizations can mitigate the harmful impacts of biased algorithms. Ultimately, embracing responsible AI practices is essential for building a future where technology serves all members of society fairly and inclusively.