To complex industry-specific and business-function-specific use cases.

We offer industry-specific use cases and demos to address your unique challenges, showing how data visualization tools can optimize data for your specific needs.

Schedule a Call

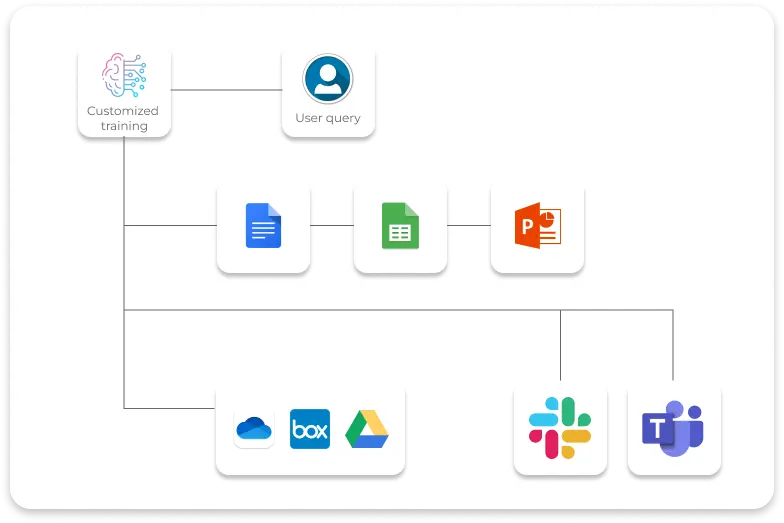

A secure chat-based platform allows employees to perform tasks, search for data, run queries, get alerts, and generate content across numerous enterprise applications. It integrates data visualization tools using generative AI, enabling users to gain deeper insights and leverage AI-driven analytics for performance evaluation.

Implement customized LLMs and select models for an efficient, cost-effective system.

Efficiently analyze vast data sets to uncover hidden insights for smarter decision-making.

Implement data security to safeguard sensitive information and prevent breaches.

Transform isolated data into semantic knowledge graphs and vector databases.

Improve organization-wide search functionality to access relevant information.

Enhance employee experience with a UX for follow-ups, summaries, and data.

Organizational Use Cases

Human Resources

Combining Vector Database and Knowledge Graphs

Vector databases allow for high-speed similarity searches across large datasets. They are particularly useful for tasks like semantic search, recommendation systems, and anomaly detection, enhancing business intelligence and reporting through data visualization using generative AI.

Knowledge graphs excel at revealing relationships and dependencies, which can be crucial for understanding context or the relational dynamics in data, such as hierarchical structures or associative properties.
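As a rough illustration of how the two approaches can complement each other, the sketch below ranks documents by cosine similarity and then attaches each hit's relationships from a small graph. Everything in it is an assumption made for illustration: the document names, the three-dimensional embeddings, the relation labels, and the helper functions are hypothetical and do not describe any particular product's implementation.

```python
import math

# Hypothetical toy embeddings (made-up 3-d vectors, not real model output).
EMBEDDINGS = {
    "leave_policy": [0.9, 0.1, 0.0],
    "payroll_faq": [0.1, 0.9, 0.1],
    "onboarding_guide": [0.7, 0.2, 0.3],
}

# Hypothetical knowledge graph as an adjacency list of (relation, target) pairs.
GRAPH = {
    "leave_policy": [("owned_by", "HR"), ("references", "payroll_faq")],
    "payroll_faq": [("owned_by", "Finance")],
    "onboarding_guide": [("owned_by", "HR")],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar_docs(query_vec, k=2):
    """Vector-database step: the k documents closest to the query vector."""
    ranked = sorted(
        EMBEDDINGS,
        key=lambda doc: cosine(query_vec, EMBEDDINGS[doc]),
        reverse=True,
    )
    return ranked[:k]


def search_with_context(query_vec, k=2):
    """Knowledge-graph step: attach each hit's relationships to the result."""
    return [
        {"doc": doc, "relations": GRAPH.get(doc, [])}
        for doc in similar_docs(query_vec, k)
    ]


if __name__ == "__main__":
    for hit in search_with_context([1.0, 0.0, 0.0]):
        print(hit["doc"], hit["relations"])
```

In a real deployment the brute-force ranking would typically be replaced by an approximate nearest-neighbor index and the dictionary by a graph store; the point here is only the division of labor between similarity and relationships.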

Enrich LLMs' Understanding with Semantics

Retrieval-augmented generation (RAG) deepens an LLM's understanding by adding a semantic layer. When the LLM queries through that layer, the process becomes more streamlined, and context and query stay aligned for accuracy.

This approach helps LLMs access information from databases and handle the intricacies of language. Because queries and context are aligned semantically, LLM-generated responses become more accurate. Our data visualization tool using Gen AI helps make your enterprise data actionable and insightful.
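A minimal sketch of the retrieve-then-prompt pattern behind RAG, under stated assumptions: the passages are made-up sample text, the keyword-overlap scoring is a deliberately simple stand-in for semantic (embedding-based) retrieval, and the function names are hypothetical. No model is called; the sketch stops at the prompt that would be sent to an LLM.

```python
import re

# Made-up sample passages standing in for enterprise content.
PASSAGES = [
    "Employees accrue 1.5 days of paid leave per month.",
    "Expense reports are due by the fifth of each month.",
    "The sales dashboard refreshes every night.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, passages, k=2):
    """Return up to k passages sharing the most tokens with the question.

    Keyword overlap is a stand-in for semantic retrieval; passages with
    no overlap at all are dropped.
    """
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(p)), p) for p in passages]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(question, passages, k=2):
    """Assemble a prompt that places the retrieved context before the question."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("How many days of paid leave do employees accrue?", PASSAGES))
```

Keeping retrieval separate from prompt assembly means the scoring function can later be swapped for a vector or knowledge-graph lookup without touching the prompt format.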

Train LLMs with Enterprise Data

RAG complements the training of LLMs with enterprise data by providing structured frameworks, leveraging data visualization tools using generative AI to enable smarter decisions. RAG uses knowledge graphs and semantic retrieval to improve LLMs' understanding of enterprise-specific context, enabling them to generate more accurate and relevant responses based on the specific nuances of the enterprise domain.

This integration between RAG and enterprise data training ensures that LLMs know what's important to the organization and can provide helpful insights accordingly.

The Model Context Protocol (MCP) is an open, vendor-neutral standard for connecting AI models to external data and tools. In effect, MCP acts like a web API built for LLMs. Developers can define Resources (data endpoints) and Tools (callable functions) that the AI can access during a conversation. For example, an MCP server might expose a database as a resource or a function to query that database as a tool.

In the ever-evolving landscape of AI development, Langflow emerges as a game changer. It is an open-source, Python-powered framework designed to simplify the creation of multi-agent and retrieval-augmented generation (RAG) applications.

I’ve worked with LangChain. I’ve played with LlamaIndex. They’re great—until they aren’t.

It’s New Year’s Eve, and John, a data analyst, is finishing up a fun party with his friends. He is tired and looking forward to unwinding. But as he checks his phone, a message from his manager pops up: “Is the dashboard ready for tomorrow’s sales meeting?” John’s heart sinks. The meeting is in less than 12 hours, and he’s barely started on the dashboard. Without thinking, he quickly types back, “Yes,” hoping he can pull it together somehow. The problem? He’s exhausted, and the thought of combing through a massive 1,000-row CSV file to create graphs in Excel or Tableau feels overwhelming. Just when he starts to panic, he remembers his secret weapon: Fortune Cookie, the AI assistant that can quickly turn data into insightful visualizations. Relieved, John knows he doesn’t have to break a sweat. Fortune Cookie has him covered, and the dashboard will be ready in no time.

Brain rot, the 2024 Word of the Year, perfectly encapsulates the overwhelming state of mental fatigue caused by endless information overload—a challenge faced by individuals and businesses alike in today’s fast-paced digital world. At its core, this term highlights the need for streamlined systems that simplify the way we interact with data and files.

2025-06-28

2025-06-14

2025-04-28

2025-01-16

2024-12-31

Experience the Power of Data with AI Fortune Cookie

Access your AI Potential in just 15 mins!