In recent years, computer vision has transformed the fields of Augmented Reality (AR) and Virtual Reality (VR), enabling new ways for users to interact with digital environments. The AR/VR market, fueled by computer vision advancements, is projected to reach $296.9 billion by 2024, underscoring the impact of these technologies. As computer vision continues to evolve, it will create even more immersive experiences, transforming everything from how we work and learn to how we shop and socialize in virtual spaces.

An example of computer vision in AR/VR is Random Walk’s WebXR-powered AI indoor navigation system, which transforms how people find their way through complex buildings such as malls, hotels, and offices. To address the shortcomings of traditional maps and signage, this AR experience overlays digital directions onto the user’s real-world view through the device’s camera. Users select a destination, and AR visual cues, such as arrows and information markers, guide them to it. The system uses the SIFT (Scale-Invariant Feature Transform) algorithm to detect and track distinctive features in the environment, keeping localization accurate as users move. Because it runs in a web browser, the solution offers a cost-effective, adaptable approach to real-world navigation challenges.
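The source does not describe how Random Walk’s system matches SIFT features, so the following is only a generic sketch of one common step: matching feature descriptors from the live camera frame against descriptors stored for a mapped scene, using Lowe’s ratio test to reject ambiguous matches. SIFT descriptors are 128-dimensional vectors; here random arrays stand in for real descriptors (in practice a library such as OpenCV, via `cv2.SIFT_create()`, would compute them). The function name `match_descriptors` and the 0.75 ratio are illustrative assumptions.

```python
import numpy as np

def match_descriptors(query, reference, ratio=0.75):
    """Match each query descriptor to its nearest reference descriptor,
    keeping the match only if it is clearly better than the runner-up
    (Lowe's ratio test). `reference` must contain at least two rows."""
    # Pairwise Euclidean distances, shape (len(query), len(reference)).
    dists = np.linalg.norm(query[:, None, :] - reference[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches

# Stand-in data: 50 "reference" descriptors from a previously mapped scene.
rng = np.random.default_rng(0)
reference = rng.random((50, 128))
# Three live-frame descriptors are noisy views of known features; the fourth
# sits exactly between two features and is therefore ambiguous.
query = np.vstack([
    reference[[3, 10, 20]] + rng.normal(0, 0.01, (3, 128)),
    (reference[0] + reference[1]) / 2,
])
print(match_descriptors(query, reference))  # [(0, 3), (1, 10), (2, 20)]
```

The ambiguous fourth descriptor is dropped because its two closest candidates are equally far away; keeping only distinctive matches is what makes localization robust in repetitive indoor spaces.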

Core Principles of Computer Vision in Immersive Environments

The foundation of computer vision in AR and VR is the ability to perceive, process, and interpret visual data. In AR, computer vision serves as the system’s 'eyes,' letting it understand and interact with the surrounding real-world environment; in VR, it helps systems create and navigate fully virtual environments. The following are some of the core principles that underpin this technology:

Image Recognition and Feature Extraction: AR and VR systems use computer vision techniques such as image recognition and feature extraction to interpret visual information. Image recognition identifies specific objects or patterns that can trigger interactions, while feature extraction detects distinctive visual features, such as corners, edges, and textures, from which the system builds an understanding of the environment.
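Corner detection is one concrete form of feature extraction. The sketch below computes the classic Harris corner response using only NumPy; it is a simplified illustration (a plain box window instead of Gaussian weighting, and no non-maximum suppression), and the helper names and the parameters `k=0.05` and window radius `r=1` are assumptions chosen for the example.

```python
import numpy as np

def window_sum(a, r):
    """Sum each pixel's (2r+1) x (2r+1) neighbourhood, with zero padding."""
    padded = np.pad(a, r)
    out = np.zeros_like(a)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += padded[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out

def harris_response(img, k=0.05, r=1):
    """Harris response: large and positive at corners, negative along edges,
    zero in flat regions."""
    iy, ix = np.gradient(img.astype(float))  # derivatives along rows (y), cols (x)
    sxx = window_sum(ix * ix, r)
    syy = window_sum(iy * iy, r)
    sxy = window_sum(ix * iy, r)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - k * trace ** 2

# A bright square on a dark background has four corners.
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
resp = harris_response(img)
row, col = np.unravel_index(np.argmax(resp), resp.shape)
print(int(row), int(col))  # 5 5 (top-left corner; the other three corners tie)
```

Edges score negative because intensity changes in only one direction, whereas a corner changes in two; that distinction is what makes corners reliable anchors for tracking.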

Optical Flow: Optical flow estimates motion by analyzing how pixels, and the objects they depict, appear to shift relative to the observer from one frame to the next. This principle is crucial for VR, as it helps virtual elements move smoothly and stay consistent with the user’s real-world position.

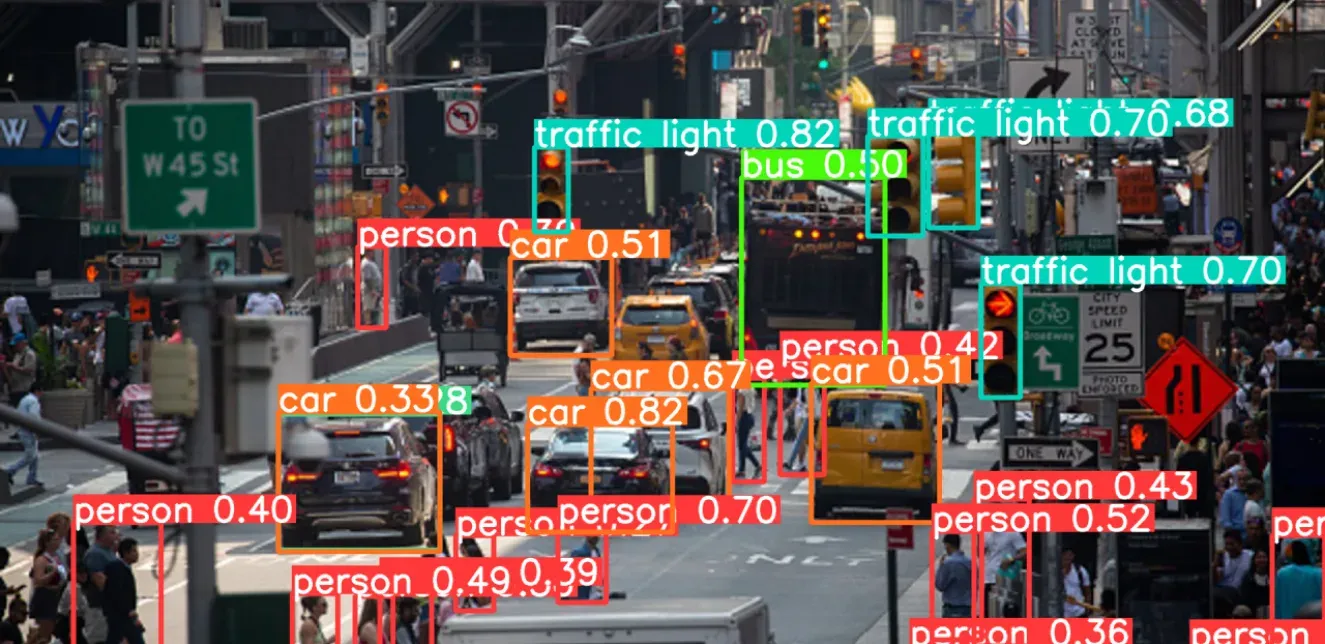
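To make optical flow concrete, here is a minimal sketch of the Lucas-Kanade method, a standard optical-flow technique (not necessarily what any particular headset uses). Assuming brightness stays constant and motion is small, every pixel contributes one linear equation `Ix*u + Iy*v + It = 0`, and a least-squares solve over a window recovers the motion `(u, v)` in pixels. The function names and the synthetic blob frames are illustrative assumptions; production systems track many small windows and use image pyramids to handle larger motions.

```python
import numpy as np

def lucas_kanade(prev, curr):
    """Estimate a single (u, v) motion vector, in pixels, for the whole window."""
    prev = prev.astype(float)
    curr = curr.astype(float)
    iy, ix = np.gradient(prev)   # spatial derivatives (rows = y, cols = x)
    it = curr - prev             # temporal derivative between the two frames
    # Brightness constancy: ix*u + iy*v + it = 0 at every pixel.
    a = np.stack([ix.ravel(), iy.ravel()], axis=1)
    b = -it.ravel()
    (u, v), *_ = np.linalg.lstsq(a, b, rcond=None)
    return u, v

def blob(cx, cy, size=41, sigma=4.0):
    """Synthetic frame: a smooth Gaussian blob centred at (cx, cy)."""
    y, x = np.mgrid[0:size, 0:size]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))

# Move the blob half a pixel to the right between frames.
u, v = lucas_kanade(blob(20, 20), blob(20.5, 20))
print(f"u = {u:.2f}, v = {v:.2f}")
```

The recovered `u` comes out close to 0.5 and `v` close to zero, matching the simulated half-pixel move to the right.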

Machine Learning and AI Algorithms: Machine learning (ML) models, particularly deep learning models, play a critical role in computer vision. Trained on massive datasets to identify and classify images, they enable AR and VR devices to understand and respond to real-world stimuli. Further AI algorithms refine the device’s ability to detect objects and improve user interaction over time.

Real-Time Object Detection and Tracking

Marker-Based and Markerless Tracking:

Marker-Based Tracking: Marker-based tracking relies on predefined markers, such as QR codes or specific images, placed within an environment. When a device detects a marker, it uses the marker’s position as a reference point for overlaying digital content. This lets AR apps place objects accurately on specific surfaces, where users can then interact with them relative to the marker.
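A common way to turn a detected marker into an anchor is to compute the homography (a 3x3 projective mapping) between the marker’s known square shape and its four corners as seen in the camera image. The sketch below uses the direct linear transform (DLT) with NumPy; it assumes the corners have already been detected and ordered, and the corner coordinates and function names are hypothetical.

```python
import numpy as np

def homography_from_corners(src, dst):
    """Direct linear transform: find H (3x3) with dst ~ H @ src for 4+ points."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]

def project(h, pts):
    """Apply homography h to an (N, 2) array of points."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]

# Marker corners in the marker's own units (a unit square) ...
marker = [(0, 0), (1, 0), (1, 1), (0, 1)]
# ... and where a (hypothetical) detector found them in the camera image, in pixels.
image = [(300.0, 200.0), (454.5, 190.9), (463.2, 338.5), (320.0, 361.9)]
h = homography_from_corners(marker, image)
# Anchor virtual content at the marker's centre.
print(project(h, [(0.5, 0.5)]))
```

Because the mapping is projective rather than a simple offset, content anchored this way stays glued to the marker even when it is viewed at an angle.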

CITY IN TIME is a marker-based AR app that lets users explore Hong Kong's historical and modern landscapes. Developed by the Tourism Commission and City University of Hong Kong, the app uses red pole markers with QR codes at 13 iconic locations. Scanning the codes activates immersive experiences, allowing users to switch between current views and 3D renderings of past scenes, enhanced with animations, soundscapes, and historical details. This app offers a unique insight into Hong Kong’s cultural transformation for both locals and tourists.

Markerless Tracking: Markerless tracking identifies real-world features, such as patterns or textures on objects, to anchor digital elements in physical space. Using computer vision and ML, the device can detect objects, surfaces, and shapes directly from the environment. This enables broader applications, such as displaying AR graphics on any detected flat surface without physical markers, which adds flexibility and immersion.
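Detecting a flat surface without markers is often framed as fitting a plane to the 3D feature points that tracking has already produced. Commercial frameworks do not document their exact plane-detection internals, so the sketch below shows a generic RANSAC plane fit followed by a least-squares refinement instead. The point cloud is synthetic, and the iteration count and 1 cm inlier threshold (assuming units of metres) are illustrative assumptions.

```python
import numpy as np

def fit_plane_ransac(points, iters=200, threshold=0.01, seed=0):
    """Return (normal, d, inliers) for the plane normal . p + d = 0 supported
    by the most points; `threshold` is the max point-to-plane distance."""
    rng = np.random.default_rng(seed)
    best, best_count = None, 0
    for _ in range(iters):
        a, b, c = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(b - a, c - a)
        length = np.linalg.norm(normal)
        if length < 1e-12:
            continue  # the three points are (nearly) collinear
        normal /= length
        count = np.count_nonzero(np.abs(points @ normal - normal @ a) < threshold)
        if count > best_count:
            best, best_count = (normal, a), count
    if best is None:
        raise ValueError("no non-degenerate sample found")
    normal, a = best
    inliers = np.abs(points @ normal - normal @ a) < threshold
    # Refine with a least-squares fit (SVD) over all inliers.
    pts = points[inliers]
    centroid = pts.mean(axis=0)
    normal = np.linalg.svd(pts - centroid, full_matrices=False)[2][-1]
    d = -normal @ centroid
    inliers = np.abs(points @ normal + d) < threshold
    return normal, d, inliers

# Synthetic scene: a slightly noisy floor at z = 0 plus clutter above it.
rng = np.random.default_rng(1)
floor = np.column_stack([rng.uniform(-1, 1, (300, 2)), rng.normal(0, 0.002, 300)])
clutter = rng.uniform([-1, -1, 0.1], [1, 1, 1.0], (60, 3))
cloud = np.vstack([floor, clutter])
normal, d, inliers = fit_plane_ransac(cloud)
print(np.round(np.abs(normal), 3), inliers.sum())
```

RANSAC ignores the clutter because random samples drawn from the floor gather far more supporting points than any plane through the clutter.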

For example, IKEA Place is a markerless AR app that lets users visualize furniture in their homes with remarkable precision and ease. The app features over 3,000 IKEA products, which users can select, position, and interact with in real time, even changing colors and rotating items to fit their space. Built on Apple’s ARKit, the app scales furniture to room dimensions with 98% accuracy and renders textures, lighting, and shadows for an immersive experience. Users can capture and share their setups and make purchases directly from the app, transforming how people shop for furniture and make decisions.

SLAM (Simultaneous Localization and Mapping):

SLAM is a key computer vision technique in AR and VR that lets a system map its environment while tracking its own position within that map in real time. By combining visual and depth data, SLAM builds and updates a 3D map as users move, keeping virtual objects anchored to real-world spaces. This capability is essential for keeping digital overlays stable and spatially consistent.
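Full SLAM is far too large for a snippet, but one of its core mapping steps can be shown in isolation: triangulating a 3D landmark from the same feature seen at two known camera poses. The sketch below uses linear (DLT) triangulation with NumPy; the camera intrinsics, the 20 cm sideways move, and the landmark position are made-up values for illustration.

```python
import numpy as np

def triangulate(p1, p2, x1, x2):
    """Linear triangulation of one 3D point from two 3x4 projection matrices
    and its pixel coordinates x1, x2 in the two views."""
    a = np.array([
        x1[0] * p1[2] - p1[0],
        x1[1] * p1[2] - p1[1],
        x2[0] * p2[2] - p2[0],
        x2[1] * p2[2] - p2[1],
    ])
    X = np.linalg.svd(a)[2][-1]   # homogeneous solution of a @ X = 0
    return X[:3] / X[3]

def project(p, X):
    """Pinhole projection of 3D point X to pixel coordinates."""
    x = p @ np.append(X, 1.0)
    return x[:2] / x[2]

K = np.array([[500.0, 0, 320], [0, 500.0, 240], [0, 0, 1]])  # assumed intrinsics
p1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])             # first pose
p2 = K @ np.hstack([np.eye(3), [[-0.2], [0.0], [0.0]]])       # camera moved 0.2 m along +x
landmark = np.array([0.1, -0.05, 2.0])
estimate = triangulate(p1, p2, project(p1, landmark), project(p2, landmark))
print(np.round(estimate, 6))
```

In a real SLAM loop the poses are themselves estimates, so triangulation and pose tracking refine each other as more frames arrive.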

Given below is an example of the general components of a visual SLAM system. Depth and inertial data may be added to the 2D visual input to generate a sparse map, a semi-dense map, and a dense reconstruction.

For example, Google’s Project Tango (since discontinued in favor of ARCore) demonstrated SLAM-based AR by using two cameras to detect depth and map physical spaces, allowing devices to understand and interact with their surroundings. This enabled applications such as indoor navigation through SLAM maps, virtual models of real-world environments that help devices distinguish between locations and navigate seamlessly.

Pose Estimation:

Pose estimation in computer vision determines the position and orientation of objects or human bodies in 3D space. In VR, it tracks the user’s head, hands, or body, enabling interactions like picking up objects or waving. By analyzing key points on the body, computer vision algorithms estimate movement and translate it into the virtual environment, so the virtual world responds naturally to physical actions.
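For rigid objects such as a headset or controller, one standard way to recover position and orientation is to align known 3D reference points with their tracked 3D positions using the Kabsch algorithm, an SVD-based least-squares fit. The sketch below assumes point correspondences are already known; the model points, rotation, and translation are invented test values, not data from any real device.

```python
import numpy as np

def rigid_pose(model, observed):
    """Kabsch: find rotation R and translation t minimising
    sum ||R @ model_i + t - observed_i||^2 over all point pairs."""
    mc, oc = model.mean(axis=0), observed.mean(axis=0)
    h = (model - mc).T @ (observed - oc)          # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))        # avoid returning a reflection
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = oc - r @ mc
    return r, t

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]])

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1, 0, 0], [0, c, -s], [0, s, c]])

# Hypothetical tracked points on a headset, in the headset's own frame (metres).
model = np.array([[0.08, 0.0, 0.0], [-0.08, 0.0, 0.0], [0.0, 0.05, 0.02],
                  [0.0, -0.04, 0.06], [0.03, 0.02, -0.05]])
true_r = rot_z(0.5) @ rot_x(0.3)
true_t = np.array([0.1, 1.6, -0.3])
observed = model @ true_r.T + true_t
r, t = rigid_pose(model, observed)
print(np.allclose(r, true_r), np.allclose(t, true_t))  # True True
```

Human body pose is harder because the body is not rigid, but the same idea of fitting a transform to tracked key points applies limb by limb.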

For instance, pose estimation in AR virtual fitting rooms lets users try on clothes digitally by tracking body key points and segmenting the body in real time. Using ML models, the system identifies body parts, measures dimensions, and aligns clothing onto the user’s virtual body. Together, AI-driven pose estimation and body segmentation enable realistic try-ons with personalized sizing and fit recommendations.
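As a rough illustration of the "measures dimensions" step, the sketch below converts the pixel distance between two body keypoints into centimetres, using the user’s stated height as the scale reference. This rests on simplifying assumptions (a front-facing, upright pose with the whole body in frame); the keypoint names and coordinates are hypothetical outputs of a pose-estimation model, and real fitting-room systems combine many more cues.

```python
import math

def shoulder_width_cm(keypoints, height_cm):
    """Estimate shoulder width from 2D keypoints (pixels), assuming a
    front-facing, upright pose so the head-to-ankle span equals the height."""
    top_y = keypoints["head_top"][1]
    bottom_y = (keypoints["left_ankle"][1] + keypoints["right_ankle"][1]) / 2
    cm_per_pixel = height_cm / (bottom_y - top_y)
    pixels = math.dist(keypoints["left_shoulder"], keypoints["right_shoulder"])
    return pixels * cm_per_pixel

# Hypothetical keypoints (x, y) in image pixels, y increasing downwards.
kp = {
    "head_top": (320, 50),
    "left_shoulder": (420, 200),
    "right_shoulder": (220, 200),
    "left_ankle": (350, 850),
    "right_ankle": (290, 850),
}
print(round(shoulder_width_cm(kp, height_cm=160), 1))  # 40.0
```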

Depth Perception and Spatial Mapping Techniques

Depth Sensing:

Depth sensing uses sensors such as LiDAR (Light Detection and Ranging) and ToF (Time-of-Flight) cameras to measure, in real time, how far objects are from the device. These sensors emit infrared light or laser pulses and measure how long the signal takes to bounce back, which yields a precise distance to each object. This depth information is crucial for placing virtual objects in real space with accurate layering, for example so that a real table correctly hides the part of a virtual object behind it.
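The underlying arithmetic is simple: light travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. Many ToF cameras measure this indirectly, as the phase shift of continuously modulated light, which gives `d = c * phase / (4 * pi * f)` with an unambiguous range of `c / (2 * f)`. The helper names and the 20 MHz modulation frequency below are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def distance_from_round_trip(seconds):
    """Direct ToF / LiDAR: the pulse covers the distance twice."""
    return SPEED_OF_LIGHT * seconds / 2

def distance_from_phase(phase_rad, mod_freq_hz):
    """Indirect (continuous-wave) ToF: distance from the phase shift of
    light modulated at mod_freq_hz; valid up to c / (2 * f)."""
    return SPEED_OF_LIGHT * phase_rad / (4 * math.pi * mod_freq_hz)

print(f"{distance_from_round_trip(10e-9):.3f} m")        # 1.499 m
print(f"{distance_from_phase(math.pi, 20e6):.3f} m")     # 3.747 m
print(f"{SPEED_OF_LIGHT / (2 * 20e6):.3f} m max range")  # 7.495 m max range
```

The numbers show why the timing must be so precise: a 10 ns round trip corresponds to only about 1.5 m.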

The LiDAR sensor on iPhone Pro models significantly enhances AR experiences by measuring depth with remarkable precision. For example, students studying anatomy can use AR to place accurate, life-sized 3D models of organs or skeletons in the room. LiDAR keeps the models fixed in place, allowing students to walk around and explore them from all angles, making learning more engaging and hands-on, even outside the classroom.

3D Reconstruction and Environmental Understanding:

3D reconstruction and environmental understanding work together in AR to create immersive experiences by accurately interpreting and modeling real-world spaces. 3D reconstruction builds a digital framework of a room or environment, allowing virtual elements to align naturally with physical surroundings. Environmental understanding then enables the system to recognize specific surfaces—like floors, tables, or walls—so that digital objects can be placed in context, enhancing realism.
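A typical first step in 3D reconstruction is back-projecting a depth map into a point cloud with the pinhole camera model: a pixel `(u, v)` with depth `z` maps to `x = (u - cx) * z / fx` and `y = (v - cy) * z / fy`. The intrinsics and the tiny depth map below are made-up values; a real pipeline would then fuse clouds from many frames using the tracked camera poses.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an (H, W) depth map in metres to an (N, 3) point cloud
    in the camera frame; pixels with depth 0 (no reading) are dropped."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]          # row (v) and column (u) indices
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]

depth = np.full((4, 4), 2.0)   # a flat wall 2 m away ...
depth[0, 0] = 0.0              # ... with one missing reading
cloud = depth_to_points(depth, fx=2.0, fy=2.0, cx=1.5, cy=1.5)
print(cloud.shape)  # (15, 3)
```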

In indoor navigation, AR apps using 3D reconstruction and environmental understanding help users navigate complex spaces like airports. For example, an AR app can scan the terminal to create a 3D map and provide real-time directions, such as "Turn left at Gate 12" or "Your gate is on the second floor." Environmental understanding ensures accurate placement of directions, helping users navigate easily while avoiding obstacles.
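Once a map exists, turning it into directions such as "Turn left at Gate 12" is a path-planning problem. The sketch below assumes the 3D map has already been flattened into a hypothetical 2D occupancy grid (0 = walkable, 1 = blocked), finds a shortest route with breadth-first search, and converts changes of heading into turn instructions. Real systems would use richer maps, weighted costs, and named landmarks rather than grid cells.

```python
from collections import deque

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # down, up, right, left as (row, col)

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            break
        for dr, dc in STEPS:
            r, c = cell[0] + dr, cell[1] + dc
            if 0 <= r < rows and 0 <= c < cols and grid[r][c] == 0 and (r, c) not in came_from:
                came_from[(r, c)] = cell
                queue.append((r, c))
    if goal not in came_from:
        return None  # goal is unreachable
    path, cell = [], goal
    while cell is not None:
        path.append(cell)
        cell = came_from[cell]
    return path[::-1]

def turn_instructions(path):
    """Emit a turn wherever the walking direction changes (rows grow downward)."""
    out = []
    for a, b, c in zip(path, path[1:], path[2:]):
        d1 = (b[0] - a[0], b[1] - a[1])
        d2 = (c[0] - b[0], c[1] - b[1])
        cross = d1[0] * d2[1] - d1[1] * d2[0]
        if cross > 0:
            out.append(f"Turn left at {b}")
        elif cross < 0:
            out.append(f"Turn right at {b}")
    return out

grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
route = shortest_path(grid, (0, 0), (2, 0))
print(turn_instructions(route))  # ['Turn right at (0, 3)', 'Turn right at (2, 3)']
```

The sign of the 2D cross product between consecutive headings tells left from right, which is all the AR layer needs to choose which arrow to draw.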

Computer vision is elevating the immersive potential of AR and VR, making environments more interactive, responsive, and lifelike through advancements in object tracking, spatial mapping, and depth perception. These capabilities enable industries to design impactful, context-rich experiences that are reshaping user engagement and interaction.

To learn more about harnessing the power of computer vision or to discuss how it can benefit your organization, visit the Random Walk website and reach out for a personalized consultation. Let’s bring your vision to life with advanced computer vision AI technology.