Spatial computing is emerging as a transformative force in digital innovation, integrating virtual experiences into the physical world. While companies like Microsoft and Meta have made significant strides in this space, Apple’s launch of the Apple Vision Pro AR/VR headset signals a pivotal moment for the technology. This emerging field combines elements of augmented reality (AR), virtual reality (VR), and mixed reality (MR) with advanced sensor technologies and artificial intelligence to blend the physical and digital worlds. This shift demands a new multimodal interaction paradigm, along with supporting infrastructure that connects digital data to the physical spaces it describes.

What Is Spatial Computing?

Spatial computing is a 3D-centric computing paradigm that integrates AI, computer vision, and extended reality (XR) to blend virtual experiences into the physical world. It can turn almost any surface into a screen or interface, enabling seamless interactions between humans, devices, and virtual entities. By combining software, hardware, and data, spatial computing supports natural interactions and improves how we visualize, simulate, and engage with information. Alongside AI and XR, it draws on IoT (Internet of Things) devices, sensors, and other technologies to create immersive experiences that boost productivity and creativity. XR handles spatial perception and interaction, while IoT connects the devices and objects in the environment.

Spatial computing supports innovations like robots, drones, cars, and virtual assistants, enhancing connections between humans and devices. While AR on today’s smartphones hints at its potential, future advances in optics, sensor miniaturization, and 3D imaging will expand its capabilities. Wearable headsets with cameras, sensors, and new input methods such as gestures and voice will offer intuitive interactions, replacing fixed screens with an effectively infinite canvas and enabling real-time mapping of physical environments.

The key components of spatial computing include:

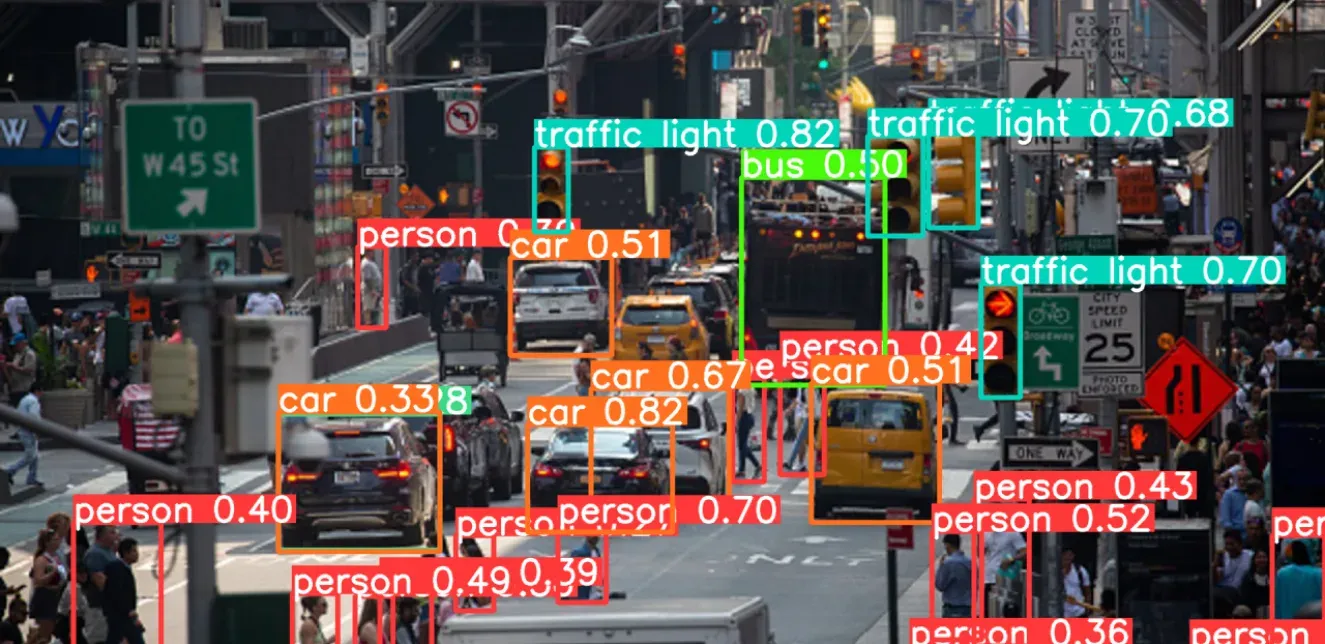

AI and Computer Vision: These technologies enable systems to process visual data and identify objects, people, and spatial structures.

SLAM: Simultaneous Localization and Mapping (SLAM) enables devices to create dynamic maps of their environment while tracking their own position. This technique underpins navigation and interaction, making experiences immersive and responsive to user movements.

Gesture Recognition and Natural Language Processing (NLP): These technologies enable intuitive interaction with digital overlays by translating gestures, movements, and spoken words into commands.

Spatial Mapping: This process generates 3D models of the environment, allowing digital content to be placed and manipulated precisely.
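
The mapping half of SLAM can be sketched with a 2D occupancy grid. The Python below is a minimal illustration, not a SLAM implementation: it assumes the sensor pose is already known (full SLAM estimates pose and map together) and uses hard free/occupied labels where production systems typically use probabilistic updates. The function name, cell encoding, and parameters are hypothetical.

```python
import math

# Cell states for a simple 2D occupancy grid (hypothetical encoding).
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def update_grid(grid, pose, scans, resolution=0.1, max_range=5.0):
    """Integrate one range scan into an occupancy grid, assuming the pose is known.

    grid: list of rows (indexed by y), each a list of cells (indexed by x);
          cell (0, 0) covers world coordinates [0, resolution) on both axes.
    pose: (x, y, theta) of the sensor in world coordinates, theta in radians.
    scans: iterable of (bearing, distance) pairs relative to the sensor heading.
    """
    x0, y0, theta = pose
    rows, cols = len(grid), len(grid[0])

    def to_cell(px, py):
        # Convert world coordinates to (row, col) grid indices.
        return math.floor(py / resolution), math.floor(px / resolution)

    for bearing, dist in scans:
        angle = theta + bearing
        hit = dist < max_range  # a reading at max_range means "no return"
        d = min(dist, max_range)
        # Sample the beam at resolution-sized steps; these cells are free space.
        for i in range(int(d / resolution)):
            r, c = to_cell(x0 + i * resolution * math.cos(angle),
                           y0 + i * resolution * math.sin(angle))
            if 0 <= r < rows and 0 <= c < cols and grid[r][c] != OCCUPIED:
                grid[r][c] = FREE
        # The beam endpoint is where the sensor saw a surface.
        if hit:
            r, c = to_cell(x0 + d * math.cos(angle), y0 + d * math.sin(angle))
            if 0 <= r < rows and 0 <= c < cols:
                grid[r][c] = OCCUPIED
    return grid


if __name__ == "__main__":
    grid = [[UNKNOWN] * 5]  # a 1 x 5 corridor with 1 m cells
    update_grid(grid, (0.5, 0.5, 0.0), [(0.0, 3.0)], resolution=1.0)
    print(grid[0])  # [0, 0, 0, 1, -1]
```

Each beam marks the cells it passes through as free and its endpoint as occupied; repeating this from many poses gradually fills in the map.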

Deepening User Engagement with Immersive Experiences

The integration of spatial computing with computer vision is ushering in a new era of interactive and immersive user experiences. By understanding the user’s position and orientation in space, as well as the layout of their environment, spatial computing can create digital overlays and interfaces that feel natural and intuitive.

Gesture Recognition and Object Manipulation: AI enables natural interaction with virtual objects through gesture recognition. By handling object occlusion (so that real objects correctly hide virtual ones) and ensuring realistic placement, AI helps blend the physical and digital worlds seamlessly, making experiences more immersive.

Natural Language Processing (NLP): NLP facilitates intuitive interactions by allowing users to communicate with spatial computing systems through natural language commands. This supports voice-activated controls and conversational interfaces, making interactions with virtual environments more fluid and user-friendly. NLP also helps systems interpret user intent and provide contextual responses, enhancing the overall immersive experience.

Augmented Reality (AR): AR overlays digital information onto the real world, enriching user experiences with context-aware information and enabling spatial interactions. It supports gesture recognition, touchless interactions, and navigation, making spatial computing applications more intuitive and engaging.

Virtual Reality (VR): VR immerses users in fully computer-generated environments, facilitating applications like training simulations, virtual tours, and spatial design. It enables virtual collaboration and detailed spatial data visualization, enhancing user immersion and interaction.

Mixed Reality (MR): MR combines physical and digital elements, creating immersive mixed environments. It uses spatial anchors for accurate digital object positioning and offers hands-free interaction through gaze and gesture control, improving user engagement with spatial content.

Decentralized and Transparent Data: Blockchain technology can help protect the integrity and reliability of spatial data by recording it on a secure, decentralized ledger. This can strengthen user trust and control over location-based information, contributing to a more secure and trustworthy spatial computing experience.
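
Spatial anchors, mentioned under Mixed Reality above, can be illustrated with a small coordinate transform. In this hypothetical Python sketch (not the ARKit, ARCore, or OpenXR API), an anchor stores a world position and a unit-quaternion orientation, and virtual content is stored as offsets relative to the anchor. If the tracking system later refines the anchor’s pose, everything attached to it moves consistently.

```python
import math
from dataclasses import dataclass


def quat_rotate(q, v):
    """Rotate 3D vector v by unit quaternion q = (w, x, y, z)."""
    w, ux, uy, uz = q
    vx, vy, vz = v
    # t = 2 * (u x v)
    tx = 2 * (uy * vz - uz * vy)
    ty = 2 * (uz * vx - ux * vz)
    tz = 2 * (ux * vy - uy * vx)
    # v' = v + w * t + (u x t), equivalent to q * v * q^-1 for a unit quaternion
    return (
        vx + w * tx + (uy * tz - uz * ty),
        vy + w * ty + (uz * tx - ux * tz),
        vz + w * tz + (ux * ty - uy * tx),
    )


@dataclass
class SpatialAnchor:
    """Hypothetical anchor: a pose the tracking system keeps fixed to a real-world spot."""
    position: tuple  # (x, y, z) in world coordinates, metres
    rotation: tuple  # unit quaternion (w, x, y, z)

    def to_world(self, local_point):
        """Convert a point expressed relative to the anchor into world coordinates."""
        rx, ry, rz = quat_rotate(self.rotation, local_point)
        px, py, pz = self.position
        return (px + rx, py + ry, pz + rz)


if __name__ == "__main__":
    s = math.sqrt(0.5)
    # Anchor 1 m along x, turned 90 degrees about the z axis.
    anchor = SpatialAnchor(position=(1.0, 0.0, 0.0), rotation=(s, 0.0, 0.0, s))
    print(anchor.to_world((1.0, 0.0, 0.0)))  # approximately (1.0, 1.0, 0.0)
```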

For example, in the workplace, spatial computing is redefining remote collaboration. Virtual meeting spaces can now mimic the spatial dynamics of physical conference rooms, with participants represented by avatars that move and interact in three-dimensional space. This approach preserves important non-verbal cues and spatial relationships that are lost in traditional video conferencing, leading to more natural and effective remote interactions.

For creative professionals, spatial computing offers new tools for expression and design. Architects can walk clients through virtual models of buildings, adjusting designs in real time based on feedback. Artists can sculpt digital clay in mid-air, using the precision of spatial tracking to create intricate 3D models.

Advanced 3D Mapping and Object Tracking

The core of spatial computing’s capabilities lies in its advanced 3D mapping and object tracking technologies. These systems use a combination of sensors, including depth cameras, inertial measurement units, and computer vision algorithms, to create detailed, real-time maps of the environment and track the movement of objects within it.
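
As an illustration of how depth-camera data becomes part of a 3D map, the sketch below back-projects depth pixels into 3D points with the standard pinhole camera model. It assumes an OpenCV-style camera frame (x right, y down, z forward), and the intrinsic parameters in the example are illustrative; real values come from camera calibration. A full pipeline would then transform these camera-frame points into world coordinates using the device pose estimated from the IMU and visual tracking, which is omitted here. The function names are hypothetical.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Map pixel (u, v) with depth (metres) to a 3D point in the camera frame.

    Pinhole model: fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    Frame convention assumed: x right, y down, z forward.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def depth_to_points(depth_image, fx, fy, cx, cy):
    """Convert a depth image (rows of depth values) into a list of 3D points."""
    points = []
    for v, row in enumerate(depth_image):
        for u, z in enumerate(row):
            if z > 0:  # treat zero depth as "no measurement"
                points.append(backproject(u, v, z, fx, fy, cx, cy))
    return points


if __name__ == "__main__":
    # Illustrative intrinsics for a 640 x 480 sensor.
    print(backproject(420, 240, 2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0))
    # (0.4, 0.0, 2.0)
```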

Scene Understanding and Mapping: AI integrates physical environments with digital information by understanding scenes, recognizing and tracking objects, recognizing gestures, tracking body movements, detecting interactions, and handling object occlusions. Computer vision makes this possible, producing accurate 3D representations and realistic interactions within mixed reality environments. Environmental mapping techniques use SLAM to build real-time maps of the user’s surroundings, which allows virtual content to be anchored accurately and to stay spatially coherent as the user moves.

Sensor Integration: Sensors such as depth sensors, RGB cameras, infrared sensors, and LiDAR (Light Detection and Ranging), often deployed as IoT devices, capture the spatial data that advanced 3D mapping and object tracking depend on. They provide critical information about the user’s surroundings, supporting the creation of detailed and accurate spatial maps.

AR and VR for Mapping: AR and VR technologies utilize advanced 3D mapping and object tracking to deliver immersive spatial experiences. AR overlays spatial data onto the real world, while VR provides fully immersive environments for detailed spatial design and interaction.

Data Integrity and Provenance: Blockchain technology can help preserve the integrity and provenance of spatial data by creating a tamper-evident record of 3D maps and tracking information. This improves the reliability and transparency of spatial computing systems.
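
The tamper-evidence described above comes from hash linking, which can be sketched in a few lines of Python. This is only the building block: each record stores the hash of the previous one, so altering an earlier map version breaks verification. A blockchain adds replication across independent parties and a consensus protocol, neither of which this single-process sketch implements. The record layout, function names, and example map values are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(prev_hash, payload):
    """SHA-256 over the previous hash and a canonical JSON encoding of the payload."""
    data = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def append_record(chain, payload):
    """Append a map or tracking update, linking it to the previous record."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev, "payload": payload, "hash": record_hash(prev, payload)})
    return chain


def verify_chain(chain):
    """Return True only if every record's link and hash still match its contents."""
    prev = GENESIS
    for record in chain:
        if record["prev"] != prev or record["hash"] != record_hash(prev, record["payload"]):
            return False
        prev = record["hash"]
    return True


if __name__ == "__main__":
    log = []
    append_record(log, {"map_id": "floor-2", "version": 1, "points": 120000})
    append_record(log, {"map_id": "floor-2", "version": 2, "points": 123500})
    print(verify_chain(log))  # True
    log[0]["payload"]["points"] = 999  # tamper with an earlier map version
    print(verify_chain(log))  # False
```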

For example, in warehouses, spatial computing systems can guide autonomous robots through complex environments, optimizing paths and avoiding obstacles in real time. For consumers, indoor navigation in large spaces like airports or shopping malls becomes intuitive and precise, with AR overlays providing contextual information and directions.
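
Path optimization with obstacle avoidance is often illustrated with A* search over a grid map. The Python below is a minimal sketch under simplifying assumptions (a static 2D grid, 4-connected unit-cost moves, a single robot); it is not a description of how any particular warehouse system plans routes, and real fleets typically also handle moving obstacles and multi-robot coordination. The grid encoding and function name are hypothetical.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid map, or None if the goal is unreachable.

    grid: list of strings where '#' marks an obstacle (e.g. a shelf) and '.' is free.
    start, goal: (row, col) tuples.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from = {start: None}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk back through predecessors
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


if __name__ == "__main__":
    warehouse = [
        "....",
        ".##.",
        "....",
    ]
    print(astar(warehouse, (0, 0), (2, 3)))
```

Because the Manhattan heuristic never overestimates the remaining cost on this grid, A* returns a shortest path; when several paths tie, which one is returned depends on heap ordering.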

The construction industry benefits from spatial computing through improved site management and workplace safety monitoring. Wearable devices equipped with spatial computing capabilities can alert workers to potential hazards, provide real-time updates on project progress, and ensure that construction aligns precisely with digital blueprints.
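
A hazard alert of the kind described above can reduce to a proximity check between the worker’s tracked position and known hazard zones. This Python sketch assumes circular zones in a flat site coordinate system; the class, zone names, radii, and default warning distance are hypothetical, and a real deployment might use polygons or 3D volumes taken from the digital site model.

```python
import math
from dataclasses import dataclass


@dataclass
class HazardZone:
    """Hypothetical circular hazard area in site coordinates (metres)."""
    name: str
    x: float
    y: float
    radius: float


def hazard_alerts(worker_xy, zones, warning_distance=2.0):
    """Return (zone name, distance to the zone edge) for zones within warning_distance.

    A distance of 0.0 means the worker is already inside the zone.
    Results are sorted nearest first.
    """
    wx, wy = worker_xy
    alerts = []
    for zone in zones:
        gap = math.hypot(wx - zone.x, wy - zone.y) - zone.radius
        if gap <= warning_distance:
            alerts.append((zone.name, max(gap, 0.0)))
    return sorted(alerts, key=lambda alert: alert[1])


if __name__ == "__main__":
    zones = [
        HazardZone("crane swing area", 10.0, 0.0, 3.0),
        HazardZone("open trench", 0.0, 0.0, 1.0),
    ]
    print(hazard_alerts((6.0, 0.0), zones))  # [('crane swing area', 1.0)]
```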

The future of user interaction lies not in the flat screens of our current devices but in the three-dimensional space around us. As spatial computing technologies continue to evolve and mature, we can expect to see increasingly seamless integration between the digital and physical worlds, leading to more intuitive, efficient, and engaging experiences in every aspect of our lives.

Spatial computing represents more than just a new set of tools; it’s a new way of thinking about our relationship with technology and the world around us. At Random Walk, we explore and expand the boundaries of what’s possible with our AI integration services. To learn more about our visual AI services and integrate them into your workflow, contact us for a one-on-one consultation with our AI experts.