I’ve worked with LangChain. I’ve played with LlamaIndex. They’re great—until they aren’t.

At some point, I realized I was using tools that made things feel more magical than understandable. So I asked myself: what if I built an AI agent from scratch? No framework glue. No black-box abstractions. Just code, logic, and raw capability.

Here’s what happened—and what I learned along the way.

Meet the Use Case: A Restaurant Recommendation Agent

I wanted a real-world use case. So I picked something familiar yet non-trivial: a restaurant recommendation + reservation agent.

It should:

- Understand what users want ("table for 4 tomorrow at 7 PM")
- Ask clarifying questions
- Recommend places
- Book reservations

That meant building an actual AI agent—not just a chatbot.

Getting Into Agent Mode

Agents aren't just text predictors—they act, plan, and adapt. Mine needed:

- A brain: I used LLaMA 3.1 locally.
- A memory: Something to track past turns.
- Tools: To perform tasks like suggesting restaurants or making bookings.
- A controller: The logic to decide what to do next.
- And a UI: Even a simple terminal would do.

I wasn’t just writing code—I was designing cognition.

Building Our Restaurant Agent

Let's examine how each component is implemented in our example restaurant agent:

1. Setting Up the Model

```python
# Using Llama 3.1-8B locally
# In main.py, we initialize the model
```

When building from scratch, you have the flexibility to choose any model and integration method:

- Local models (Llama, Mistral, etc.)
- API-based models (OpenAI, Anthropic, etc.)
- Custom fine-tuned models

The key is setting up a consistent interface for sending prompts and receiving responses.
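One way to get that consistent interface is to treat the model as any function that takes a prompt string and returns a completion string. The sketch below assumes the Llama 3.1 8B model is served locally through Ollama's HTTP API (`/api/generate` on port 11434). The article does not say how the model is hosted, so `make_ollama_llm`, `make_fake_llm`, and the `llama3.1:8b` tag are illustrative choices, not the author's code.

```python
import json
import urllib.request
from typing import Callable

# The rest of the agent depends only on this shape: prompt in, text out.
LLM = Callable[[str], str]


def make_ollama_llm(model_name: str = "llama3.1:8b",
                    url: str = "http://localhost:11434/api/generate") -> LLM:
    """Hypothetical wrapper around a locally running Ollama server (an assumption)."""
    def llm(prompt: str) -> str:
        payload = json.dumps(
            {"model": model_name, "prompt": prompt, "stream": False}
        ).encode("utf-8")
        request = urllib.request.Request(
            url, data=payload, headers={"Content-Type": "application/json"}
        )
        with urllib.request.urlopen(request) as response:
            # With streaming disabled, Ollama returns a single JSON object
            # whose "response" field holds the generated text.
            return json.loads(response.read())["response"]
    return llm


def make_fake_llm(canned_reply: str) -> LLM:
    """Deterministic stand-in for offline development and tests."""
    def llm(prompt: str) -> str:
        return canned_reply
    return llm
```

Both factories return the same callable type. Swapping a local model for an API-based one, or for a fake during testing, therefore changes only one line: `llm = make_ollama_llm()` versus `llm = make_fake_llm("RECOMMEND_RESTAURANT")`.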

2. Implementing Context Management

Maintaining conversation history is crucial for coherent interactions:

```python
# Simple context implementation
class ConversationContext:
    def __init__(self, max_turns=10):
        self.history = []
        self.max_turns = max_turns

    def add_exchange(self, user_input, agent_response):
        self.history.append({"user": user_input, "agent": agent_response})
        # Trim if exceeding max context
        if len(self.history) > self.max_turns:
            self.history = self.history[1:]

    def get_formatted_context(self):
        formatted = ""
        for exchange in self.history:
            formatted += f"User: {exchange['user']}\nAgent: {exchange['agent']}\n\n"
        return formatted
```

This simple context manager tracks conversation turns and formats them for inclusion in prompts.
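The manual `self.history[1:]` trim works. Python's `collections.deque` with `maxlen` expresses the same sliding window more directly, because appending to a full deque silently drops the oldest entry. This is a design alternative, not the article's implementation, and the class name `DequeContext` is hypothetical.

```python
from collections import deque


class DequeContext:
    """Sliding-window conversation memory, capped at max_turns exchanges."""

    def __init__(self, max_turns: int = 10):
        # A deque with maxlen discards its oldest item once it is full.
        self.history = deque(maxlen=max_turns)

    def add_exchange(self, user_input: str, agent_response: str) -> None:
        self.history.append({"user": user_input, "agent": agent_response})

    def get_formatted_context(self) -> str:
        return "".join(
            f"User: {turn['user']}\nAgent: {turn['agent']}\n\n"
            for turn in self.history
        )


ctx = DequeContext(max_turns=2)
for i in range(3):
    ctx.add_exchange(f"question {i}", f"answer {i}")
print(ctx.get_formatted_context())  # only turns 1 and 2 remain
```

Counting exchanges is simple, but it does not bound prompt length in tokens. A few long answers can still overflow a small model's context window.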

3. Defining Tools

Tools are functions that extend the agent's capabilities beyond just conversation:

```python
# Assumption: `llm` is the prompt-in, text-out model function initialized in main.py

def find_intent(user_input, context):
    # Prompt the LLM to identify user intent
    prompt = f"""
Based on the following user input and conversation context,
identify the primary intent (RECOMMEND_RESTAURANT, MAKE_RESERVATION, or GENERAL_INQUIRY):
Context: {context}
User input: {user_input}
Intent:
"""
    return llm(prompt).strip()


def suggest_restaurant(preferences, context):
    # Tool to recommend restaurants based on user preferences
    prompt = f"""
Based on these user preferences: {preferences}
Suggest 3 restaurants with details about cuisine, price range, and seating options.
"""
    return llm(prompt).strip()


def make_reservation(user_input, context):
    # Multi-turn reservation tool
    # Extract information or ask for missing details
    required_info = ["name", "date", "time", "party_size"]
    # Logic to extract or request missing information
    # ...
```

Unlike framework-based implementations, our custom tools:


- Can be synchronous or asynchronous
- Are tailored exactly to our application's needs
- Can include complex logic like multi-turn interactions
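The reservation tool's extraction logic is elided above. A common way to structure this kind of multi-turn tool is slot filling: keep the details collected so far in a `state` dict, merge in whatever the latest message provides, and ask for the first field that is still missing. In the sketch below, the field names mirror the article's `required_info` list. The helpers `extract_slots` and `make_reservation_step` and the question wording are hypothetical. Crude regular expressions stand in for LLM-based extraction purely so the example runs on its own.

```python
import re

REQUIRED_INFO = ["name", "date", "time", "party_size"]

# Hypothetical follow-up questions, one per missing slot.
QUESTIONS = {
    "name": "What name should the reservation be under?",
    "date": "What date would you like to book?",
    "time": "What time works for you?",
    "party_size": "How many people will be dining?",
}


def extract_slots(user_input: str) -> dict:
    """Toy extractor; a real agent would ask the LLM for structured output."""
    slots = {}
    if m := re.search(r"(?:table|party) for (\d+)", user_input, re.I):
        slots["party_size"] = int(m.group(1))
    if m := re.search(r"\b(\d{1,2}(?::\d{2})?\s*[ap]m)\b", user_input, re.I):
        slots["time"] = m.group(1).upper()
    if m := re.search(r"\b(today|tomorrow)\b", user_input, re.I):
        slots["date"] = m.group(1).lower()
    if m := re.search(r"(?:name is|under) ([A-Z][a-z]+)", user_input):
        slots["name"] = m.group(1)
    return slots


def make_reservation_step(user_input: str, state: dict) -> str:
    """Merge new details into state, then ask for the next missing one."""
    state.update(extract_slots(user_input))
    for field in REQUIRED_INFO:
        if field not in state:
            return QUESTIONS[field]
    return (f"Booking a table for {state['party_size']}, {state['date']} "
            f"at {state['time']}, under {state['name']}.")


state = {}
print(make_reservation_step("table for 4 tomorrow at 7 PM", state))
print(make_reservation_step("The name is Priya", state))
```

Because `state` persists between calls, the controller can keep routing follow-up messages to this tool until every slot is filled.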

4. Building the Controller

The controller is the orchestration layer that determines which tools to use based on user input:

```python
class RestaurantAgent:
    def __init__(self, model):
        self.model = model
        self.context = ConversationContext()

    def process_input(self, user_input):
        # Get current context
        context = self.context.get_formatted_context()
        # Determine intent
        intent = find_intent(user_input, context)
        # Select appropriate tool based on intent
        if intent == "RECOMMEND_RESTAURANT":
            response = suggest_restaurant(user_input, context)
        elif intent == "MAKE_RESERVATION":
            response = make_reservation(user_input, context)
        else:
            # General conversation
            response = self.handle_general_inquiry(user_input, context)
        # Update context
        self.context.add_exchange(user_input, response)
        return response

    def handle_general_inquiry(self, user_input, context):
        prompt = f"""
You are a helpful restaurant assistant.
Conversation history:
{context}
User: {user_input}
Assistant:
"""
        return self.model(prompt).strip()
```

The controller follows a simple pattern:

- Analyze the user input to determine intent
- Select the appropriate tool based on intent
- Execute the tool and generate a response
- Update conversation context
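One fragile point in this pattern is the exact string comparison `intent == "RECOMMEND_RESTAURANT"`. Local models often wrap the label in extra words or punctuation, such as `Intent: MAKE_RESERVATION.`, and that output would silently fall through to the general branch. A small normalizer can map free-form output back to a known label before routing. It is sketched here as a hypothetical helper; `normalize_intent` is not part of the original code.

```python
import re

INTENTS = ("RECOMMEND_RESTAURANT", "MAKE_RESERVATION", "GENERAL_INQUIRY")


def normalize_intent(raw_output: str, default: str = "GENERAL_INQUIRY") -> str:
    """Return a known intent label if one appears in the model's raw output."""
    # Uppercase and turn runs of spaces or hyphens into underscores, so that
    # "make reservation" and "Make-Reservation" both match MAKE_RESERVATION.
    cleaned = re.sub(r"[\s\-]+", "_", raw_output.upper())
    for label in INTENTS:
        if label in cleaned:
            return label
    return default


print(normalize_intent("Intent: MAKE_RESERVATION."))  # MAKE_RESERVATION
```

In `process_input`, the routing line would become `intent = normalize_intent(find_intent(user_input, context))`.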

5. Creating a Simple UI

A terminal loop is all the interface the agent needs to get started:

```python
# Simple terminal-based UI example
def main():
    # `model` is the prompt-to-text model initialized earlier in main.py
    agent = RestaurantAgent(model)
    print("Restaurant Assistant: Hello! How can I help you find the perfect dining experience today?")
    while True:
        user_input = input("You: ")
        if user_input.strip().lower() in ["exit", "quit", "bye"]:
            break
        response = agent.process_input(user_input)
        print(f"Restaurant Assistant: {response}")


if __name__ == "__main__":
    main()
```

For web-based applications, you might implement a simple Flask or FastAPI backend with a JavaScript frontend.

Advantages of Building Agents from Scratch

The Hugging Face Agents Course highlights several benefits of building from scratch rather than relying on frameworks:

Challenges and Considerations

While building from scratch offers advantages, it comes with challenges:

Extending Your Agent

Once you have the basic architecture, you can enhance your agent with:

Advanced Agent Patterns

As you evolve your agent architecture, consider these advanced patterns:

ReAct: Reasoning and Acting

The ReAct pattern combines reasoning and acting in an iterative loop:

This pattern helps agents tackle complex problems by breaking them down into manageable steps and incorporating feedback at each stage.
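A minimal ReAct loop alternates two kinds of step. The model writes a Thought and an Action; the tool's result comes back as an Observation, which is appended to the transcript so the model sees it on the next step. The sketch below is illustrative only. The `Action: tool[input]` and `Final Answer:` text format is a common convention, not something from this article. The `search_restaurants` tool and its results are hypothetical, and a scripted fake model stands in for Llama so the loop can run offline.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")


def react_loop(question: str, llm: Callable[[str], str],
               tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model writes a Thought plus an Action or a Final Answer
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: could not parse an action.\n"
            continue
        name, arg = match.groups()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool '{name}'"
        transcript += f"Observation: {observation}\n"  # feedback for the next step
    return "Stopped after reaching max_steps without a final answer."


# Hypothetical tool and a scripted model, used only to exercise the loop.
def search_restaurants(query: str) -> str:
    return "Trattoria Roma (Italian, $$), Sakura (Japanese, $$$)"


scripted = iter([
    "Thought: I should look up options.\nAction: search_restaurants[italian downtown]",
    "Thought: Trattoria Roma fits.\nFinal Answer: Try Trattoria Roma.",
])
answer = react_loop("Italian food downtown?", lambda prompt: next(scripted),
                    {"search_restaurants": search_restaurants})
print(answer)  # Try Trattoria Roma.
```

The `max_steps` cap matters in practice: without it, a model that never emits a final answer would loop forever.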

Self-Reflection and Refinement

Advanced agents can benefit from self-reflection capabilities:

```python
def self_reflect(agent_response, user_query, context):
    prompt = f"""
Review your proposed response:
{agent_response}
Based on the user query:
{user_query}
And conversation context:
{context}
Is this response complete, accurate, and helpful?
If not, provide an improved response:
"""
    return llm(prompt)
```

This gives the agent a second pass to catch its own mistakes and improve a response before the user sees it, at the cost of one extra model call per turn.

Planning with Tool Composition

Complex tasks often require multiple tools used in sequence. A planning component can help:


```python
import json


def create_plan(user_input, available_tools, context):
    prompt = f"""
Based on the user request: {user_input}
Create a step-by-step plan using these available tools:
{available_tools}
Return a JSON array of steps, each with:
- tool_name: The tool to use
- tool_input: The parameters to pass
- description: Why this step is needed
Respond with the JSON array only, with no other text.
"""
    plan = llm(prompt)
    # json.loads raises ValueError if the model adds text around the array
    return json.loads(plan)
```

This allows agents to decompose complex tasks into manageable sequences of tool calls.
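Producing a plan is only half the job; something still has to run it. A minimal executor can walk the parsed steps in order, look up each `tool_name` in a registry of plain Python functions, and record what each step returned. The registry, the tool lambdas, and the sample plan below are hypothetical. The sketch also assumes the model returned a bare JSON array with the three fields requested in the prompt.

```python
import json
from typing import Any, Callable, Dict, List


def execute_plan(plan: List[dict], tools: Dict[str, Callable[..., Any]]) -> List[dict]:
    """Run each planned step in order and record what happened."""
    results = []
    for step in plan:
        name = step.get("tool_name")
        tool = tools.get(name)
        if tool is None:
            # Stop early rather than guess when the model names an unknown tool.
            results.append({"tool_name": name, "error": "unknown tool"})
            break
        params = step.get("tool_input", {})
        output = tool(**params) if isinstance(params, dict) else tool(params)
        results.append({"tool_name": name, "output": output})
    return results


# Hypothetical tools and a hand-written plan standing in for llm(prompt) output.
tools = {
    "suggest_restaurant": lambda cuisine: f"3 {cuisine} options",
    "make_reservation": lambda name, party_size: f"Booked for {name} ({party_size})",
}
raw_plan = """[
  {"tool_name": "suggest_restaurant", "tool_input": {"cuisine": "Thai"},
   "description": "Find candidates"},
  {"tool_name": "make_reservation", "tool_input": {"name": "Sam", "party_size": 2},
   "description": "Book the first option"}
]"""
for result in execute_plan(json.loads(raw_plan), tools):
    print(result)
```

Passing each step's output into later steps would be the natural next extension. It is left out here to keep the control flow easy to follow.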

Component by Component

The Model

I wrapped the LLaMA model with a basic prompt-response interface. Nothing fancy. But I controlled everything—which model, how much context, how responses were generated.

The Context Manager

I built a class to handle turn-based memory. Ten exchanges max. Formatted nicely for the model. It felt like building a short-term memory module.

Tools

This was fun. I hand-coded:

- A restaurant recommender
- A reservation tool with multi-turn logic
- An intent detector

Each tool took structured input and returned structured output. No YAML, no config files—just Python functions. And I could debug every line.

The Controller

This was the brainstem. It routed inputs to tools based on detected intent. If something failed? I caught the exception. If the user changed their mind? I had control over how that flowed. No middleware magic—just logic.
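The article does not show its error handling, but catching a failing tool inside the controller can be sketched as a small wrapper. `safe_call`, `flaky_tool`, and the fallback message are hypothetical. The point is that one broken tool call turns into a polite reply instead of crashing the conversation loop.

```python
import logging
from typing import Callable

logger = logging.getLogger("restaurant_agent")

FALLBACK = "Sorry, something went wrong on my side. Could you rephrase that?"


def safe_call(tool: Callable[..., str], *args, **kwargs) -> str:
    """Invoke a tool, logging and absorbing any exception it raises."""
    try:
        return tool(*args, **kwargs)
    except Exception:
        # logger.exception logs at ERROR level and includes the traceback.
        logger.exception("Tool %s failed", getattr(tool, "__name__", tool))
        return FALLBACK


def flaky_tool(user_input: str) -> str:
    raise TimeoutError("model server did not respond")


print(safe_call(flaky_tool, "table for 2"))
```

Inside `process_input`, a call such as `response = safe_call(make_reservation, user_input, context)` would replace the direct tool call.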

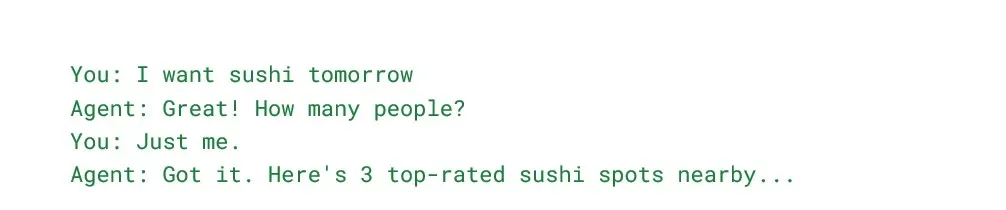

Testing It Live

I fired up a terminal and ran:

```bash
python main.py
```

And I had a conversation like this:

It remembered context. It asked clarifying questions. It made decisions.

And all of it—every step—was mine.

What I Learned

Building an agent from scratch isn’t just doable—it’s enlightening. I understood:

- How LLMs reason
- Why memory matters
- Where planning breaks down
- How tool orchestration changes everything

It gave me full flexibility to swap models, fine-tune prompts, and debug behavior. And now when I use frameworks, I understand what's happening underneath.

What’s Next?

I plan to add:

- Long-term memory with a vector DB
- Multi-modal input (menus, maps)
- More tools: payment, loyalty, dietary filters
- A web UI with streaming responses

And eventually… maybe even a multi-agent setup with specialized sub-agents. One for reservations. One for menu questions. One for loyalty programs. Why not?

Final Thoughts

Frameworks are great when you want speed. But if you want mastery? Build from scratch—at least once.

You’ll learn more in a week of raw code than a month of plug-and-play.

If you’re curious, start with something small. A travel planner. A study buddy. Or a restaurant agent, like I did.

The AI future is agentic—and now, I know how to build it from the ground up.