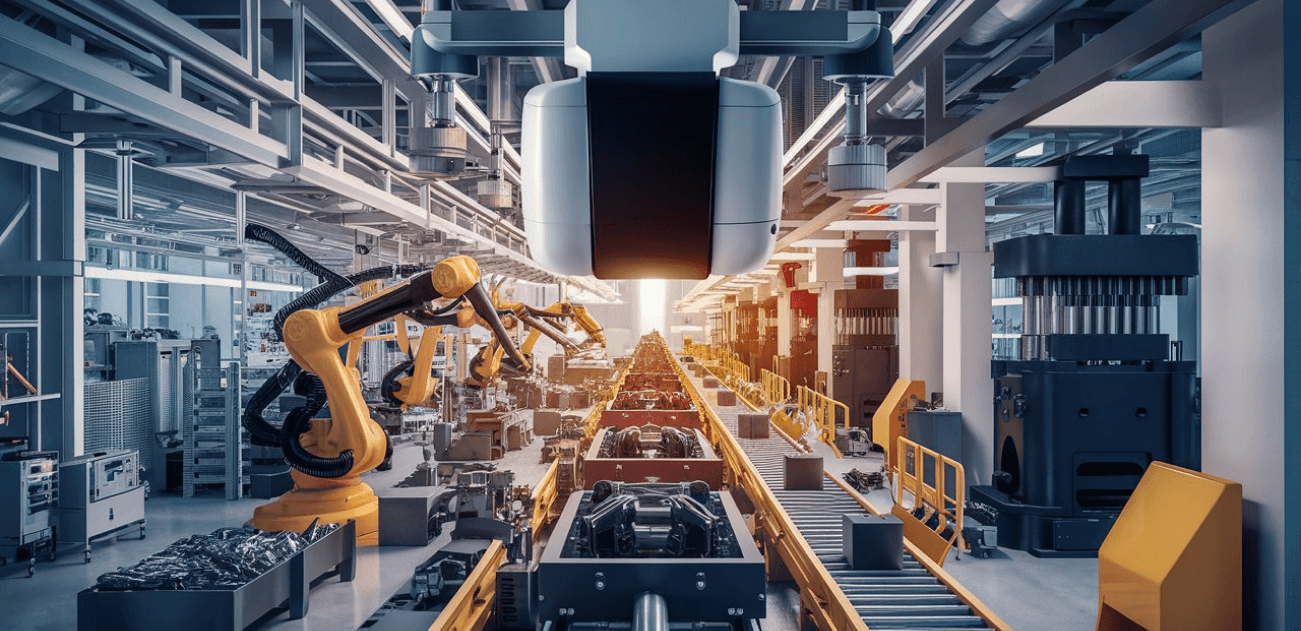

Automated assembly lines are the backbone of mass production, and they require continuous oversight to ensure high-quality output. Traditionally, this oversight relied heavily on manual inspection, which is time-consuming, prone to human error, and costly.

Computer vision allows machines to interpret and analyze visual data, so they can perform tasks that once required human perception. As businesses increasingly automate operations with technologies such as computer vision and robotics, the applications of these technologies are expanding rapidly. This shift is driven by the need to meet rising quality control standards in manufacturing while reducing costs.

Precision in Defect Detection and Quality Assurance using Computer Vision

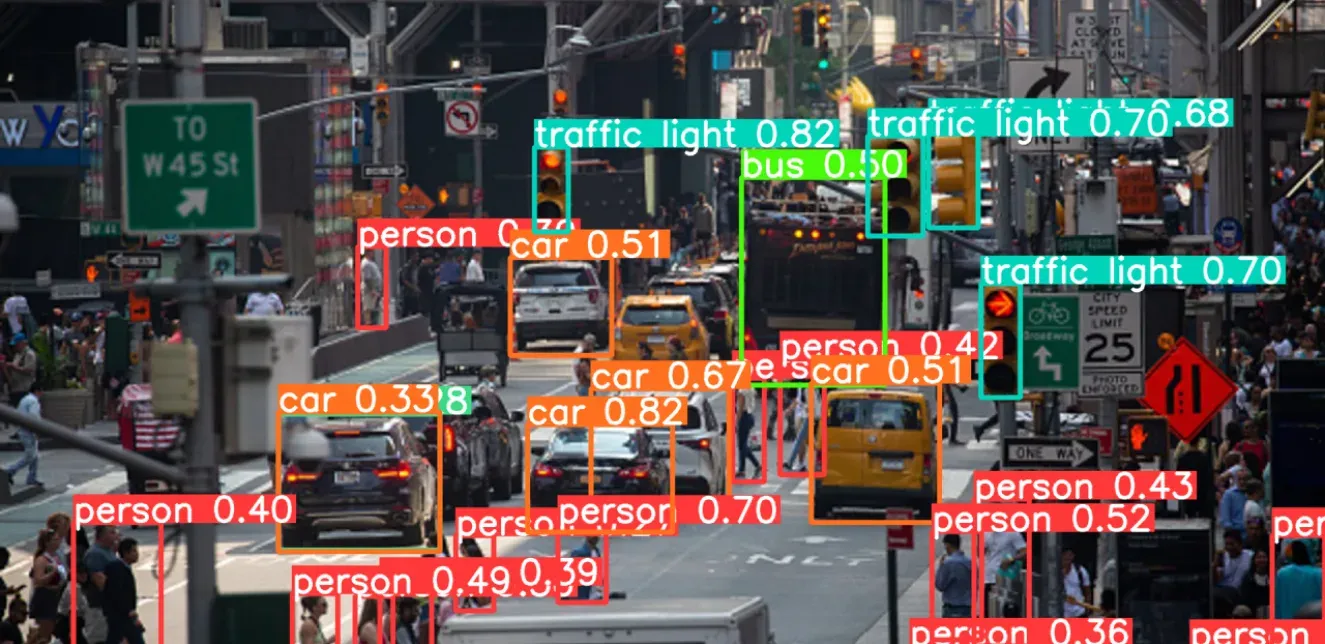

One of the primary contributions of computer vision is precise, automated defect detection. Deep learning models, particularly convolutional neural networks (CNNs), excel at object detection, image processing, and video analytics, and can also assist with data annotation. Using these models, automated systems can identify even the smallest deviations from quality standards, so defective products are caught before they leave the assembly line.
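To make the CNN idea concrete, the sketch below applies a single hand-picked 3×3 convolution kernel (a Laplacian-style edge detector) to a tiny grayscale image and flags pixels with a strong response as candidate scratches. This is an illustrative assumption, not a production detector: a real CNN learns many kernels from labeled images and stacks them in layers, and the kernel, the threshold of 200, and the function names here are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]

# Illustrative 3x3 Laplacian-style kernel: responds strongly where a pixel differs
# from its four neighbors, as along a thin scratch on an otherwise flat surface.
# A CNN would learn many such kernels from labeled images instead.
KERNEL: Image = [
    [0, -1, 0],
    [-1, 4, -1],
    [0, -1, 0],
]


def convolve2d(image: Image, kernel: Image) -> Image:
    """Valid-mode 2D cross-correlation, the operation deep-learning 'convolution' layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def flag_defect_pixels(image: Image, threshold: float = 200.0) -> List[Tuple[int, int]]:
    """Return (row, col) input-image positions whose filter response magnitude exceeds threshold."""
    response = convolve2d(image, KERNEL)
    return [
        (r + 1, c + 1)  # +1 maps each output cell back to the center pixel of its 3x3 window
        for r, row in enumerate(response)
        for c, value in enumerate(row)
        if abs(value) > threshold
    ]


# A 5x5 bright surface (200) with a dark vertical scratch (50) in column 2
surface = [[200, 200, 50, 200, 200] for _ in range(5)]
print(flag_defect_pixels(surface))  # → [(1, 2), (2, 2), (3, 2)]
```

Because the sketch uses valid-mode convolution, border pixels are never window centers and so are not checked; real pipelines usually pad the image to cover the edges.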

Cameras capture each item from multiple angles, and machine learning (ML) algorithms compare the images against acceptance criteria and log the results. This lets the system detect and classify production defects such as scratches, dents, low fill levels, and leaks, and recognize patterns indicative of defects. When the number of faulty items reaches a set threshold, the system alerts the manager or inspector, or even halts production for further inspection. Because the process is automated, it inspects items at high speed and with consistent accuracy. ML also helps reduce false positives: models refined on labeled examples learn to distinguish minor variations within acceptable tolerances from genuine defects.
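The alert-and-halt behavior described above can be sketched as a rolling defect counter. This is a minimal sketch under stated assumptions: the per-item verdicts would come from the vision model, and the window size and the alert and halt counts are hypothetical values, not figures from any specific system.

```python
from collections import deque
from enum import Enum


class LineAction(Enum):
    CONTINUE = "continue"
    ALERT = "alert"  # notify the manager or inspector
    HALT = "halt"    # stop the line for further inspection


class DefectRateMonitor:
    """Tracks the last `window` inspection verdicts and escalates as defects accumulate.

    The default thresholds are illustrative assumptions.
    """

    def __init__(self, window: int = 100, alert_count: int = 5, halt_count: int = 15):
        if not 0 < alert_count <= halt_count <= window:
            raise ValueError("expected 0 < alert_count <= halt_count <= window")
        self.verdicts = deque(maxlen=window)  # True = defective item; oldest drops off
        self.alert_count = alert_count
        self.halt_count = halt_count

    def record(self, is_defective: bool) -> LineAction:
        """Add one verdict and return the action the line controller should take."""
        self.verdicts.append(is_defective)
        defects = sum(self.verdicts)  # bools count as 0/1
        if defects >= self.halt_count:
            return LineAction.HALT
        if defects >= self.alert_count:
            return LineAction.ALERT
        return LineAction.CONTINUE


monitor = DefectRateMonitor(window=10, alert_count=2, halt_count=4)
for verdict in [False, True, False, True, True, True]:
    print(monitor.record(verdict).value)
```

Counting over a rolling window rather than a lifetime total lets the line return to normal once a transient burst of defects has passed.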

For example, detecting poor-quality materials in hardware manufacturing is a labor-intensive, error-prone manual process that often produces false positives. Faulty components detected only at the end of the production line lead to wasted labor, consumables, factory capacity, and revenue. Conversely, defective parts that go undetected can harm customers and market perception, potentially causing irreparable damage to an organization’s reputation. To address this, a study introduced automated defect detection using deep learning. Its computer vision application used a CNN-based object detector to identify defects such as scratches and cracks within milliseconds, with accuracy matching or exceeding that of human inspectors. It also highlights the defect area in each image with a heat map, ensuring unusable products are caught before they proceed to the next production stage.
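A heat map shows which image regions drive a defect prediction. The source does not say how the study computed its heat maps; one common, model-agnostic technique is occlusion sensitivity, sketched below: cover each patch of the image with a neutral value and record how much the defect score drops. The `defect_score` function is a hypothetical stand-in for a trained CNN's output, and the patch size and fill value are assumptions.

```python
from typing import Callable, List

Image = List[List[float]]


def defect_score(image: Image) -> float:
    """Hypothetical stand-in for a CNN's defect probability: the largest deviation
    of any pixel from a nominal gray level of 200, scaled to [0, 1]."""
    return max(abs(p - 200) for row in image for p in row) / 200


def occlusion_heatmap(image: Image, score_fn: Callable[[Image], float],
                      patch: int = 2, fill: float = 200.0) -> Image:
    """Occlusion sensitivity: heat[i][j] is the drop in score when the patch whose
    top-left corner is (i * patch, j * patch) is replaced by `fill`.
    Larger values mark regions the model relies on for its prediction."""
    base = score_fn(image)
    rows, cols = len(image), len(image[0])
    heat: Image = []
    for r0 in range(0, rows, patch):
        heat_row = []
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy so the original image stays intact
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = fill
            heat_row.append(base - score_fn(occluded))
        heat.append(heat_row)
    return heat


# 4x4 surface at gray level 200 with one dark defect pixel in the bottom-right corner
img = [[200] * 4 for _ in range(4)]
img[3][3] = 20
print(occlusion_heatmap(img, defect_score))  # → [[0.0, 0.0], [0.0, 0.9]]
```

Occlusion needs one model evaluation per patch, so gradient-based methods are often preferred for large images; the occlusion version is shown because it works with any black-box scorer.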

In the automotive sector, computer vision systems capture 3D images of components, detect defects, and verify adherence to specifications. Combined with AI algorithms, this setup improves data collection, quality control, and automation, helping operators maintain defect-free assemblies. These systems oversee robotic operations, analyze camera data, and quickly identify faults, enabling immediate corrective action and improving product quality.
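Verifying adherence to specifications often reduces to comparing measured features with nominal values and tolerances. A minimal sketch, assuming the 3D vision system has already extracted named dimensions in millimeters; the feature names, nominal values, and symmetric tolerances are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Spec:
    nominal_mm: float
    tolerance_mm: float  # symmetric tolerance: acceptable range is nominal ± tolerance


def out_of_spec(measured: Dict[str, float], specs: Dict[str, Spec]) -> List[str]:
    """Return the names of features that were not measured or fall outside nominal ± tolerance."""
    failures = []
    for name, spec in specs.items():
        value = measured.get(name)
        if value is None or abs(value - spec.nominal_mm) > spec.tolerance_mm:
            failures.append(name)
    return failures


# Hypothetical specification for a bracket
SPECS = {
    "hole_diameter": Spec(8.0, 0.05),
    "flange_width": Spec(42.0, 0.2),
    "bolt_spacing": Spec(60.0, 0.1),
}

measurement = {"hole_diameter": 8.03, "flange_width": 42.35, "bolt_spacing": 59.95}
print(out_of_spec(measurement, SPECS))  # → ['flange_width']
```

Treating a missing measurement as a failure is a deliberate choice: a feature the camera could not see should not silently pass inspection.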

How Computer Vision Reduces Downtime with Predictive Maintenance

Intelligent automation that combines computer vision with other production data can adjust production parameters as demand fluctuates, reducing waste and optimizing resource utilization. Through continuous learning and adaptation, AI turns assembly lines into data-driven, flexible environments, increasing productivity, cutting costs, and maintaining high manufacturing standards.

Predictive maintenance with AI aims to anticipate and prevent equipment failures by analyzing data from sensors (e.g., vibration, temperature, noise) and from computer vision systems. The vision algorithms assess output by comparing real-time results with historical production data, and they monitor the condition of machinery in real time to detect patterns that indicate wear or impending breakdowns. The primary goal is to schedule maintenance proactively, reducing unplanned downtime and extending equipment lifespan.
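One simple way to turn condition data into a maintenance date is to fit a trend to a wear indicator and extrapolate when it will cross a failure limit. The sketch below assumes a single indicator (for example, a vibration amplitude or a vision-measured wear width) that degrades roughly linearly; the readings, the limit, and the 24-hour safety margin are hypothetical, and production systems typically use richer models.

```python
from typing import List, Optional, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired readings")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("readings must span more than one time point")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_limit(hours: List[float], readings: List[float], limit: float) -> Optional[float]:
    """Extrapolate the linear trend and return the hours remaining after the last
    reading until the indicator reaches `limit`, or None if it is not rising."""
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    crossing_time = (limit - intercept) / slope
    return max(0.0, crossing_time - hours[-1])


def schedule_maintenance(hours: List[float], readings: List[float], limit: float,
                         margin_h: float = 24.0) -> Optional[float]:
    """Recommend servicing `margin_h` hours before the predicted crossing (a hypothetical policy)."""
    remaining = hours_until_limit(hours, readings, limit)
    return None if remaining is None else max(0.0, remaining - margin_h)


# Hypothetical vibration amplitude (mm/s) logged every 10 operating hours; alarm limit 5.0 mm/s
hours = [0, 10, 20, 30]
vibration = [1.0, 1.5, 2.0, 2.5]
print(round(schedule_maintenance(hours, vibration, limit=5.0), 6))  # → 26.0
```

Here the trend rises 0.05 mm/s per hour from 1.0 mm/s, so the limit is reached at hour 80, 50 hours after the last reading; with the margin, service is recommended in about 26 hours.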

Volkswagen exemplifies the use of computer vision in manufacturing to optimize assembly lines. The company uses AI-driven solutions to improve the efficiency and quality of its production processes: by analyzing sensor data from the assembly line, its ML algorithms predict maintenance needs and help streamline operations.

Transforming Real-World Manufacturing Simulations with Digital Twins

ML enables highly accurate simulations by using real production data to model process changes, upgrades, or new equipment. These models can span a factory’s processes, mimicking the entire production line or specific sections of it. Instead of running physical experiments, manufacturers can use ML-driven, data-based simulations as close approximations of the real line, testing and adjusting changes before committing to real-world trials.
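The idea of testing a change in simulation before a real trial can be sketched with a small Monte Carlo model of a line. Assumptions, all hypothetical: the line is synchronous with no buffers, so each cycle lasts as long as its slowest station; each station's cycle time follows a normal distribution whose mean and standard deviation are estimated from logged data; and the station names and numbers are illustrative. A real digital twin would also model buffers, breakdowns, and changeovers.

```python
import random
import statistics
from typing import Dict, List, Tuple

Station = Tuple[float, float]  # (mean cycle time in seconds, standard deviation)


def fit_station(samples: List[float]) -> Station:
    """Estimate a station's cycle-time distribution from logged cycle times."""
    return statistics.mean(samples), statistics.stdev(samples)


def simulate_throughput(stations: Dict[str, Station], shift_s: float = 8 * 3600,
                        seed: int = 42) -> int:
    """Count units completed in one shift on a synchronous line without buffers:
    each cycle lasts as long as the slowest station in that cycle."""
    rng = random.Random(seed)  # fixed seed so scenarios are compared on repeatable draws
    elapsed, units = 0.0, 0
    while True:
        # Clamp at 0.1 s so a rare negative normal draw cannot produce a nonsensical cycle
        cycle = max(max(0.1, rng.gauss(mu, sigma)) for mu, sigma in stations.values())
        if elapsed + cycle > shift_s:
            return units
        elapsed += cycle
        units += 1


if __name__ == "__main__":
    # Hypothetical current line, with the weld station fitted from logged cycle times
    current = {
        "press": (30.0, 2.0),
        "weld": fit_station([36.5, 39.0, 38.2, 40.1, 37.4]),
        "paint": (32.0, 2.0),
    }
    # Hypothetical upgrade: a faster welding cell
    proposed = dict(current, weld=(33.0, 2.0))
    print("current:", simulate_throughput(current))
    print("proposed:", simulate_throughput(proposed))
```

Running both scenarios with the same seed keeps random variation from masking the effect of the change, which is the point of comparing alternatives in simulation first.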

For example, a digital twin was applied to optimize quality control on an assembly line for a model rocket. The system focused on detecting assembly faults in a four-part model rocket and triggering autonomous corrections. The line featured five industrial robotic arms and an edge device connected to a programmable logic controller (PLC) for data exchange with cloud platforms. Deep learning computer vision models, such as convolutional neural networks (CNNs), were used for image classification and segmentation. These models efficiently classified objects, identified assembly errors, and planned paths for autonomous correction, minimizing the need for human intervention and disruption to manufacturing operations. The system also aimed to make real-time adjustments to keep the manufacturing process running smoothly.
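The detect-and-correct loop in this case study can be sketched at the planning level: compare the part the vision model classified in each slot with the expected assembly order, then emit correction commands for the robot arms. Everything here is hypothetical (the part names, the `Swap` command, and the swap-based correction strategy); the source does not describe the study's actual path scheduling or PLC interface.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical expected stacking order for a four-part model rocket, one part per slot
EXPECTED = ["nose_cone", "payload_section", "body_tube", "fin_can"]


@dataclass(frozen=True)
class Swap:
    """A correction instruction: exchange the parts in two slots (hypothetical robot command)."""
    slot_a: int
    slot_b: int


def plan_corrections(detected: List[str], expected: List[str] = EXPECTED) -> List[Swap]:
    """Given the part the vision model classified in each slot, return swaps that
    restore the expected order. Missing, duplicated, or foreign parts cannot be
    fixed by swapping, so they are escalated instead."""
    if sorted(detected) != sorted(expected):
        raise ValueError("missing or unexpected part: escalate to an operator")
    current = list(detected)
    swaps: List[Swap] = []
    for slot, wanted in enumerate(expected):
        if current[slot] != wanted:
            src = current.index(wanted, slot + 1)  # earlier slots are already correct
            swaps.append(Swap(slot, src))
            current[slot], current[src] = current[src], current[slot]
    return swaps


# The vision model reports the payload section and body tube in each other's slots
print(plan_corrections(["nose_cone", "body_tube", "payload_section", "fin_can"]))
# → [Swap(slot_a=1, slot_b=2)]
```

Escalating anything that is not a simple reordering mirrors the goal of minimizing, rather than eliminating, human involvement.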

In conclusion, integrating computer vision into automated assembly lines significantly improves manufacturing standards by enabling precise defect detection, stronger predictive maintenance, and real-time adjustments. This transformation optimizes resource utilization, reduces costs, and positions manufacturers to consistently deliver high-quality products, helping them maintain a competitive edge in the industry.

Explore the transformative potential of computer vision for your assembly line operations. Contact Random Walk today for expert AI integration and advanced computer vision services customized to enhance your manufacturing processes.