Human Resources Management Systems (HRMS) often struggle with efficiently managing and retrieving valuable information from unstructured data, such as policy documents, emails, and PDFs, while ensuring the integration of structured data like employee records. This challenge limits the ability to provide contextually relevant, accurate, and easily accessible information to employees, hindering overall efficiency and knowledge management within organizations.

GuideLine is an enterprise knowledge management system dedicated to human resources management (HRMS).GuideLine is an extension of our flagship product - Fortune Cookie. GuideLine links unstructured data in the form of policy documents, PDFs and emails into a comprehensive queryable knowledge database. Structured data in the form of employee records can be combined with unstructured data to provide valuable information, specific to employees. GuideLine uses RAG to produce answers that are accurate, context aware and informative.

GuideLine integrates a voice-enabled chatbot into HRMS, thereby combining the power of automation with the natural, interactive process enabling users to directly interact and understand what different HR policies mean specifically for them. This tech not only streamlines the HR processes but enhances the employee experience, making the HRMS more efficient, accessible, and cost-effective.

Features of GuideLine

RAG enhanced GuideLine, can handle more nuanced, detailed and context-driven queries compared to traditional rule-based or FAQ-based chatbots. RAG allows the chatbot to:

Access up-to-date HR Policies: Traditional HR chatbots rely on static knowledge bases or predefined decision trees, which may become outdated as HR policies evolve. With RAG, the chatbot can dynamically retrieve information, ensuring the chatbot retrieves the most relevant and recent version of the company’s HR policies, ensuring employees get up-to-date information.

Example:

Employee: “How many public holidays are there this year and what are they?”

GuideLine:

Retrieves: The relevant section of the employee handbook and list of holidays for 2024

Generates: Based on the provided context, I can see that it mentions "Public Holidays" for which employees are entitled to 8 days each year. The list of Public Holidays is not explicitly mentioned in the query.

However, from general knowledge, I know that some of the public holidays in India include:

New Year's Day

Pongal (January or February)

Republic Day

Good Friday

Ramzan (Eid'l Fitr) - usually in April or May

Eid-Ul-Fitr (usually in June or July)

Independence Day

Gandhi Jayanti

Ayudha Pooja (October)

Deepavali (usually in October)

Personalized Employee Interactions: Unlike generic responses from regular chatbots, through API integrations, RAG allows GuideLine to tailor its responses to individual employees by retrieving personal data like their leave balance, salary details, or attendance records, thus generating a response that is specific to their query.

Example:

Employee: “How many casual leaves do I have?”

GuideLine:

Retrieves: The relevant portion of the employee handbook on leave policy

Generates: Based on the context provided by the rules about Casual Leave, you can calculate the number of Casual Leaves you are eligible for.

You start with 12 days of Casual Leave in a year. This is mentioned in rule 6.3: “Employees are eligible for 12 days of Casual Leave in a year.”

You cannot carry forward your Casual Leave to next year (rule 1), and you must use up all your Casual Leaves before they can be carried forward or clubbed with other leaves.

Given the information provided, you have used up all your Casual Leave.

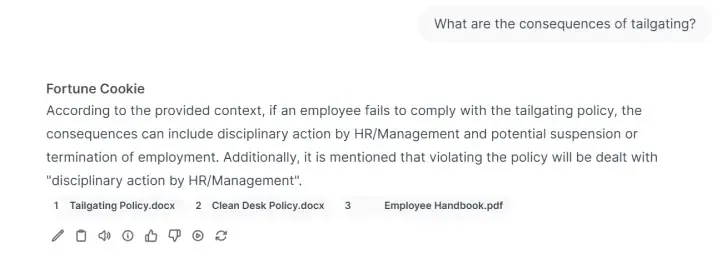

Handling Complex HR Queries: Certain HR queries require the understanding and processing of multiple documents or datasets. For example, understanding the consequences of tailgating requires understanding the meaning of tailgating followed by the company policy on penalizing those who are tailgating on a regular basis. GuideLine can retrieve multiple relevant documents like the employee handbook and the tailgating policy, integrate them, and generate a comprehensive response.

Example:

Employee: “What are the consequences of tailgating?”

GuideLine:

Retrieves: The tailgating policy and the employee handbook

Generates: According to the context, Rule 4 states: "Tailgating is strictly prohibited. Violation will be dealt with disciplinary action by HR/Management." This suggests that the consequence of tailgating is disciplinary action taken by HR and Management.

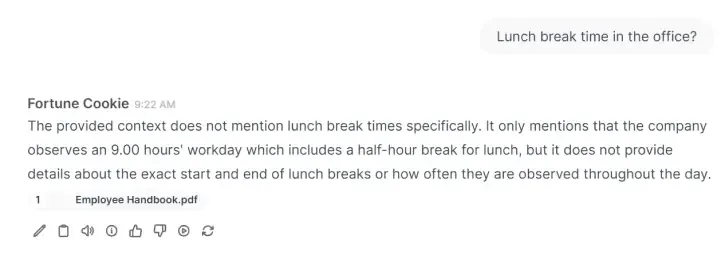

Enhanced Search Capabilities: RAG allows GuideLine to go beyond keyword matching, making the search functionality more intelligent. For instance, if an employee asks a policy-related question with complex phrasing or incomplete phrasing or a question with grammatical errors, GuideLine can still understand the user intent, and retrieve the correct document and provide the accurate response.

Example:

Employee: "Can I carry over unused vacation days to the next year?"

GuideLine:

Retrieves: The relevant sections from the company’s vacation policy.

Generates: "The rules section mentions that "Unused CL cannot be carried forward to next year".

Employee: “Lunch break time in the office?”

GuideLine:

Retrieves: The relevant sections from the company policy

Generates: The provided context does not mention lunch break times specifically. It only mentions that the company observes a 9.00 hours' workday which includes a half-hour break for lunch, but it does not provide details about the exact start and end of lunch breaks or how often they are observed throughout the day.

How RAG Works

Retriever

The retriever in a RAG model uses semantic search techniques to identify the most relevant documents from a large corpus of data. These can be documents stored in a company’s HR system, cloud storage, or even public databases. Technologies like Dense Passage Retrieval (DPR) or BM25 are commonly used to achieve this, allowing for more accurate information retrieval than traditional keyword search.

Generator

Once the relevant information is retrieved, the generator component (often powered by a model like GPT-4, Llama or other transformer-based models) synthesizes this information into a coherent, conversational response. The key here is that the generator doesn’t just parrot back information—it rephrases and contextualizes it for the user’s specific query, making the interaction more fluid and natural.

Retrieval-Generation Cycle

When GuideLine receives a query:

Query Understanding: The system first processes the query to understand its intent.

Document Retrieval: The retriever identifies the most relevant documents or sections of documents from a database.

Response Generation: The generator reads through the retrieved documents and creates a coherent response, merging the retrieved facts with a conversational tone.

Output: The chatbot delivers the final response to the user.

This cycle allows for more accurate, contextual, and up-to-date responses than a system relying solely on pre-programmed rules or FAQ-style queries.

Are you interested in exploring how GuideLine can empower your business? Randomwalk AI offers a suite of AI integration services and solutions designed to enhance enterprise communication, content creation, and data analysis. Book a consultation with us today and learn how we can help your organization unlock the power of AI.