The roar of the crowd, the crack of the bat, the sea of yellow jerseys: the Indian Premier League (IPL) is an amazing spectacle. Behind the on-field drama, however, another high-stakes game was being played at Chennai's Chepauk stadium during the 2025 season. That season, our technical team had the opportunity to work with the Greater Chennai Police and the Chennai Super Kings (CSK) to reimagine stadium security by integrating cutting-edge AI with real-time monitoring. This is an account of how we turned a great idea into a comprehensive surveillance solution.

The Spark: Creating a Proof of Concept in the Heart of the Action

Our mission began three days before the season's opening ball. We weren't simply watching from the stands; we were embedded in the Chepauk stadium's CCTV control and monitoring room. Our MVP? A beast of a server powered by an RTX 4090, able to handle massive data streams. We pieced together our initial Proof of Concept (POC) with tremendous help from the stadium staff, who quickly met our requirements for high-throughput broadband, backup systems, and all the essential hardware.

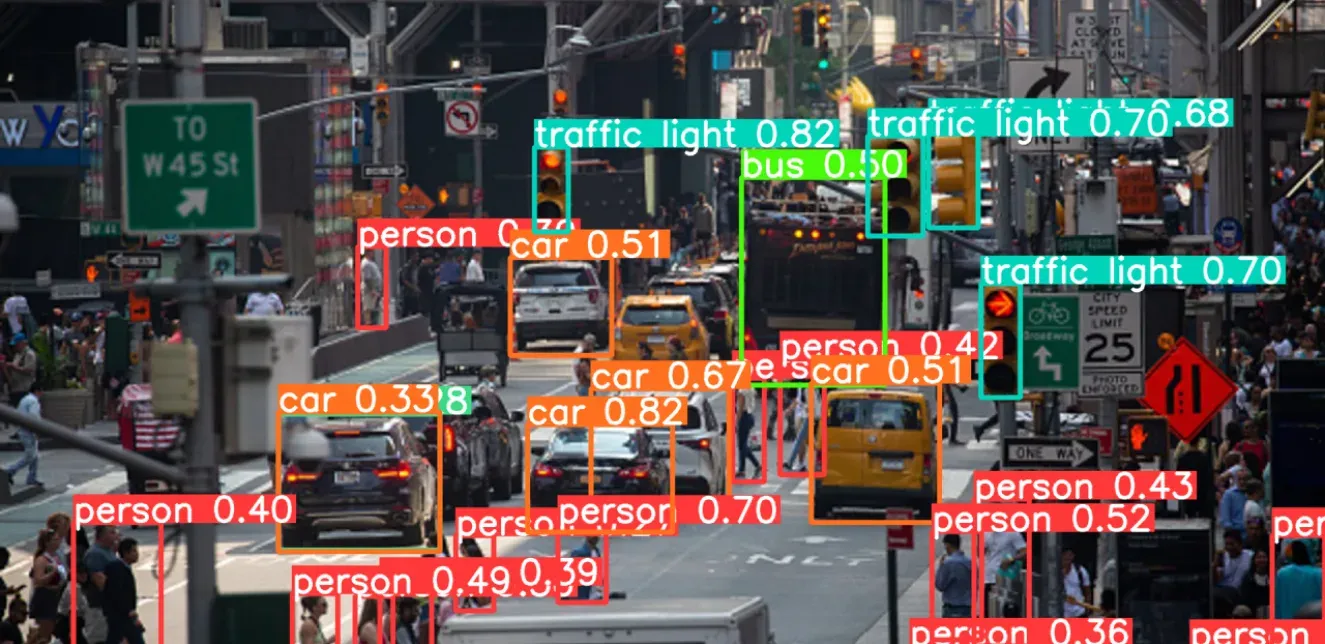

We stood on the shoulders of open-source giants like Frigate and go2rtc. These tools let us efficiently process a crucial subset of the camera streams: we downsampled the footage, detected motion in it, and then tracked and categorized the moving objects.

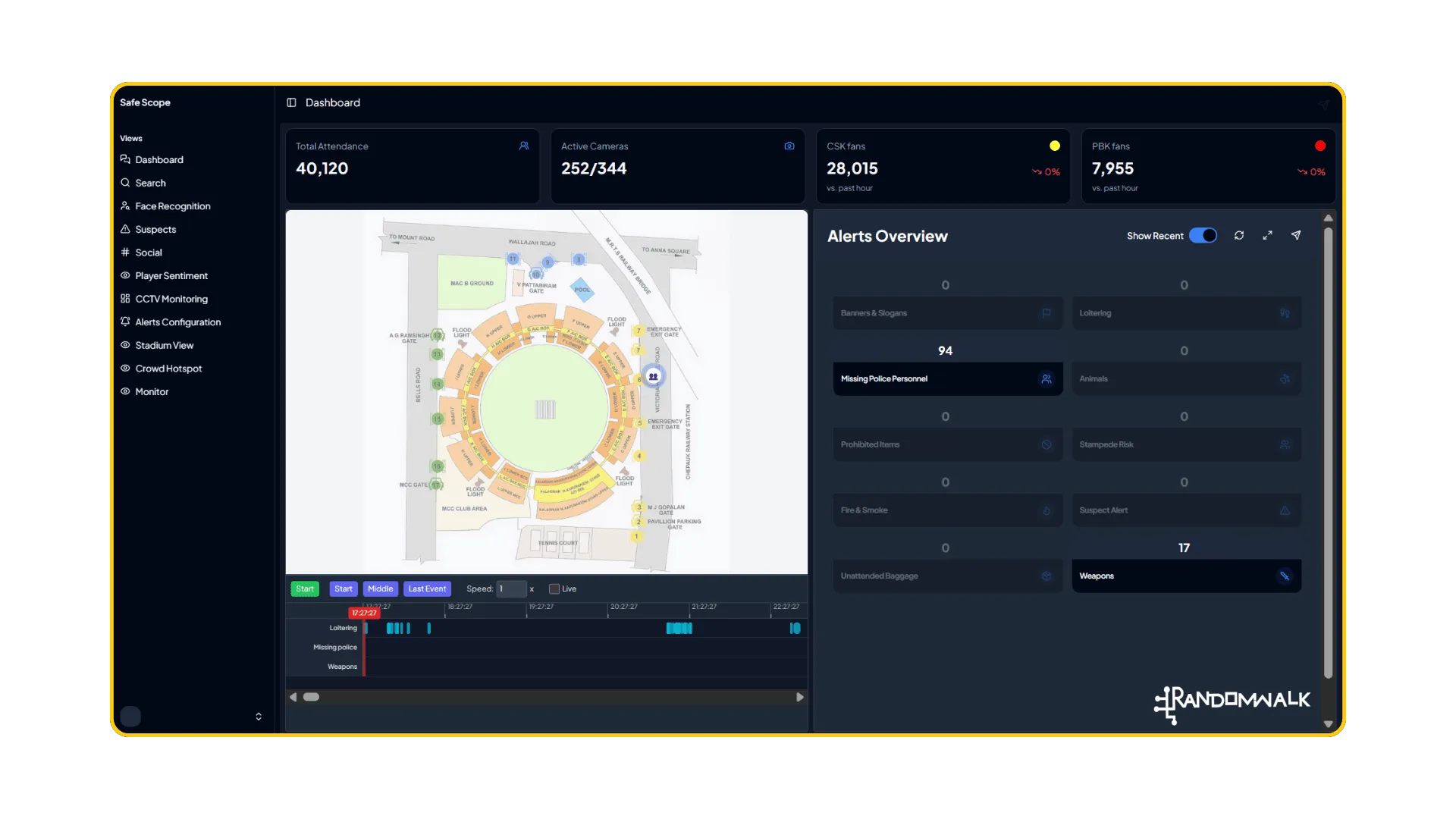
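
Frigate performs motion detection internally, so the following is only an illustration of the general idea, not Frigate's implementation. It assumes decoded grayscale frames represented as nested lists of pixel intensities, downsamples them by block averaging, and flags motion when a large enough fraction of pixels changes between consecutive frames. The function names and thresholds are hypothetical.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixel intensities, 0-255


def downsample(frame: Frame, factor: int) -> Frame:
    """Shrink a frame by averaging each factor x factor block of pixels."""
    height, width = len(frame), len(frame[0])
    out: Frame = []
    for y in range(0, height - height % factor, factor):
        row = []
        for x in range(0, width - width % factor, factor):
            block = [frame[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) // len(block))
        out.append(row)
    return out


def motion_mask(prev: Frame, curr: Frame, threshold: int = 25) -> List[List[bool]]:
    """Mark pixels whose intensity changed by more than `threshold` between frames."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def has_motion(prev: Frame, curr: Frame, factor: int = 2,
               threshold: int = 25, min_changed: float = 0.01) -> bool:
    """Downsample both frames, then report motion if enough pixels changed."""
    mask = motion_mask(downsample(prev, factor), downsample(curr, factor), threshold)
    changed = sum(cell for row in mask for cell in row)  # True counts as 1
    total = sum(len(row) for row in mask)
    return total > 0 and changed / total >= min_changed
```

Comparing downsampled frames keeps the per-frame cost low, which makes it practical to screen many streams cheaply and reserve heavier object detection for frames that actually contain motion.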

Our custom-trained detection labels could even distinguish a "CSK fan," an "RCB fan," or "TN police," giving us granular insight into crowd dynamics. The lifecycle data of each tracked object (when it appeared, how it moved, and when it left) became the bedrock for detecting crowd anomalies and calculating net headcount (influx versus exodus), giving us unprecedented situational awareness from day one.
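
Net headcount can be derived from object lifecycles in several ways. One minimal sketch, assuming each track is a time-ordered list of centroids and that a horizontal virtual gate line separates "outside" from "inside", counts line crossings in each direction. The `Track` type, the gate position, and the convention that moving downward means entering are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Track:
    """Lifecycle of one tracked object: its label and centroid positions over time."""
    track_id: int
    label: str
    centroids: List[Tuple[float, float]]  # (x, y) per frame, in pixel coordinates


def count_crossings(track: Track, gate_y: float) -> Tuple[int, int]:
    """Count (entries, exits) across a horizontal gate line at y = gate_y.

    Assumption: crossing downward means entering, crossing upward means leaving.
    """
    entries = exits = 0
    for (_, y0), (_, y1) in zip(track.centroids, track.centroids[1:]):
        if y0 < gate_y <= y1:
            entries += 1
        elif y1 < gate_y <= y0:
            exits += 1
    return entries, exits


def net_headcount(tracks: List[Track], gate_y: float) -> Dict[str, int]:
    """Aggregate influx, exodus, and net change across all tracks."""
    influx = exodus = 0
    for track in tracks:
        entered, left = count_crossings(track, gate_y)
        influx += entered
        exodus += left
    return {"influx": influx, "exodus": exodus, "net": influx - exodus}
```

Because the count is computed per track rather than per frame, a person lingering near the gate is not counted repeatedly unless they actually cross the line again.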

Scaling Up: Earning Trust and Expanding Capabilities

Our initial success quickly caught the attention of the police department's senior leadership. Impressed by what the system could do, they entrusted us with a broader scope. Over the following weeks, we steadily enhanced the system. We introduced motion filters and motion zones to cut through the noise, which let us categorize motion more accurately: identifying loitering, potential crowd anomalies, and other critical events with greater precision.
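
A zone-based loitering rule can be sketched as follows. It assumes a zone is a polygon in image coordinates and that loitering means a tracked object's centroid stays inside the zone continuously for a minimum duration. The helper names and the threshold are hypothetical, not Frigate settings.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(pt: Point, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: is `pt` inside the zone described by `polygon`?"""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from pt toward +x cross the edge (x1, y1)-(x2, y2)?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def is_loitering(observations: List[Tuple[float, Point]], zone: Sequence[Point],
                 min_seconds: float) -> bool:
    """Flag a track that stays inside `zone` continuously for at least `min_seconds`.

    `observations` is a time-ordered list of (timestamp_seconds, centroid).
    """
    entered_at: Optional[float] = None
    for ts, centroid in observations:
        if point_in_polygon(centroid, zone):
            if entered_at is None:
                entered_at = ts
            if ts - entered_at >= min_seconds:
                return True
        else:
            entered_at = None  # the object left the zone, so reset the timer
    return False
```

Requiring continuous presence means someone who briefly passes through a zone twice is not flagged, which is one way to reduce false alarms in busy concourses.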

Our system's reach extended beyond general surveillance, and we also built use cases for specific teams. Since we could already tell which team a fan supported from their jersey colors, we took it a step further: by scraping Twitter for live opinions matching player-name keywords, we tracked player sentiment trends as matches unfolded. This added a fascinating new dimension to understanding the fan experience and how fans perceived player performance in real time.
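
The sentiment model behind this feature is not described here, so the sketch below substitutes a deliberately tiny word lexicon to show only the aggregation logic: match posts to players by keyword, score each post, and sum the scores into fixed time buckets so trends can be plotted as a match unfolds. Scraping is out of scope; `posts` is assumed to already hold (timestamp, text) pairs, and the lexicon, keywords, and bucket size are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical mini-lexicon standing in for a real sentiment model.
LEXICON = {"brilliant": 1, "superb": 1, "great": 1, "century": 1,
           "poor": -1, "dropped": -1, "awful": -1}


def score_text(text: str) -> int:
    """Sum the lexicon scores of the words in one post."""
    return sum(LEXICON.get(word, 0) for word in re.findall(r"[a-z]+", text.lower()))


def sentiment_trend(posts: Iterable[Tuple[float, str]], players: Dict[str, List[str]],
                    bucket_seconds: int = 300) -> Dict[str, Dict[int, int]]:
    """Aggregate sentiment per player per time bucket.

    `posts` holds (timestamp_seconds, text); `players` maps a player to the
    keywords (names, nicknames) that identify posts about them.
    """
    trend: Dict[str, Dict[int, int]] = defaultdict(lambda: defaultdict(int))
    for ts, text in posts:
        lowered = text.lower()
        bucket = int(ts // bucket_seconds)
        for player, keywords in players.items():
            if any(k.lower() in lowered for k in keywords):
                trend[player][bucket] += score_text(text)
    return {player: dict(buckets) for player, buckets in trend.items()}
```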

Innovation in Action: QR Codes, Cloud Scaling, and the Quest for Identity

While our AI was making great progress, we realized that automation could not be the only solution. To close potential gaps and empower the public, we created a QR-based reporting system. The QR codes linked to a Telegram bot that let spectators raise complaints instantly. AI then triaged these reports by category and severity before routing them to a dedicated police team ready to resolve issues on the spot.
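
The deployed system used AI for triage. As a simplified stand-in, the sketch below uses a keyword rule table to show the shape of the step: assign each complaint a category and severity, then order the queue so the most severe tickets reach the police team first. The categories, keywords, and severity levels are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical rule table standing in for the AI classifier: (category, keywords, severity).
RULES = [
    ("medical", ("faint", "injured", "bleeding", "unconscious"), "high"),
    ("theft", ("stolen", "pickpocket", "snatched", "missing wallet"), "high"),
    ("crowding", ("crowd", "pushing", "stampede", "blocked"), "medium"),
    ("facilities", ("water", "toilet", "seat", "light"), "low"),
]
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2}


@dataclass
class Ticket:
    text: str
    stand: str
    category: str
    severity: str


def triage(text: str, stand: str) -> Ticket:
    """Assign the most severe matching category, falling back to 'other'/'low'."""
    lowered = text.lower()
    best_category, best_severity = "other", "low"
    matched = False
    for category, keywords, severity in RULES:
        if any(k in lowered for k in keywords):
            if not matched or SEVERITY_ORDER[severity] > SEVERITY_ORDER[best_severity]:
                best_category, best_severity = category, severity
                matched = True
    return Ticket(text, stand, best_category, best_severity)


def dispatch_order(tickets: List[Ticket]) -> List[Ticket]:
    """Most severe first; sorted() is stable, so equal severities keep arrival order."""
    return sorted(tickets, key=lambda t: -SEVERITY_ORDER[t.severity])
```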

As the season progressed, so did our goals. In the second phase, we tackled two big challenges: expanding the system to handle considerably more camera feeds than our initial subset, and addressing one of the holy grails of automated surveillance, namely tagging a person's identity and tracking them across multiple cameras.

Scaling was accomplished through a multi-pronged approach that included running several Frigate instances on distributed cloud hardware, building an efficient data aggregation gateway, and using a shared network volume, among other innovations.

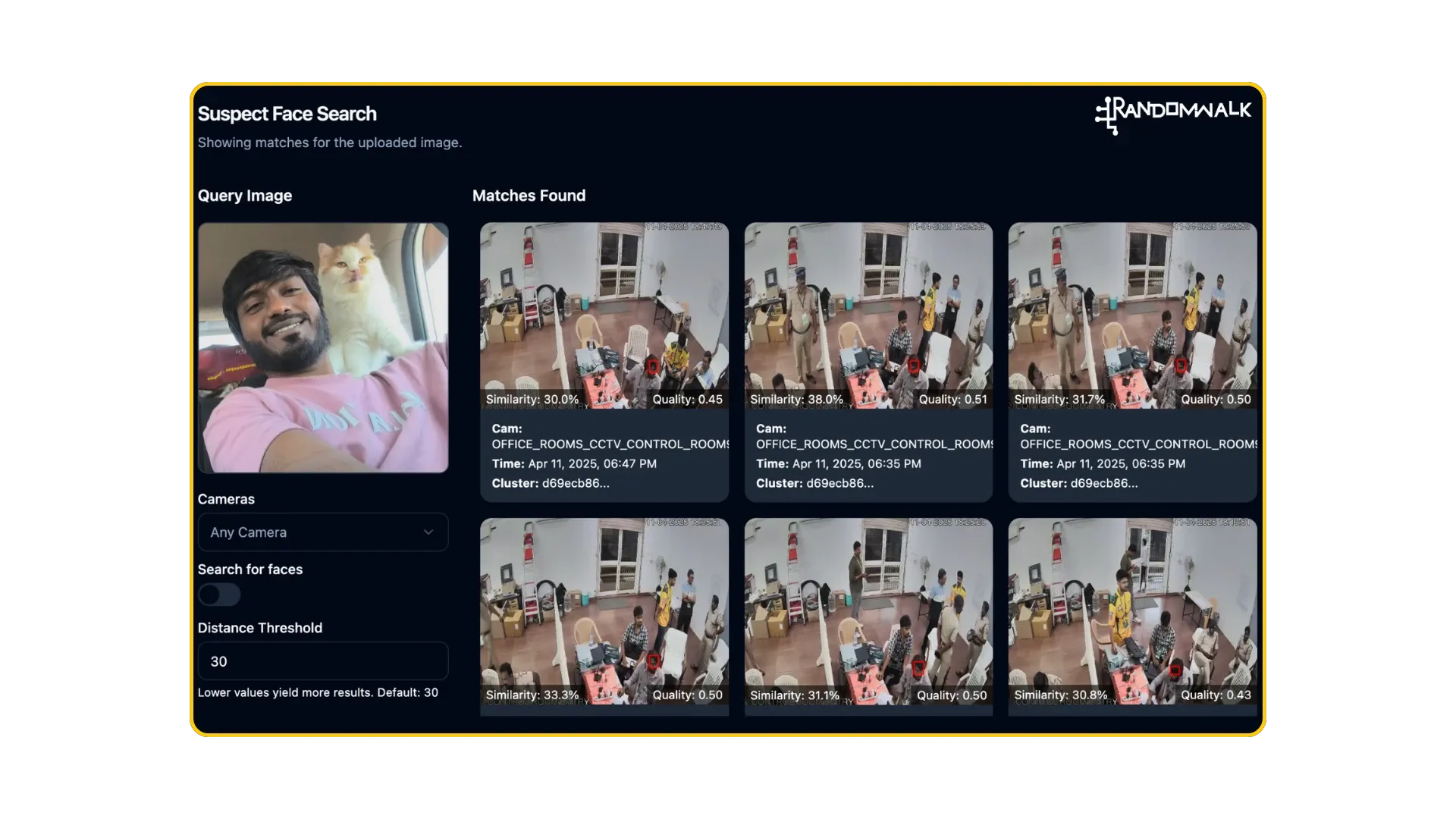
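
One way to picture the aggregation gateway is as a merge step. The sketch assumes each Frigate instance's events arrive as dicts sorted by `start_time` (the field names are illustrative, not a guaranteed schema) and that media such as snapshots sit on the shared network volume, so the gateway only moves metadata. It namespaces event IDs per instance, interleaves the streams into one timeline, and drops duplicates. The instance names and event shape are hypothetical.

```python
import heapq
from typing import Dict, Iterable, Iterator, List

Event = Dict[str, object]


def tag_events(instance: str, events: Iterable[Event]) -> Iterator[Event]:
    """Namespace event IDs by instance so IDs from different servers never collide."""
    for event in events:
        tagged = dict(event)
        tagged["id"] = f"{instance}:{event['id']}"
        tagged["instance"] = instance
        yield tagged


def aggregate(streams: Dict[str, List[Event]]) -> List[Event]:
    """Merge per-instance event lists (each sorted by start_time) into one timeline."""
    tagged_streams = [tag_events(name, events) for name, events in streams.items()]
    merged = heapq.merge(*tagged_streams, key=lambda e: e["start_time"])
    seen = set()
    timeline = []
    for event in merged:
        if event["id"] not in seen:  # drop duplicates, e.g. from retried fetches
            seen.add(event["id"])
            timeline.append(event)
    return timeline
```

`heapq.merge` consumes the already-sorted inputs lazily, so the gateway never needs to re-sort the full event history when another instance is added.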

The identity challenge was significantly more complicated. A two-week break between matches gave us the critical window we needed. Our team worked intensively to build a distributed microservices architecture that could accurately detect faces within motion detections classified as people. These faces were then tracked with Norfair, and their facial features were extracted as fixed-size vector embeddings with Buffalo V2. This opened the door to a slew of new applications.
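
Norfair handles tracking within each camera; the step that links per-camera face tracks into cross-camera identities can be sketched as below. It assumes each track yields one or more fixed-size embeddings, that cosine similarity is the comparison metric (as is usual for face-recognition embeddings), and that the threshold is a hypothetical value needing tuning. The test uses 3-dimensional toy vectors, and `IdentityRegistry` is an illustrative name.

```python
import math
from typing import Dict, List, Optional, Sequence

Vector = List[float]


def normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def track_embedding(face_embeddings: List[Sequence[float]]) -> Vector:
    """Summarize one per-camera face track as the mean of its normalized embeddings."""
    unit = [normalize(e) for e in face_embeddings]
    return normalize([sum(col) / len(unit) for col in zip(*unit)])


class IdentityRegistry:
    """Assign global person IDs by matching track embeddings across cameras."""

    def __init__(self, threshold: float = 0.5):
        self.threshold = threshold  # hypothetical similarity cut-off
        self.identities: Dict[int, Vector] = {}

    def assign(self, embedding: Sequence[float]) -> int:
        best_id: Optional[int] = None
        best_sim = self.threshold
        for pid, ref in self.identities.items():
            sim = cosine(embedding, ref)
            if sim >= best_sim:
                best_id, best_sim = pid, sim
        if best_id is None:  # no known identity is similar enough: register a new one
            best_id = len(self.identities)
            self.identities[best_id] = normalize(embedding)
        return best_id
```

Averaging several frames per track smooths out poor individual crops (blur, partial occlusion) before matching. This sketch keeps each identity's first reference vector; a production system might update it as more views arrive.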

Suspicious individuals linked to anomaly alerts could now be identified. If the police provided images or sketches, we could compare them against our database of face embeddings, and even against general snapshot embeddings (helpful for queries like "individual wearing all black with a trenchcoat and a fedora"). Because we could match both text and image queries, we could quickly retrieve relevant events and the identities associated with them.
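
The retrieval step can be sketched as follows, assuming the query vectors are already computed (a face embedding from a police photo, or a text or image embedding in the same space as the snapshot embeddings). Each index is ranked by cosine similarity, and the face and snapshot result lists are then merged with reciprocal rank fusion. Reciprocal rank fusion is a swapped-in technique chosen for illustration, `k=60` is its commonly used constant, and the index layout and event IDs are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: Sequence[float], index: Dict[str, List[float]], k: int = 10) -> List[str]:
    """Return the IDs of the k events whose embeddings are most similar to the query."""
    return sorted(index, key=lambda event_id: -cosine(query, index[event_id]))[:k]


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse ranked lists of event IDs; each list adds 1 / (k + rank) per event (rank from 1)."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, event_id in enumerate(ranking, start=1):
            scores[event_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda e: (-scores[e], e))  # ties broken by ID
```

Fusing ranks rather than raw similarity scores sidesteps the fact that face and snapshot embeddings produce similarities on different scales; an event that ranks well in both lists rises to the top.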

The Human Element: Impact and User-Centric Design

The results of these efforts were tangible. By combining reports coming in directly from spectators with our AI's constant monitoring and tracking, and with the unwavering cooperation of the police, we helped uncover and apprehend pickpockets, a clear win for public safety (as also reported by the Indian Express: https://indianexpress.com/article/sports/ipl/ipl-2025-csk-chepauk-snatching-recovery-chennai-police-singam-app-9955863/).

However, complex backend technology is meaningless unless it is accessible and intuitive to end users. Countless overtime hours and Red Bull-fueled all-nighters (not sponsored… yet) went into developing the user interface. We customized timeline scrubbing components, incorporated images of the current team player lineups, overlaid maps with alert and camera data, and added a plethora of other visual elements. The goal was to ensure that the ultimate stakeholders, the security staff, were not only informed but also empowered and impressed within the first five seconds of using it.

The Final Whistle (For Now)

Our journey with the IPL and CSK during the 2025 season was more than just an engineering project; it was a testament to the power of collaboration, innovation, and a relentless drive to solve real-world problems. From a fledgling POC to a sophisticated, multi-faceted surveillance ecosystem, we demonstrated how AI can transform security operations in complex, dynamic environments. The lessons learned and the technology developed at Chepauk have laid the groundwork for even more ambitious endeavors in the future. The game, it seems, is just getting started.