Managing thousands of distributed computing devices, each handling critical real-time data, presents a significant challenge: ensuring seamless operation, robust security, and consistent performance across the entire network. As these systems grow in scale and complexity, traditional monitoring methods often fall short, leaving organizations vulnerable to inefficiencies, security breaches, and performance bottlenecks. Edge system monitoring emerges as a transformative solution, offering real-time visibility, proactive issue detection, and enhanced security to help businesses maintain control over their distributed infrastructure.

The Edge Computing Challenge

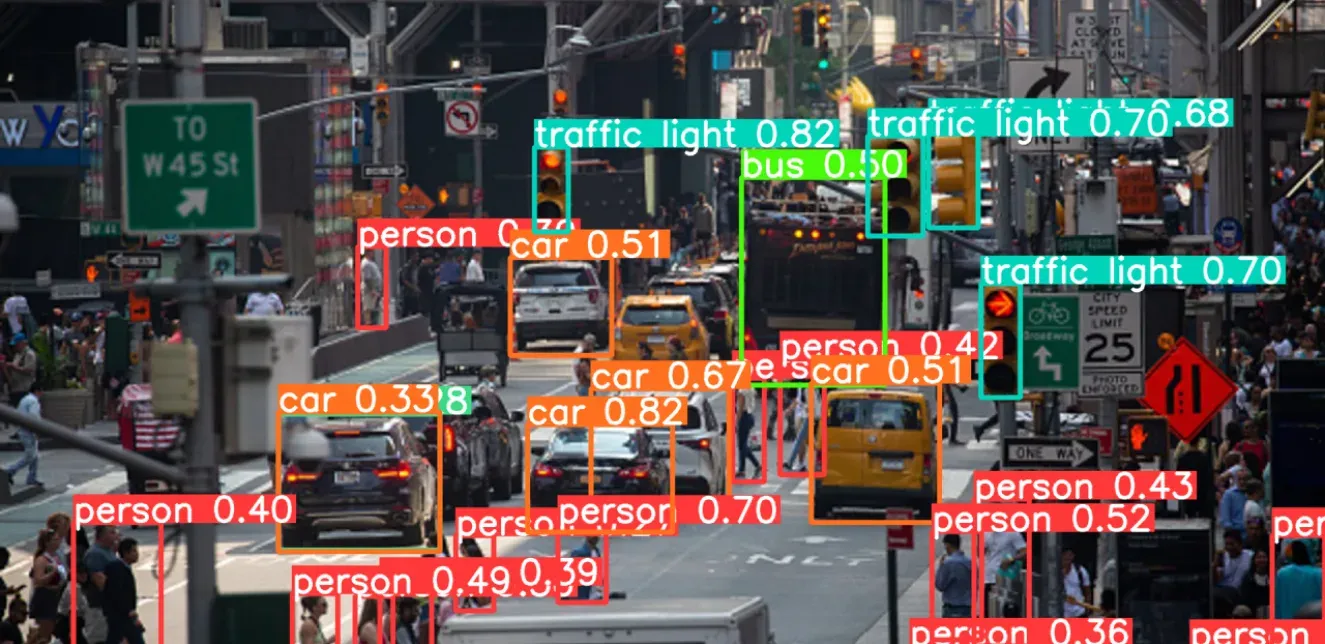

Consider the scale of modern edge deployments: a retail chain with 10,000 stores, each using smart cameras to analyze customer behavior, or a network of fuel stations processing video feeds for safety and compliance. Each location relies on edge devices running sophisticated AI models, processing data locally, and sending insights to the cloud. Managing such vast networks efficiently and reliably is no small feat.

For instance, a major metropolitan transit system recently deployed AI-enabled cameras across 500 stations to monitor passenger flow and safety. Each station houses multiple edge devices processing continuous video streams, analyzing crowd density, detecting security incidents, and managing automated responses. The system processes over 10 terabytes of data daily, all while requiring real-time monitoring and management. This example underscores the immense complexity and critical nature of edge computing in today’s interconnected world.
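The daily volume is easier to reason about per station. The back-of-the-envelope calculation below rests on two assumptions that the source does not state: decimal units (1 TB = 10^12 bytes) and data spread evenly across stations and across the day.

```python
# Back-of-the-envelope throughput for the transit example.
# Assumptions (not from the source): decimal units (1 TB = 10**12 bytes)
# and load spread evenly across all stations and all hours of the day.
TB = 10**12
SECONDS_PER_DAY = 24 * 60 * 60


def per_station_rate(total_bytes_per_day: float, stations: int) -> tuple[float, float]:
    """Return (bytes per station per day, average megabits per second per station)."""
    per_station = total_bytes_per_day / stations
    mbps = per_station * 8 / SECONDS_PER_DAY / 10**6
    return per_station, mbps


daily_bytes, mbps = per_station_rate(10 * TB, 500)
print(f"{daily_bytes / 10**9:.0f} GB per station per day, about {mbps:.2f} Mbit/s sustained")
```

The source says "over" 10 terabytes, so treat these figures as a floor on the average. Any station or hour that is busier than average will need more.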

Key Challenges in Edge Deployments

The complexity of edge deployments brings several challenges that organizations must address:

Manual Deployment of Updates: Traditional methods often require on-site visits for updates, leading to significant operational overhead. For example, a retail chain with 1,000 locations might need three months and substantial resources just to complete a system-wide update.

Delayed Detection of System Failures: Late identification of issues can result in critical service interruptions. In a manufacturing plant, where edge devices monitor production line quality, even a 30-minute delay in detecting a malfunctioning sensor could lead to thousands of defective products.

Inconsistent Performance Across Locations: Variability in performance can affect service quality and user experience. This is particularly evident in applications like digital signage networks, where content delivery must be synchronized and smooth across all endpoints.

Building an Effective Edge Monitoring Solution

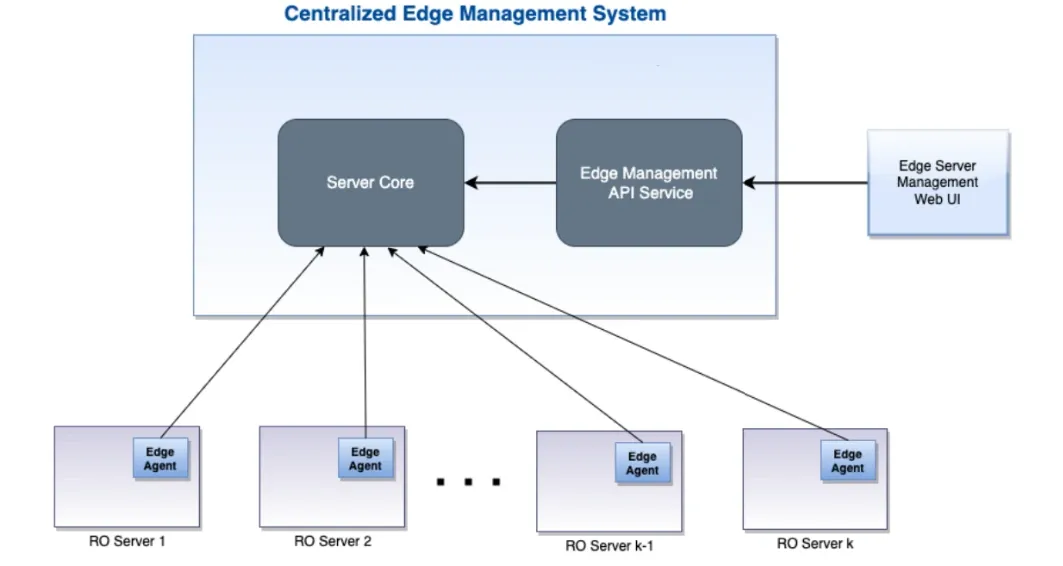

The core of an effective edge monitoring system consists of four integrated components working in harmony.

Edge Server Management Web UI

The Edge Server Management Web UI serves as the command center, providing administrators with a comprehensive dashboard for monitoring and controlling the entire infrastructure.

Edge Management API Service

The Edge Management API Service acts as the crucial intermediary layer, handling all communications between the web interface and the Server Core.

Server Core

The Server Core functions as the central brain of the system and manages all communication with the distributed edge agents. In one retail deployment, for example, the Server Core orchestrated simultaneous updates across all stores during off-peak hours, which cut deployment time from months to days.

Edge Agents (on RO Servers)

Edge Agents run on each Remote Operation (RO) Server and establish bidirectional communication with the Server Core. These lightweight components proved their worth in a recent manufacturing deployment, where they detected and automatically resolved 90% of potential issues before they could impact production.

How Edge System Monitoring Works

Edge system monitoring operates through a well-defined control and monitoring framework, ensuring efficient management of distributed devices. Here’s how it works:

Control Flow:

- Administrators interact with the Web UI.

- Commands flow through API Service → Server Core → Edge Agents.

- Edge Agents execute commands and report back through the same path, ensuring real-time updates.
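The sketch below makes the control path concrete in a single process. Each layer forwards the command and stamps the result on its way back. All class names (`WebUI`, `ApiService`, `ServerCore`, `EdgeAgent`), the allow-listed commands and the device IDs are hypothetical. In a real deployment, each hop would be a network call rather than a method call.

```python
from dataclasses import dataclass, field

# Hypothetical in-process model of the control path:
# Web UI -> API Service -> Server Core -> Edge Agent, with results returned the same way.
# Names are illustrative only; real layers would talk over the network.

ALLOWED_COMMANDS = {"restart_service", "collect_logs", "apply_update"}  # assumption


@dataclass
class CommandResult:
    device_id: str
    command: str
    ok: bool
    trace: list[str] = field(default_factory=list)  # layers the result passed through, in order


class EdgeAgent:
    def __init__(self, device_id: str):
        self.device_id = device_id

    def execute(self, command: str) -> CommandResult:
        # Stand-in for local execution: only allow-listed commands succeed.
        ok = command in ALLOWED_COMMANDS
        return CommandResult(self.device_id, command, ok, trace=[f"agent:{self.device_id}"])


class ServerCore:
    def __init__(self, agents: list[EdgeAgent]):
        self.agents = {a.device_id: a for a in agents}

    def dispatch(self, device_id: str, command: str) -> CommandResult:
        agent = self.agents.get(device_id)
        if agent is None:
            result = CommandResult(device_id, command, ok=False)  # unknown device
        else:
            result = agent.execute(command)
        result.trace.append("server_core")
        return result


class ApiService:
    def __init__(self, core: ServerCore):
        self.core = core

    def post_command(self, device_id: str, command: str) -> CommandResult:
        result = self.core.dispatch(device_id, command)
        result.trace.append("api_service")
        return result


class WebUI:
    def __init__(self, api: ApiService):
        self.api = api

    def send(self, device_id: str, command: str) -> CommandResult:
        result = self.api.post_command(device_id, command)
        result.trace.append("web_ui")
        return result


ui = WebUI(ApiService(ServerCore([EdgeAgent("store-001"), EdgeAgent("store-002")])))
print(ui.send("store-001", "restart_service"))
```

The `trace` field records the result retracing the command's path in reverse. That return path is what the control flow above relies on to show real-time status.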

Monitoring Flow:

- Edge Agents continuously gather local metrics (e.g., performance, health, and security data).

- Collected data is sent to the Server Core for aggregation and storage.

- Processed information is displayed in the Web UI via the API Service, providing actionable insights.
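Here is a minimal sketch of the monitoring flow. It assumes an in-memory store and hypothetical metric names (`cpu_pct`, `disk_used_pct`). On the agent side, it reads one real local metric with `shutil.disk_usage` and simulates the CPU samples. On the core side, it aggregates samples per device into the kind of summary the API Service would pass to the Web UI.

```python
import shutil
import statistics
import time
from collections import defaultdict

# Hypothetical sketch: an agent samples local metrics, the Server Core stores them per device,
# and a per-device summary is what the API Service would serve to the Web UI.
# Metric names and the in-memory store are assumptions; a real core would persist samples.


def collect_disk_used_pct(path="/"):
    """Agent side: one real local metric, the percentage of disk space in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


class MetricStore:
    """Core side: aggregate samples keyed by (device_id, metric)."""

    def __init__(self):
        self._samples = defaultdict(list)  # (device_id, metric) -> [(timestamp, value), ...]

    def ingest(self, device_id, metric, value, ts=None):
        self._samples[(device_id, metric)].append((time.time() if ts is None else ts, value))

    def summary(self, device_id, metric):
        """Summary for one device and metric, or None if nothing was reported."""
        values = [v for _, v in self._samples.get((device_id, metric), [])]
        if not values:
            return None
        return {
            "count": len(values),
            "mean": statistics.fmean(values),
            "max": max(values),
            "last": values[-1],
        }


store = MetricStore()
store.ingest("cam-17", "disk_used_pct", collect_disk_used_pct())
for cpu in (42.0, 55.5, 61.0):  # simulated CPU samples from the same agent
    store.ingest("cam-17", "cpu_pct", cpu)
print(store.summary("cam-17", "cpu_pct"))
```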

Deployment Process:

- Updates are initiated from the Web UI.

- Server Core coordinates deployment across selected edge devices.

- Edge Agents handle local installation and verification, ensuring seamless updates.
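The deployment steps above can be sketched as a batched rollout with local verification and rollback. The Server Core updates devices in batches, and each agent installs and verifies the update, rolling back if verification fails. The rollout halts when one batch's failure rate crosses a threshold. The batch size, the threshold, the version strings and the `healthy_after_update` flag are all hypothetical; the flag stands in for a real post-install health check.

```python
from dataclasses import dataclass

# Hypothetical sketch of a coordinated rollout. Batch size, failure threshold, and the
# healthy_after_update flag (a stand-in for a real post-install check) are assumptions.


@dataclass
class Device:
    device_id: str
    version: str
    healthy_after_update: bool = True  # simulates the agent's local verification result


def agent_install(device: Device, new_version: str) -> bool:
    """Agent side: install, verify, and roll back locally if verification fails."""
    previous = device.version
    device.version = new_version
    if not device.healthy_after_update:
        device.version = previous  # local rollback
        return False
    return True


def staged_rollout(devices, new_version, batch_size=2, max_failure_rate=0.25):
    """Core side: update in batches and stop before the next batch if too many fail."""
    updated, failed = [], []
    for start in range(0, len(devices), batch_size):
        batch = devices[start:start + batch_size]
        batch_failed = [d.device_id for d in batch if not agent_install(d, new_version)]
        failed.extend(batch_failed)
        updated.extend(d.device_id for d in batch if d.device_id not in batch_failed)
        if len(batch_failed) / len(batch) > max_failure_rate:
            return {"status": "halted", "updated": updated, "failed": failed}
    return {"status": "complete", "updated": updated, "failed": failed}


fleet = [Device(f"store-{i:03d}", "1.4.0") for i in range(1, 7)]
fleet[3].healthy_after_update = False  # store-004 fails its post-update check
result = staged_rollout(fleet, "1.5.0")
print(result)
```

Halting after a bad batch limits how many sites a faulty update can reach. To schedule updates for off-peak hours, the same loop would simply run inside a maintenance window.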

Failure Handling:

- Edge Agents detect local failures or anomalies.

- Server Core receives alerts and initiates recovery procedures.

- System status is updated in real-time on the Web UI.
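An agent can only report failures while it is still running. A common complement is for the Server Core to watch for missing heartbeats. The sketch below marks a device offline when no heartbeat arrives within a timeout and calls a recovery hook once per failure. The 30-second timeout, the callback and the device names are assumptions. The clock is injectable so the example can run without waiting.

```python
import time

# Hypothetical sketch: the Server Core tracks agent heartbeats, marks stale devices offline,
# and triggers a recovery hook once per failure. Timeout and callback are assumptions.


class HeartbeatMonitor:
    def __init__(self, timeout_s=30.0, on_failure=None, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.on_failure = on_failure or (lambda device_id: None)
        self.clock = clock
        self.last_seen = {}  # device_id -> time of last heartbeat
        self.status = {}     # device_id -> "online" / "offline", as shown in the Web UI

    def heartbeat(self, device_id):
        self.last_seen[device_id] = self.clock()
        self.status[device_id] = "online"

    def check(self):
        """Mark stale devices offline and call the recovery hook for newly failed ones."""
        now = self.clock()
        newly_failed = []
        for device_id, seen in self.last_seen.items():
            if now - seen > self.timeout_s and self.status[device_id] == "online":
                self.status[device_id] = "offline"
                newly_failed.append(device_id)
                self.on_failure(device_id)
        return newly_failed


fake_now = [0.0]  # simulated clock, in seconds
recovered = []
mon = HeartbeatMonitor(timeout_s=30.0, on_failure=recovered.append, clock=lambda: fake_now[0])
mon.heartbeat("line-a-sensor")
mon.heartbeat("line-b-sensor")
fake_now[0] = 20.0
mon.heartbeat("line-b-sensor")
fake_now[0] = 45.0
failed_now = mon.check()
print(failed_now, mon.status, recovered)
```

With this design, worst-case detection latency is roughly the timeout plus the interval between `check()` calls. That is the setting that separates detection in under a minute from the 30-minute delay described in the manufacturing example.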

Implementation and Performance Optimization of Edge Monitoring Systems

Implementing edge monitoring requires a focus on performance optimization, security, and advanced monitoring capabilities. Here’s how these elements come together:

Intelligent Resource Allocation

- Resources are allocated dynamically across edge devices based on real-time demand.

- Machine learning algorithms predict resource needs, optimizing performance proactively.

- Real-time capacity planning ensures the system scales seamlessly with growing demands.
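Demand-driven allocation can be illustrated with a much simpler predictor than machine learning. The sketch uses an exponentially weighted moving average (EWMA) to forecast each device's demand, then splits a shared capacity budget in proportion to the forecasts. EWMA is a plainly swapped-in technique, not the model the text refers to. The capacity units, the smoothing factor `alpha` and the device names are assumptions.

```python
# Hypothetical sketch: forecast per-device demand with an EWMA (a simple stand-in for the
# ML predictor mentioned above) and split a capacity budget proportionally to the forecasts.


def ewma_forecast(history, alpha=0.5):
    """One-step-ahead forecast: s = alpha * x + (1 - alpha) * s, seeded with the first value."""
    if not history:
        raise ValueError("history must not be empty")
    s = history[0]
    for x in history[1:]:
        s = alpha * x + (1 - alpha) * s
    return s


def allocate(capacity, demand_history, alpha=0.5):
    """Split capacity across devices in proportion to forecast demand."""
    if not demand_history:
        return {}
    forecasts = {dev: ewma_forecast(h, alpha) for dev, h in demand_history.items()}
    total = sum(forecasts.values())
    if total == 0:
        share = capacity / len(forecasts)  # no demand signal: split evenly
        return {dev: share for dev in forecasts}
    return {dev: capacity * f / total for dev, f in forecasts.items()}


history = {
    "cam-entrance": [2.0, 2.0, 4.0, 6.0],   # demand rising
    "cam-stockroom": [2.0, 2.0, 2.0, 2.0],  # steady demand
}
print(allocate(8.0, history))  # capacity units are illustrative, e.g. GPU-seconds per minute
```

A larger `alpha` reacts faster to demand spikes but is noisier. A learned model could replace `ewma_forecast` without changing the allocation step.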

Security frameworks form another crucial component of edge monitoring systems. A regional bank's edge computing network, for instance, illustrates the effectiveness of the following approach:

Zero Trust Architecture Integration

- Continuous authentication and authorization checks ensure only trusted devices and users access the network.

- Cryptographic validation secures communications between edge agents and the core.

- Automated enforcement of security policies minimizes vulnerabilities.
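Cryptographic validation between agents and the core can take several forms. The sketch below uses per-device HMAC-SHA256 signatures from Python's standard library and checks every message, in keeping with the zero trust principle of never trusting by default. The key table, the message envelope and the 60-second freshness window are assumptions for illustration. Key provisioning is out of scope, and mutual TLS with per-device certificates is a common production alternative.

```python
import hashlib
import hmac
import json
import time

# Hypothetical sketch of per-message validation between an edge agent and the Server Core.
# Each agent holds its own secret key; the core rejects unknown devices, bad signatures,
# and stale messages. Envelope format and MAX_SKEW_S are assumptions.

MAX_SKEW_S = 60  # accept messages at most 60 seconds from the core's clock


def sign(device_id, key, payload, ts):
    """Agent side: serialize deterministically and attach an HMAC-SHA256 signature."""
    body = json.dumps({"device_id": device_id, "ts": ts, "payload": payload},
                      sort_keys=True, separators=(",", ":"))
    mac = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"device_id": device_id, "body": body, "mac": mac}


def verify(message, keys, now):
    """Core side: return the decoded body if authentic and fresh, otherwise None."""
    key = keys.get(message["device_id"])
    if key is None:
        return None  # unknown device: never trusted by default
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, message["mac"]):
        return None  # tampered, or signed with a different key
    body = json.loads(message["body"])  # parsed only after authentication
    if body["device_id"] != message["device_id"]:
        return None  # envelope does not match the signed body
    if abs(now - body["ts"]) > MAX_SKEW_S:
        return None  # stale: narrows, but does not eliminate, the replay window
    return body


keys = {"branch-12-kiosk": b"example-key-do-not-use"}
now = time.time()
msg = sign("branch-12-kiosk", keys["branch-12-kiosk"], {"cpu_pct": 37.5}, now)
print(verify(msg, keys, now) is not None)  # authentic and fresh
tampered = dict(msg, body=msg["body"].replace("37.5", "99.9"))
print(verify(tampered, keys, now))  # signature no longer matches
```

`hmac.compare_digest` is used instead of `==` so that comparison time does not reveal how much of the signature matched. Blocking replays inside the freshness window would additionally require a nonce cache.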

Advanced Monitoring Capabilities

The implementation of advanced monitoring capabilities has transformed edge computing management. For example, a large urban transit system's monitoring infrastructure demonstrates the power of these capabilities.

- The alert management system employs a multi-level approach to issue detection and response.

- Critical alerts, triggered by sustained high CPU usage, memory constraints, or security breach attempts, initiate immediate response protocols. In practice, this system has achieved 99.99% uptime and alert notification times under 5 seconds, minimizing downtime and risk.
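The word "sustained" matters here. A single CPU spike should not page anyone, while a threshold exceeded for several consecutive samples should. The sketch below evaluates multi-level rules this way and sends a security signal straight to CRITICAL. The metric names, thresholds, window lengths and the two severity levels are hypothetical.

```python
from collections import deque

# Hypothetical multi-level alert rules: an alert fires only when a metric exceeds its threshold
# for N consecutive samples. For each metric, rules are listed most severe first.
RULES = [
    # (metric, threshold, consecutive samples required, level)
    ("cpu_pct", 95.0, 5, "CRITICAL"),
    ("cpu_pct", 80.0, 5, "WARNING"),
    ("mem_pct", 90.0, 3, "CRITICAL"),
    ("failed_auth_per_min", 10.0, 1, "CRITICAL"),  # security signal: no waiting period
]


class AlertEvaluator:
    def __init__(self, rules=RULES):
        self.rules = rules
        self.maxlen = max(n for _, _, n, _ in rules)  # longest window any rule needs
        self.windows = {}  # (device, metric) -> deque of recent samples

    def observe(self, device, metric, value):
        """Record a sample and return the most severe alert level triggered, or None."""
        window = self.windows.setdefault((device, metric), deque(maxlen=self.maxlen))
        window.append(value)
        samples = list(window)
        for rule_metric, threshold, n, level in self.rules:
            if rule_metric != metric:
                continue
            recent = samples[-n:]
            if len(recent) == n and all(v > threshold for v in recent):
                return level
        return None


ev = AlertEvaluator()
levels = [ev.observe("station-42", "cpu_pct", v) for v in [85, 88, 97, 96, 98, 99, 97]]
print(levels)
```

Requiring consecutive samples trades a few sampling intervals of detection latency for fewer false alarms from momentary spikes. The window length therefore has to be chosen with a notification target, such as the 5-second figure above, in mind.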

Edge system monitoring has become essential in today's distributed computing landscape. Whether managing a handful of devices or thousands of distributed systems, the right monitoring solution transforms edge operations from a complex challenge into a strategic advantage. Through careful implementation of these monitoring principles, organizations can ensure optimal performance while minimizing costs and maintenance overhead.

The examples highlighted throughout this blog demonstrate that effective edge monitoring isn't just about technology; it's about enabling businesses to scale their edge AI operations efficiently and reliably. As edge computing continues to evolve, robust monitoring solutions will remain the backbone of successful deployments.

At Random Walk, we specialize in delivering advanced AI-powered monitoring solutions tailored to your unique needs. From real-time performance optimization to advanced security frameworks, our tools empower businesses to achieve the full potential of edge computing.